1. Digraph3 Tutorials

- Author:

Raymond Bisdorff, Emeritus Professor of Applied Mathematics and Computer Science, University of Luxembourg

- Url:

- Version:

Python 3.12 (release: 3.12.3)

- PDF version:

- Copyright:

R. Bisdorff 2013-2024

- New:

A tutorial on using multiprocessing resources when tackling large performance tableaux with several hundreds of decision alternatives.

Two tutorials on computing fair intergroup and fair intragroup pairing solutions

1.1. Contents

Working with digraphs and outranking digraphs

Evaluation and decision models and tools

Evaluation and decision case studies

Working with big outranking digraphs

Moving on to undirected graphs

Preface

The tutorials in this document describe the practical usage of our Digraph3 Python3 software resources in the field of Algorithmic Decision Theory and more specifically in outranking based Multiple Criteria Decision Aid (MCDA). They mainly illustrate practical tools for a Master Course on Algorithmic Decision Theory at the University of Luxembourg. The document contains first a set of tutorials introducing the main objects available in the Digraph3 collection of Python3 modules, like bipolar-valued digraphs, outranking digraphs, and multicriteria performance tableaux. The second and methodological part of this tutorials is decision problem oriented and shows how to edit multicriteria performance tableaux, how to compute the potential winner(s) of an election, how to build a best choice recommendation, and how to rate or linearly rank with multiple incommensurable performance criteria. A third part presents three evaluation and decision case studies. A fourth part with more graph theoretical tutorials follows. One on working with undirected graphs, followed by a tutorial on how to compute non isomorphic maximal independent sets (kernels) in the n-cycle graph. Special tutorials are devoted to perfect graphs, like split, interval and permutation graphs, and to tree-graphs and forests. Finally we discuss the fair intergroup and intragroup pairing problem.

- Appendices

1.2. Working with digraphs and outranking digraphs

This first part of the tutorials introduces the Digraph3 software collection of Python programming resources.

1.2.1. Working with the Digraph3 software resources

1.2.1.1. Purpose

The basic idea of the Digraph3 Python resources is to make easy python interactive sessions or write short Python3 scripts for computing all kind of results from a bipolar-valued digraph or graph. These include such features as maximal independent, maximal dominant or absorbent choices, rankings, outrankings, linear ordering, etc. Most of the available computing resources are meant to illustrate a Master Course on Algorithmic Decision Theory given at the University of Luxembourg in the context of its Master in Information and Computer Science (MICS).

The Python development of these computing resources offers the advantage of an easy to write and maintain OOP source code as expected from a performing scripting language without loosing on efficiency in execution times compared to compiled languages such as C++ or Java.

1.2.1.2. Downloading of the Digraph3 resources

Using the Digraph3 modules is easy. You only need to have installed on your system the Python programming language of version 3.+ (readily available under Linux and Mac OS).

Several download options (easiest under Linux or Mac OS-X) are given.

(Recommended) With a browser access, download and extract the latest distribution zip archive from

By using a git client either, cloning from github

...$ git clone https://github.com/rbisdorff/Digraph3

Or, from sourceforge.net

...$ git clone https://git.code.sf.net/p/digraph3/code Digraph3

See the Installation section in the Technical Reference.

1.2.1.3. Starting a Python3 terminal session

You may start an interactive Python3 terminal session in the Digraph3 directory.

1$HOME/.../Digraph3$ python3

2Python 3.10.0 (default, Oct 21 2021, 10:53:53)

3[GCC 11.2.0] on linux Type "help", "copyright",

4"credits" or "license" for more information.

5>>>

For exploring the classes and methods provided by the Digraph3 modules (see the Reference manual) enter the Python3 commands following the session prompts marked with >>> or ... . The lines without the prompt are console output from the Python3 interpreter.

1>>> from randomDigraphs import RandomDigraph

2>>> dg = RandomDigraph(order=5,arcProbability=0.5,seed=101)

3>>> dg

4 *------- Digraph instance description ------*

5 Instance class : RandomDigraph

6 Instance name : randomDigraph

7 Digraph Order : 5

8 Digraph Size : 12

9 Valuation domain : [-1.00; 1.00]

10 Determinateness : 100.000

11 Attributes : ['actions', 'valuationdomain', 'relation',

12 'order', 'name', 'gamma', 'notGamma',

13 'seed', 'arcProbability', ]

In Listing 1.1 we import, for instance, from the randomDigraphs module the RandomDigraph class in order to generate a random digraph object dg of order 5 - number of nodes called (decision) actions - and arc probability of 50%. We may directly inspect the content of python object dg (Line 3).

Note

For convenience of redoing the computations, all python code-blocks show in the upper right corner a specific copy button which allows to both copy only code lines, i.e. lines starting with ‘>>>’ or ‘…’, and stripping the console prompts. The copied code lines may hence be right away pasted into a Python console session.

1.2.1.4. Digraph object structure

All Digraph objects contain at least the following attributes (see Listing 1.1 Lines 11-12):

A name attribute, holding usually the actual name of the stored instance that was used to create the instance;

A ordered dictionary of digraph nodes called actions (decision alternatives) with at least a ‘name’ attribute;

An order attribute containing the number of graph nodes (length of the actions dictionary) automatically added by the object constructor;

A logical characteristic valuationdomain dictionary with three decimal entries: the minimum (-1.0, means certainly false), the median (0.0, means missing information) and the maximum characteristic value (+1.0, means certainly true);

A double dictionary called relation and indexed by an oriented pair of actions (nodes) and carrying a decimal characteristic value in the range of the previous valuation domain;

Its associated gamma attribute, a dictionary containing the direct successors, respectively predecessors of each action, automatically added by the object constructor;

Its associated notGamma attribute, a dictionary containing the actions that are not direct successors respectively predecessors of each action, automatically added by the object constructor.

See the technical documentation of the root digraphs.Digraph class.

1.2.1.5. Permanent storage

The save() method stores the digraph object dg in a file named ‘tutorialDigraph.py’,

>>> dg.save('tutorialDigraph')

*--- Saving digraph in file: <tutorialDigraph.py> ---*

with the following content

1from decimal import Decimal

2from collections import OrderedDict

3actions = OrderedDict([

4 ('a1', {'shortName': 'a1', 'name': 'random decision action'}),

5 ('a2', {'shortName': 'a2', 'name': 'random decision action'}),

6 ('a3', {'shortName': 'a3', 'name': 'random decision action'}),

7 ('a4', {'shortName': 'a4', 'name': 'random decision action'}),

8 ('a5', {'shortName': 'a5', 'name': 'random decision action'}),

9 ])

10valuationdomain = {'min': Decimal('-1.0'),

11 'med': Decimal('0.0'),

12 'max': Decimal('1.0'),

13 'hasIntegerValuation': True, # repr. format

14 }

15relation = {

16 'a1': {'a1':Decimal('-1.0'), 'a2':Decimal('-1.0'),

17 'a3':Decimal('1.0'), 'a4':Decimal('-1.0'),

18 'a5':Decimal('-1.0'),},

19 'a2': {'a1':Decimal('1.0'), 'a2':Decimal('-1.0'),

20 'a3':Decimal('-1.0'), 'a4':Decimal('1.0'),

21 'a5':Decimal('1.0'),},

22 'a3': {'a1':Decimal('1.0'), 'a2':Decimal('-1.0'),

23 'a3':Decimal('-1.0'), 'a4':Decimal('1.0'),

24 'a5':Decimal('-1.0'),},

25 'a4': {'a1':Decimal('1.0'), 'a2':Decimal('1.0'),

26 'a3':Decimal('1.0'), 'a4':Decimal('-1.0'),

27 'a5':Decimal('-1.0'),},

28 'a5': {'a1':Decimal('1.0'), 'a2':Decimal('1.0'),

29 'a3':Decimal('1.0'), 'a4':Decimal('-1.0'),

30 'a5':Decimal('-1.0'),},

31 }

1.2.1.6. Inspecting a Digraph object

We may reload (see Listing 1.2) the previously saved digraph object from the file named ‘tutorialDigraph.py’ with the Digraph class constructor and different show methods (see Listing 1.2 below) reveal us that dg is a crisp, irreflexive and connected digraph of order five.

1>>> from digraphs import Digraph

2>>> dg = Digraph('tutorialDigraph')

3>>> dg.showShort()

4 *----- show short -------------*

5 Digraph : tutorialDigraph

6 Actions : OrderedDict([

7 ('a1', {'shortName': 'a1', 'name': 'random decision action'}),

8 ('a2', {'shortName': 'a2', 'name': 'random decision action'}),

9 ('a3', {'shortName': 'a3', 'name': 'random decision action'}),

10 ('a4', {'shortName': 'a4', 'name': 'random decision action'}),

11 ('a5', {'shortName': 'a5', 'name': 'random decision action'})

12 ])

13 Valuation domain : {

14 'min': Decimal('-1.0'),

15 'max': Decimal('1.0'),

16 'med': Decimal('0.0'), 'hasIntegerValuation': True

17 }

18>>> dg.showRelationTable()

19 * ---- Relation Table -----

20 S | 'a1' 'a2' 'a3' 'a4' 'a5'

21 ------|-------------------------------

22 'a1' | -1 -1 1 -1 -1

23 'a2' | 1 -1 -1 1 1

24 'a3' | 1 -1 -1 1 -1

25 'a4' | 1 1 1 -1 -1

26 'a5' | 1 1 1 -1 -1

27 Valuation domain: [-1;+1]

28>>> dg.showComponents()

29 *--- Connected Components ---*

30 1: ['a1', 'a2', 'a3', 'a4', 'a5']

31>>> dg.showNeighborhoods()

32 Neighborhoods:

33 Gamma :

34 'a1': in => {'a2', 'a4', 'a3', 'a5'}, out => {'a3'}

35 'a2': in => {'a5', 'a4'}, out => {'a1', 'a4', 'a5'}

36 'a3': in => {'a1', 'a4', 'a5'}, out => {'a1', 'a4'}

37 'a4': in => {'a2', 'a3'}, out => {'a1', 'a3', 'a2'}

38 'a5': in => {'a2'}, out => {'a1', 'a3', 'a2'}

39 Not Gamma :

40 'a1': in => set(), out => {'a2', 'a4', 'a5'}

41 'a2': in => {'a1', 'a3'}, out => {'a3'}

42 'a3': in => {'a2'}, out => {'a2', 'a5'}

43 'a4': in => {'a1', 'a5'}, out => {'a5'}

44 'a5': in => {'a1', 'a4', 'a3'}, out => {'a4'}

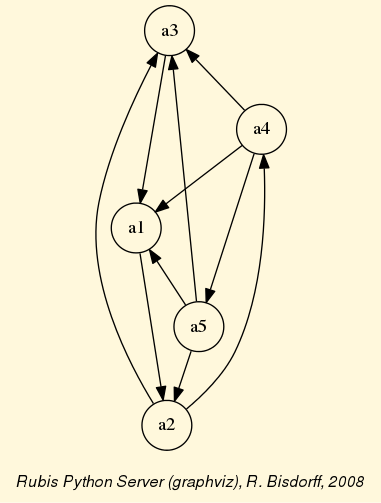

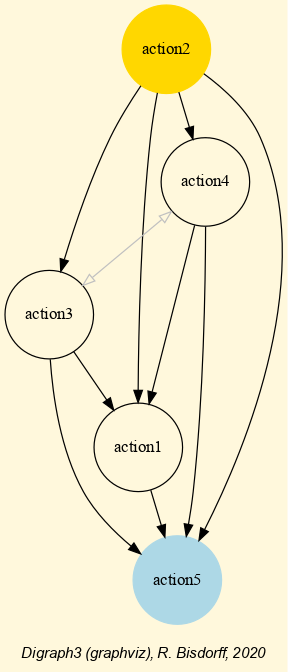

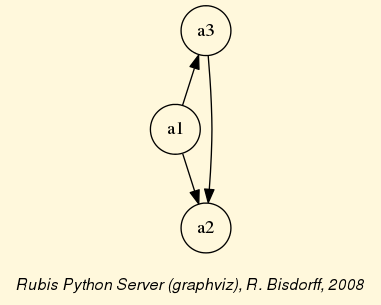

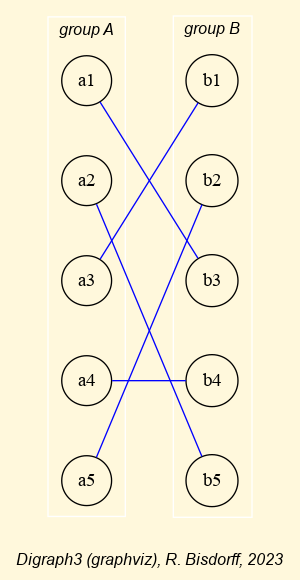

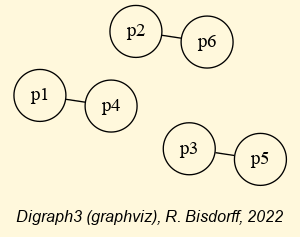

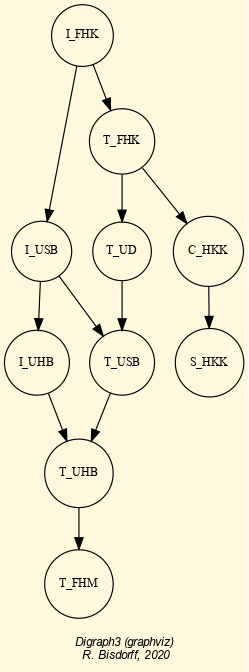

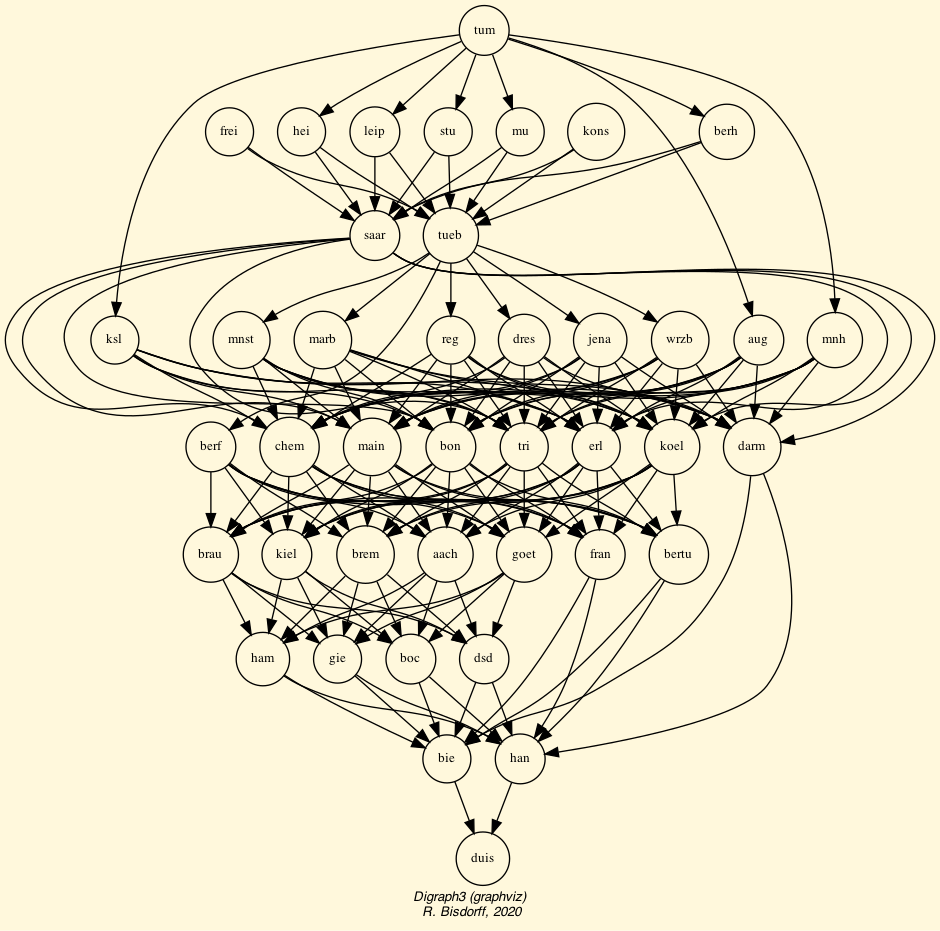

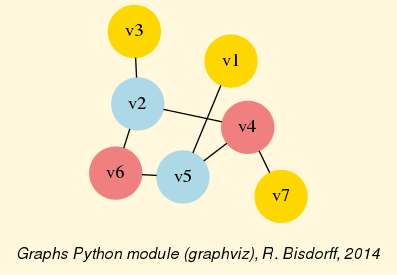

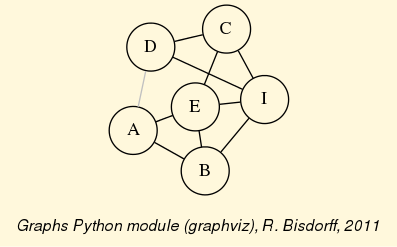

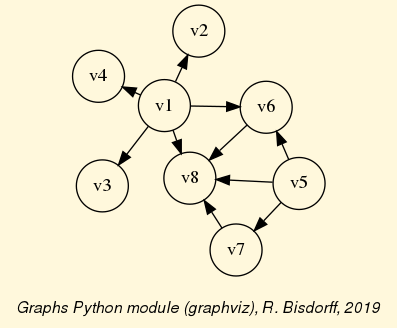

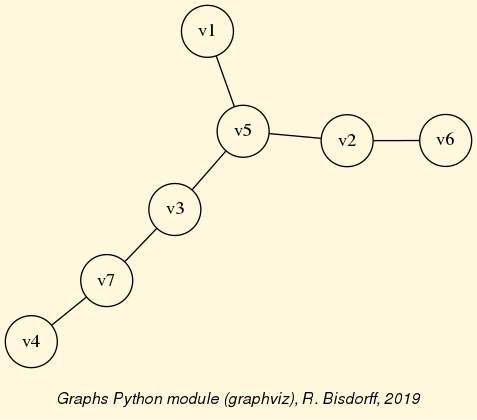

The exportGraphViz() method generates in

the current working directory a ‘tutorialDigraph.dot’ file and a

‘tutorialdigraph.png’ picture of the tutorial digraph dg (see Fig. 1.1), if the graphviz tools are installed on your system [1].

1>>> dg.exportGraphViz('tutorialDigraph')

2 *---- exporting a dot file do GraphViz tools ---------*

3 Exporting to tutorialDigraph.dot

4 dot -Grankdir=BT -Tpng tutorialDigraph.dot -o tutorialDigraph.png

Fig. 1.1 The tutorial crisp digraph

Further methods are provided for inspecting this Digraph object dg , like the following showStatistics() method.

1>>> dg.showStatistics()

2 *----- general statistics -------------*

3 for digraph : <tutorialDigraph.py>

4 order : 5 nodes

5 size : 12 arcs

6 # undetermined : 0 arcs

7 determinateness (%) : 100.0

8 arc density : 0.60

9 double arc density : 0.40

10 single arc density : 0.40

11 absence density : 0.20

12 strict single arc density: 0.40

13 strict absence density : 0.20

14 # components : 1

15 # strong components : 1

16 transitivity degree (%) : 60.0

17 : [0, 1, 2, 3, 4, 5]

18 outdegrees distribution : [0, 1, 1, 3, 0, 0]

19 indegrees distribution : [0, 1, 2, 1, 1, 0]

20 mean outdegree : 2.40

21 mean indegree : 2.40

22 : [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

23 symmetric degrees dist. : [0, 0, 0, 0, 1, 4, 0, 0, 0, 0, 0]

24 mean symmetric degree : 4.80

25 outdegrees concentration index : 0.1667

26 indegrees concentration index : 0.2333

27 symdegrees concentration index : 0.0333

28 : [0, 1, 2, 3, 4, 'inf']

29 neighbourhood depths distribution: [0, 1, 4, 0, 0, 0]

30 mean neighbourhood depth : 1.80

31 digraph diameter : 2

32 agglomeration distribution :

33 a1 : 58.33

34 a2 : 33.33

35 a3 : 33.33

36 a4 : 50.00

37 a5 : 50.00

38 agglomeration coefficient : 45.00

These show methods usually rely upon corresponding compute methods, like the computeSize(), the computeDeterminateness() or the computeTransitivityDegree() method (see Listing 1.3 Line 5,7,16).

1>>> dg.computeSize()

2 12

3>>> dg.computeDeterminateness(InPercents=True)

4 Decimal('100.00')

5>>> dg.computeTransitivityDegree(InPercents=True)

6 Decimal('60.00')

Mind that show methods output their results in the Python console. We provide also some showHTML methods which output their results in a system browser’s window.

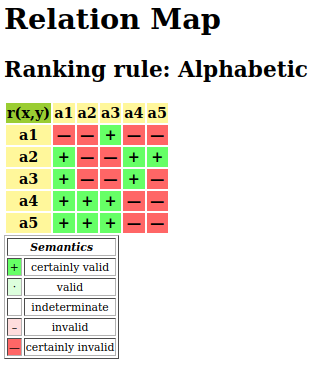

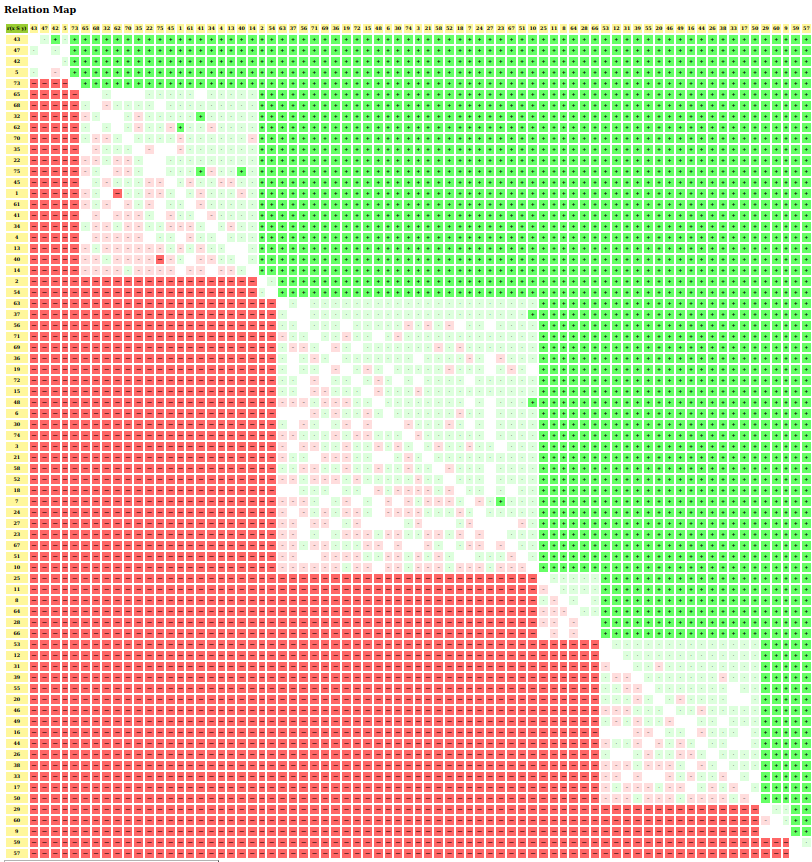

>>> dg.showHTMLRelationMap(relationName='r(x,y)',rankingRule=None)

Fig. 1.2 Browsing the relation map of the tutorial digraph

In Fig. 1.2 we find confirmed again that our random digraph instance dg, is indeed a crisp, i.e. 100% determined digraph instance.

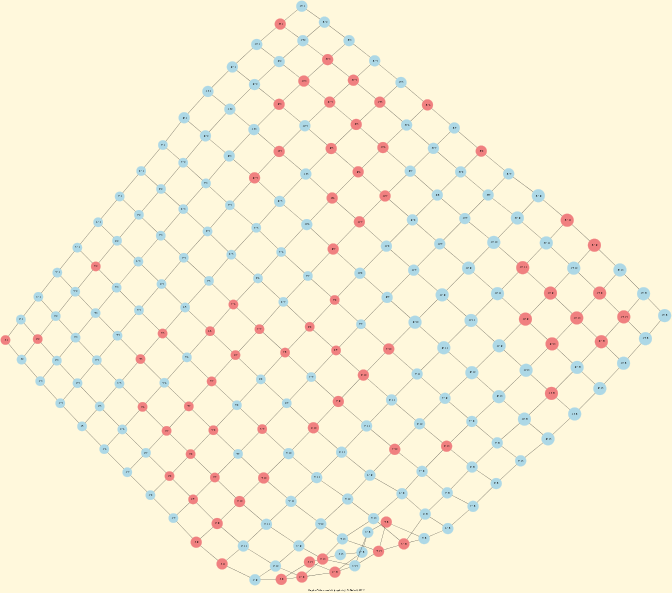

1.2.1.7. Special Digraph instances

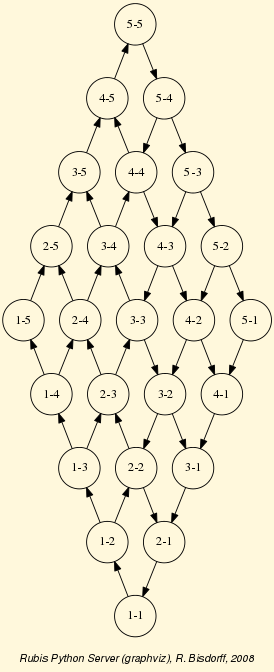

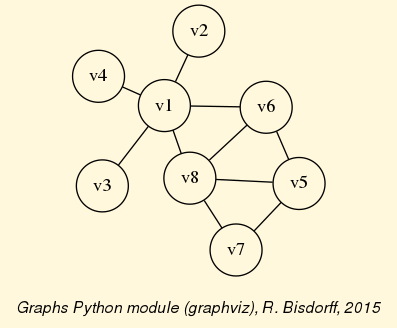

Some constructors for universal digraph instances, like the CompleteDigraph, the EmptyDigraph or the circular oriented GridDigraph constructor, are readily available (see Fig. 1.3).

1>>> from digraphs import GridDigraph

2>>> grid = GridDigraph(n=5,m=5,hasMedianSplitOrientation=True)

3>>> grid.exportGraphViz('tutorialGrid')

4 *---- exporting a dot file for GraphViz tools ---------*

5 Exporting to tutorialGrid.dot

6 dot -Grankdir=BT -Tpng TutorialGrid.dot -o tutorialGrid.png

Fig. 1.3 The 5x5 grid graph median split oriented

For more information about its resources, see the technical documentation of the digraphs module.

Back to Content Table

1.2.2. Working with the digraphs module

1.2.2.1. Random digraphs

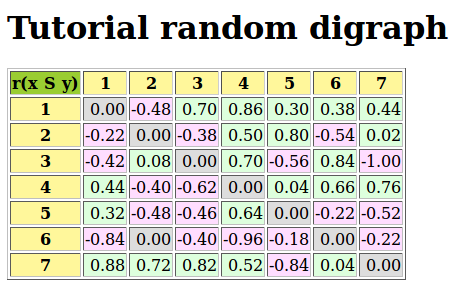

We are starting this tutorial with generating a uniformly random [-1.0; +1.0]-valued digraph of order 7, denoted rdg and modelling, for instance, a binary relation (x S y) defined on the set of nodes of rdg. For this purpose, the Digraph3 collection contains a randomDigraphs module providing a specific RandomValuationDigraph constructor.

1>>> from randomDigraphs import RandomValuationDigraph

2>>> rdg = RandomValuationDigraph(order=7)

3>>> rdg.save('tutRandValDigraph')

4>>> from digraphs import Digraph

5>>> rdg = Digraph('tutRandValDigraph')

6>>> rdg

7 *------- Digraph instance description ------*

8 Instance class : Digraph

9 Instance name : tutRandValDigraph

10 Digraph Order : 7

11 Digraph Size : 22

12 Valuation domain : [-1.00;1.00]

13 Determinateness (%) : 75.24

14 Attributes : ['name', 'actions', 'order',

15 'valuationdomain', 'relation',

16 'gamma', 'notGamma']

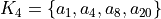

With the save() method (see Listing 1.4 Line 3) we may keep a backup version for future use of rdg which will be stored in a file called tutRandValDigraph.py in the current working directory. The genric Digraph class constructor may restore the rdg object from the stored file (Line 4). We may easily inspect the content of rdg (Lines 5). The digraph size 22 indicates the number of positively valued arcs. The valuation domain is uniformly distributed in the interval ![[-1.0; 1.0]](_images/math/30173f024d28e69ed5ab382b31bdc7cb1df97065.png) and the mean absolute arc valuation is

and the mean absolute arc valuation is  (Line 12) .

(Line 12) .

All Digraph objects contain at least the list of attributes shown here: a name (string), a dictionary of actions (digraph nodes), an order (integer) attribute containing the number of actions, a valuationdomain dictionary, a double dictionary relation representing the adjency table of the digraph relation, a gamma and a notGamma dictionary containing the direct neighbourhood of each action.

As mentioned previously, the Digraph class provides some generic show… methods for exploring a given Digraph object, like the showShort(), showAll(), showRelationTable() and the showNeighborhoods() methods.

1>>> rdg.showAll()

2 *----- show detail -------------*

3 Digraph : tutRandValDigraph

4 *---- Actions ----*

5 ['1', '2', '3', '4', '5', '6', '7']

6 *---- Characteristic valuation domain ----*

7 {'med': Decimal('0.0'), 'hasIntegerValuation': False,

8 'min': Decimal('-1.0'), 'max': Decimal('1.0')}

9 * ---- Relation Table -----

10 r(xSy) | '1' '2' '3' '4' '5' '6' '7'

11 -------|-------------------------------------------

12 '1' | 0.00 -0.48 0.70 0.86 0.30 0.38 0.44

13 '2' | -0.22 0.00 -0.38 0.50 0.80 -0.54 0.02

14 '3' | -0.42 0.08 0.00 0.70 -0.56 0.84 -1.00

15 '4' | 0.44 -0.40 -0.62 0.00 0.04 0.66 0.76

16 '5' | 0.32 -0.48 -0.46 0.64 0.00 -0.22 -0.52

17 '6' | -0.84 0.00 -0.40 -0.96 -0.18 0.00 -0.22

18 '7' | 0.88 0.72 0.82 0.52 -0.84 0.04 0.00

19 *--- Connected Components ---*

20 1: ['1', '2', '3', '4', '5', '6', '7']

21 Neighborhoods:

22 Gamma:

23 '1': in => {'5', '7', '4'}, out => {'5', '7', '6', '3', '4'}

24 '2': in => {'7', '3'}, out => {'5', '7', '4'}

25 '3': in => {'7', '1'}, out => {'6', '2', '4'}

26 '4': in => {'5', '7', '1', '2', '3'}, out => {'5', '7', '1', '6'}

27 '5': in => {'1', '2', '4'}, out => {'1', '4'}

28 '6': in => {'7', '1', '3', '4'}, out => set()

29 '7': in => {'1', '2', '4'}, out => {'1', '2', '3', '4', '6'}

30 Not Gamma:

31 '1': in => {'6', '2', '3'}, out => {'2'}

32 '2': in => {'5', '1', '4'}, out => {'1', '6', '3'}

33 '3': in => {'5', '6', '2', '4'}, out => {'5', '7', '1'}

34 '4': in => {'6'}, out => {'2', '3'}

35 '5': in => {'7', '6', '3'}, out => {'7', '6', '2', '3'}

36 '6': in => {'5', '2'}, out => {'5', '7', '1', '3', '4'}

37 '7': in => {'5', '6', '3'}, out => {'5'}

Warning

Mind that most Digraph class methods will ignore the reflexive links by considering that they are indeterminate, i.e. the characteristic value  for all action x is set to the median, i.e. indeterminate value 0.0 in this case (see Listing 1.5 Lines 12-18 and [BIS-2004a]).

for all action x is set to the median, i.e. indeterminate value 0.0 in this case (see Listing 1.5 Lines 12-18 and [BIS-2004a]).

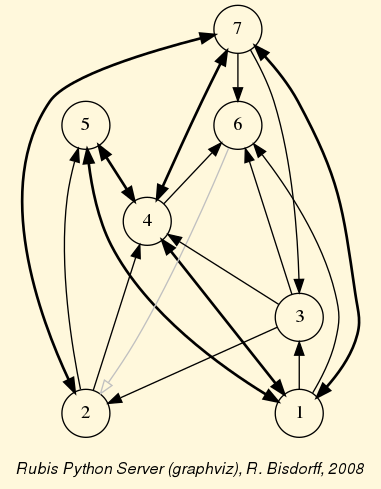

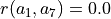

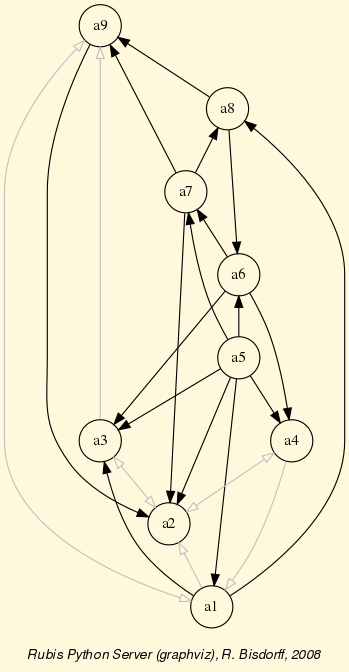

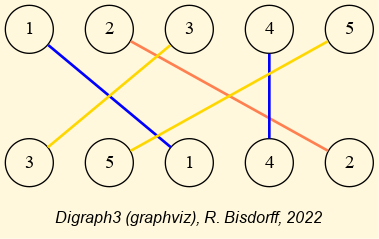

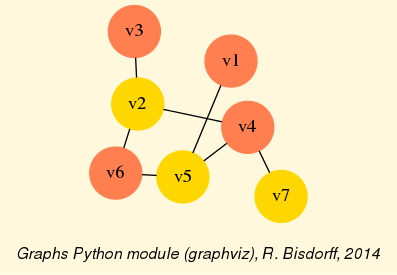

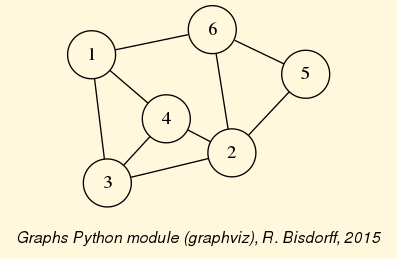

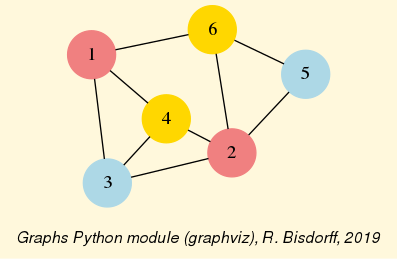

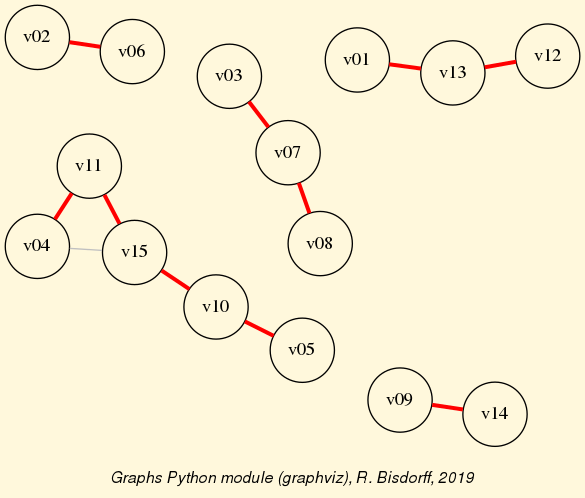

1.2.2.2. Graphviz drawings

We may even get a better insight into the Digraph object rdg by looking at a graphviz drawing [1] .

1>>> rdg.exportGraphViz('tutRandValDigraph')

2 *---- exporting a dot file for GraphViz tools ---------*

3 Exporting to tutRandValDigraph.dot

4 dot -Grankdir=BT -Tpng tutRandValDigraph.dot -o tutRandValDigraph.png

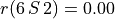

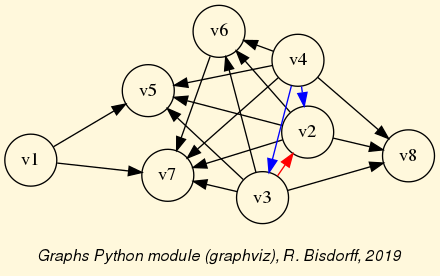

Fig. 1.4 The tutorial random valuation digraph

Double links are drawn in bold black with an arrowhead at each end, whereas single asymmetric links are drawn in black with an arrowhead showing the direction of the link. Notice the undetermined relational situation ( ) observed between nodes ‘6’ and ‘2’. The corresponding link is marked in gray with an open arrowhead in the drawing (see Fig. 1.4).

) observed between nodes ‘6’ and ‘2’. The corresponding link is marked in gray with an open arrowhead in the drawing (see Fig. 1.4).

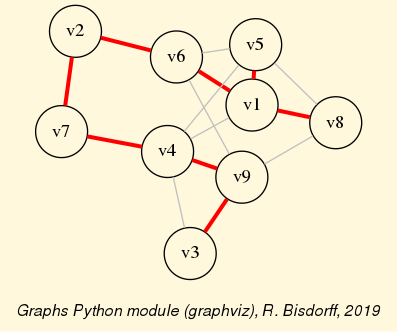

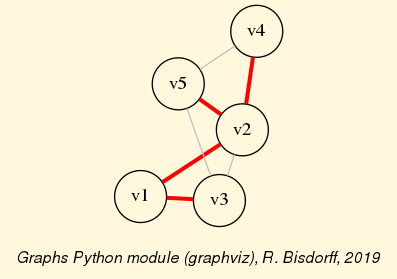

1.2.2.3. Asymmetric and symmetric parts

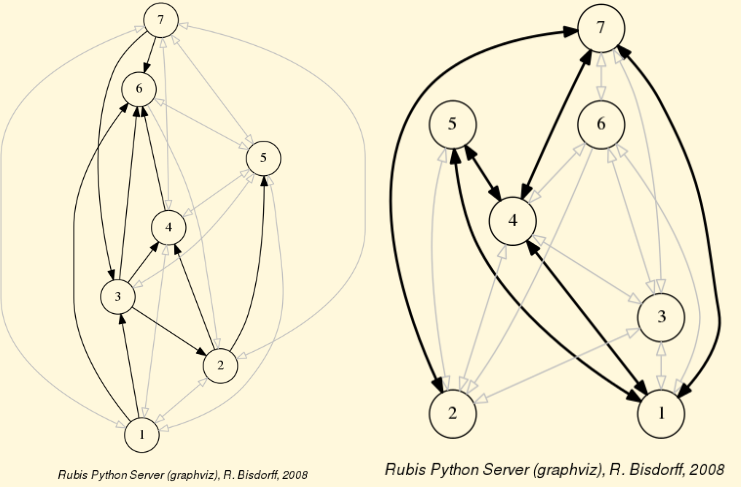

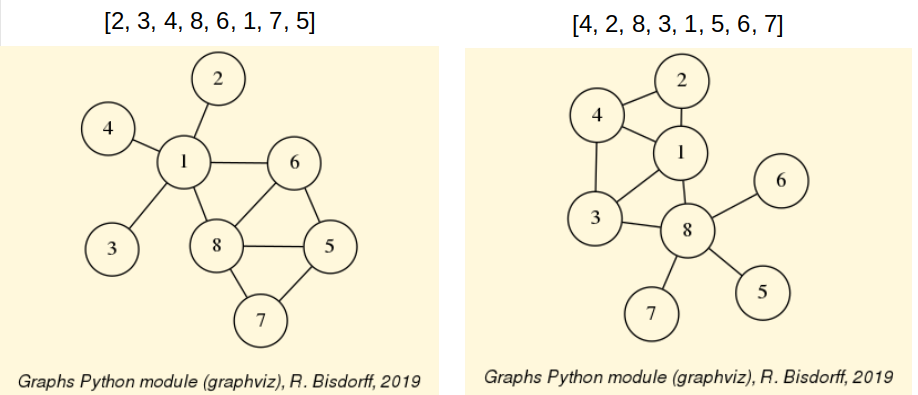

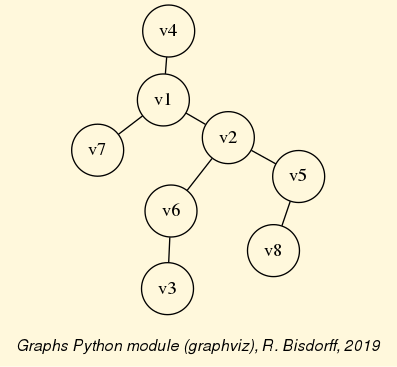

We may now extract both the symmetric as well as the asymmetric part of digraph dg with the help of two corresponding constructors (see Fig. 1.5).

1>>> from digraphs import AsymmetricPartialDigraph,

2... SymmetricPartialDigraph

3

4>>> asymDg = AsymmetricPartialDigraph(rdg)

5>>> asymDg.exportGraphViz()

6>>> symDg = SymmetricPartialDigraph(rdg)

7>>> symDg.exportGraphViz()

Fig. 1.5 Asymmetric and symmetric part of the tutorial random valuation digraph

Note

The constructor of the partial objects asymDg and symDg puts to the indeterminate characteristic value all not-asymmetric, respectively not-symmetric links between nodes (see Fig. 1.5).

Here below, for illustration the source code of the relation constructor of the AsymmetricPartialDigraph class.

1 def _constructRelation(self):

2 actions = self.actions

3 Min = self.valuationdomain['min']

4 Max = self.valuationdomain['max']

5 Med = self.valuationdomain['med']

6 relationIn = self.relation

7 relationOut = {}

8 for a in actions:

9 relationOut[a] = {}

10 for b in actions:

11 if a != b:

12 if relationIn[a][b] >= Med and relationIn[b][a] <= Med:

13 relationOut[a][b] = relationIn[a][b]

14 elif relationIn[a][b] <= Med and relationIn[b][a] >= Med:

15 relationOut[a][b] = relationIn[a][b]

16 else:

17 relationOut[a][b] = Med

18 else:

19 relationOut[a][b] = Med

20 return relationOut

1.2.2.4. Border and inner parts

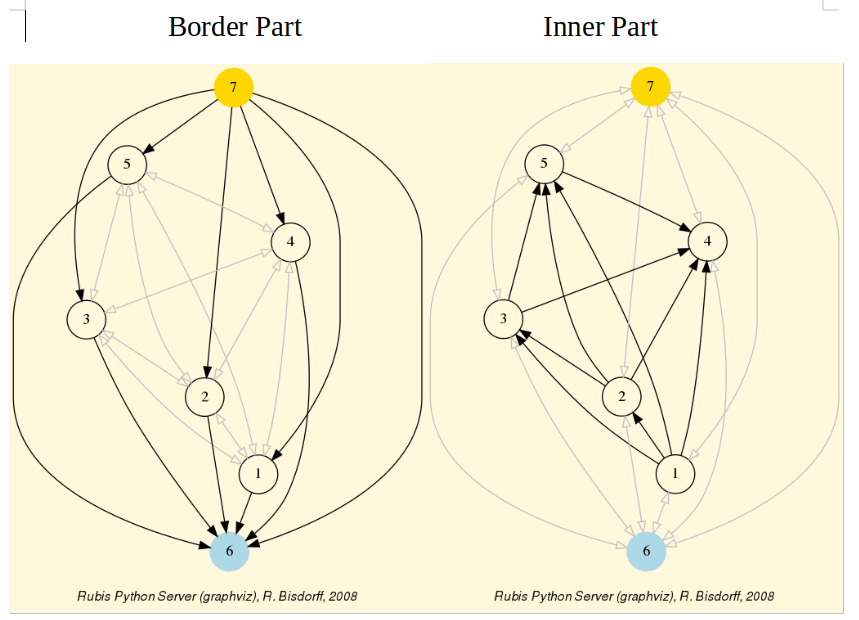

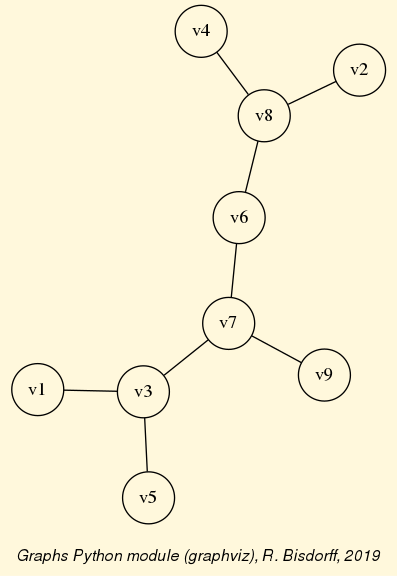

We may also extract the border -the part of a digraph induced by the union of its initial and terminal prekernels (see tutorial On computing digraph kernels)- as well as, the inner part -the complement of the border- with the help of two corresponding class constructors: GraphBorder and GraphInner (see Listing 1.6).

Let us illustrate these parts on a linear ordering obtained from the tutorial random valuation digraph rdg with the NetFlows ranking rule (see Listing 1.6 Line 2-3).

1>>> from digraphs import GraphBorder, GraphInner

2>>> from linearOrders import NetFlowsOrder

3>>> nf = NetFlowsOrder(rdg)

4>>> nf.netFlowsOrder

5 ['6', '4', '5', '3', '2', '1', '7']

6>>> bnf = GraphBorder(nf)

7>>> bnf.exportGraphViz(worstChoice=['6'],bestChoice=['7'])

8>>> inf = GraphInner(nf)

9>>> inf.exportGraphViz(worstChoice=['6'],bestChoice=['7'])

Fig. 1.6 Border and inner part of a linear order oriented by terminal and initial kernels

We may orient the graphviz drawings in Fig. 1.6 with the terminal node 6 (worstChoice parameter) and initial node 7 (bestChoice parameter), see Listing 1.6 Lines 7 and 9).

Note

The constructor of the partial digraphs bnf and inf (see Listing 1.6 Lines 3 and 6) puts to the indeterminate characteristic value all links not in the border, respectively not in the inner part (see Fig. 1.7).

Being much denser than a linear order, the actual inner part of our tutorial random valuation digraph dg is reduced to a single arc between nodes 3 and 4 (see Fig. 1.7).

Fig. 1.7 Border and inner part of the tutorial random valuation digraph rdg

Indeed, a complete digraph on the limit has no inner part (privacy!) at all, whereas empty and indeterminate digraphs admit both, an empty border and an empty inner part.

1.2.2.5. Fusion by epistemic disjunction

We may recover object rdg from both partial objects asymDg and symDg, or as well from the border bg and the inner part ig, with a bipolar fusion constructor, also called epistemic disjunction, available via the FusionDigraph class (see Listing 1.4 Lines 12- 21).

1>>> from digraphs import FusionDigraph

2>>> fusDg = FusionDigraph(asymDg,symDg,operator='o-max')

3>>> # fusDg = FusionDigraph(bg,ig,operator='o-max')

4>>> fusDg.showRelationTable()

5 * ---- Relation Table -----

6 r(xSy) | '1' '2' '3' '4' '5' '6' '7'

7 -------|------------------------------------------

8 '1' | 0.00 -0.48 0.70 0.86 0.30 0.38 0.44

9 '2' | -0.22 0.00 -0.38 0.50 0.80 -0.54 0.02

10 '3' | -0.42 0.08 0.00 0.70 -0.56 0.84 -1.00

11 '4' | 0.44 -0.40 -0.62 0.00 0.04 0.66 0.76

12 '5' | 0.32 -0.48 -0.46 0.64 0.00 -0.22 -0.52

13 '6' | -0.84 0.00 -0.40 -0.96 -0.18 0.00 -0.22

14 '7' | 0.88 0.72 0.82 0.52 -0.84 0.04 0.00

The epistemic fusion operator o-max (see Listing 1.7 Line 2) works as follows.

Let r and r’ characterise two bipolar-valued epistemic situations.

o-max(r, r’ ) = max(r, r’ ) when both r and r’ are more or less valid or indeterminate;

o-max(r, r’ ) = min(r, r’ ) when both r and r’ are more or less invalid or indeterminate;

o-max(r, r’ ) = indeterminate otherwise.

1.2.2.6. Dual, converse and codual digraphs

We may as readily compute the dual (negated relation [14]), the converse (transposed relation) and the codual (transposed and negated relation) of the digraph instance rdg.

1>>> from digraphs import DualDigraph, ConverseDigraph, CoDualDigraph

2>>> ddg = DualDigraph(rdg)

3>>> ddg.showRelationTable()

4 -r(xSy) | '1' '2' '3' '4' '5' '6' '7'

5 --------|------------------------------------------

6 '1 ' | 0.00 0.48 -0.70 -0.86 -0.30 -0.38 -0.44

7 '2' | 0.22 0.00 0.38 -0.50 0.80 0.54 -0.02

8 '3' | 0.42 0.08 0.00 -0.70 0.56 -0.84 1.00

9 '4' | -0.44 0.40 0.62 0.00 -0.04 -0.66 -0.76

10 '5' | -0.32 0.48 0.46 -0.64 0.00 0.22 0.52

11 '6' | 0.84 0.00 0.40 0.96 0.18 0.00 0.22

12 '7' | 0.88 -0.72 -0.82 -0.52 0.84 -0.04 0.00

13>>> cdg = ConverseDigraph(rdg)

14>>> cdg.showRelationTable()

15 * ---- Relation Table -----

16 r(ySx) | '1' '2' '3' '4' '5' '6' '7'

17 --------|------------------------------------------

18 '1' | 0.00 -0.22 -0.42 0.44 0.32 -0.84 0.88

19 '2' | -0.48 0.00 0.08 -0.40 -0.48 0.00 0.72

20 '3' | 0.70 -0.38 0.00 -0.62 -0.46 -0.40 0.82

21 '4' | 0.86 0.50 0.70 0.00 0.64 -0.96 0.52

22 '5' | 0.30 0.80 -0.56 0.04 0.00 -0.18 -0.84

23 '6' | 0.38 -0.54 0.84 0.66 -0.22 0.00 0.04

24 '7' | 0.44 0.02 -1.00 0.76 -0.52 -0.22 0.00

25>>> cddg = CoDualDigraph(rdg)

26>>> cddg.showRelationTable()

27 * ---- Relation Table -----

28 -r(ySx) | '1' '2' '3' '4' '5' '6' '7'

29 --------|------------------------------------------

30 '1' | 0.00 0.22 0.42 -0.44 -0.32 0.84 -0.88

31 '2' | 0.48 0.00 -0.08 0.40 0.48 0.00 -0.72

32 '3' | -0.70 0.38 0.00 0.62 0.46 0.40 -0.82

33 '4' | -0.86 -0.50 -0.70 0.00 -0.64 0.96 -0.52

34 '5' | -0.30 -0.80 0.56 -0.04 0.00 0.18 0.84

35 '6' | -0.38 0.54 -0.84 -0.66 0.22 0.00 -0.04

36 '7' | -0.44 -0.02 1.00 -0.76 0.52 0.22 0.00

Computing the dual, respectively the converse, may also be done with prefixing the __neg__ (-) or the __invert__ (~) operator. The codual of a Digraph object may, hence, as well be computed with a composition (in either order) of both operations.

1>>> ddg = -rdg # dual of rdg

2>>> cdg = ~rdg # converse of rdg

3>>> cddg = ~(-rdg) # = -(~rdg) codual of rdg

4>>> (-(~rdg)).showRelationTable()

5 * ---- Relation Table -----

6 -r(ySx) | '1' '2' '3' '4' '5' '6' '7'

7 --------|------------------------------------------

8 '1' | 0.00 0.22 0.42 -0.44 -0.32 0.84 -0.88

9 '2' | 0.48 0.00 -0.08 0.40 0.48 0.00 -0.72

10 '3' | -0.70 0.38 0.00 0.62 0.46 0.40 -0.82

11 '4' | -0.86 -0.50 -0.70 0.00 -0.64 0.96 -0.52

12 '5' | -0.30 -0.80 0.56 -0.04 0.00 0.18 0.84

13 '6' | -0.38 0.54 -0.84 -0.66 0.22 0.00 -0.04

14 '7' | -0.44 -0.02 1.00 -0.76 0.52 0.22 0.00

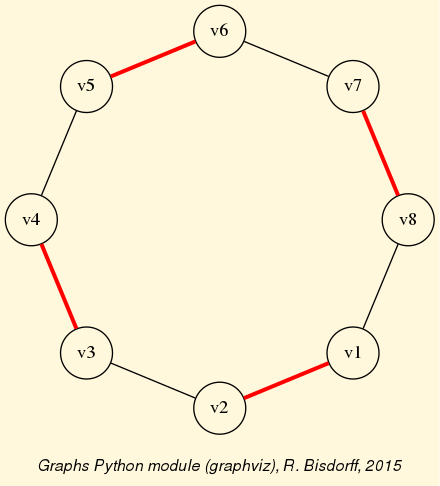

1.2.2.7. Symmetric and transitive closures

Symmetric and transitive closures, by default in-site constructors, are also available (see Fig. 1.8). Note that it is a good idea, before going ahead with these in-site operations, who irreversibly modify the original rdg object, to previously make a backup version of rdg. The simplest storage method, always provided by the generic save(), writes out in a named file the python content of the Digraph object in string representation.

1>>> rdg.save('tutRandValDigraph')

2>>> rdg.closeSymmetric(InSite=True)

3>>> rdg.closeTransitive(InSite=True)

4>>> rdg.exportGraphViz('strongComponents')

Fig. 1.8 Symmetric and transitive in-site closures

The closeSymmetric() method (see Listing 1.9 Line 2), of complexity  where n denotes the digraph’s order, changes, on the one hand, all single pairwise links it may detect into double links by operating a disjunction of the pairwise relations. On the other hand, the

where n denotes the digraph’s order, changes, on the one hand, all single pairwise links it may detect into double links by operating a disjunction of the pairwise relations. On the other hand, the closeTransitive() method (see Listing 1.9 Line 3), implements the Roy-Warshall transitive closure algorithm of complexity  . ([17])

. ([17])

Note

The same closeTransitive() method with a Reverse = True flag may be readily used for eliminating all transitive arcs from a transitive digraph instance. We make usage of this feature when drawing Hasse diagrams of TransitiveDigraph objects.

1.2.2.8. Strong components

As the original digraph rdg was connected (see above the result of the showShort() command), both the symmetric and the transitive closures operated together, will necessarily produce a single strong component, i.e. a complete digraph. We may sometimes wish to collapse all strong components in a given digraph and construct the so collapsed digraph. Using the StrongComponentsCollapsedDigraph constructor here will render a single hyper-node gathering all the original nodes (see Line 7 below).

1>>> from digraphs import StrongComponentsCollapsedDigraph

2>>> sc = StrongComponentsCollapsedDigraph(dg)

3>>> sc.showAll()

4 *----- show detail -----*

5 Digraph : tutRandValDigraph_Scc

6 *---- Actions ----*

7 ['_7_1_2_6_5_3_4_']

8 * ---- Relation Table -----

9 S | 'Scc_1'

10 -------|---------

11 'Scc_1' | 0.00

12 short content

13 Scc_1 _7_1_2_6_5_3_4_

14 Neighborhoods:

15 Gamma :

16 'frozenset({'7', '1', '2', '6', '5', '3', '4'})': in => set(), out => set()

17 Not Gamma :

18 'frozenset({'7', '1', '2', '6', '5', '3', '4'})': in => set(), out => set()

1.2.2.9. CSV storage

Sometimes it is required to exchange the graph valuation data in CSV format with a statistical package like R. For this purpose it is possible to export the digraph data into a CSV file. The valuation domain is hereby normalized by default to the range [-1,1] and the diagonal put by default to the minimal value -1.

1>>> rdg = Digraph('tutRandValDigraph')

2>>> rdg.saveCSV('tutRandValDigraph')

3 # content of file tutRandValDigraph.csv

4 "d","1","2","3","4","5","6","7"

5 "1",-1.0,0.48,-0.7,-0.86,-0.3,-0.38,-0.44

6 "2",0.22,-1.0,0.38,-0.5,-0.8,0.54,-0.02

7 "3",0.42,-0.08,-1.0,-0.7,0.56,-0.84,1.0

8 "4",-0.44,0.4,0.62,-1.0,-0.04,-0.66,-0.76

9 "5",-0.32,0.48,0.46,-0.64,-1.0,0.22,0.52

10 "6",0.84,0.0,0.4,0.96,0.18,-1.0,0.22

11 "7",-0.88,-0.72,-0.82,-0.52,0.84,-0.04,-1.0

It is possible to reload a Digraph instance from its previously saved CSV file content.

1>>> from digraphs import CSVDigraph

2>>> rdgcsv = CSVDigraph('tutRandValDigraph')

3>>> rdgcsv.showRelationTable(ReflexiveTerms=False)

4 * ---- Relation Table -----

5 r(xSy) | '1' '2' '3' '4' '5' '6' '7'

6 -------|------------------------------------------------------------

7 '1' | - -0.48 0.70 0.86 0.30 0.38 0.44

8 '2' | -0.22 - -0.38 0.50 0.80 -0.54 0.02

9 '3' | -0.42 0.08 - 0.70 -0.56 0.84 -1.00

10 '4' | 0.44 -0.40 -0.62 - 0.04 0.66 0.76

11 '5' | 0.32 -0.48 -0.46 0.64 - -0.22 -0.52

12 '6' | -0.84 0.00 -0.40 -0.96 -0.18 - -0.22

13 '7' | 0.88 0.72 0.82 0.52 -0.84 0.04 -

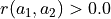

It is as well possible to show a colored version of the valued relation table in a system browser window tab (see Fig. 1.9).

1>>> rdgcsv.showHTMLRelationTable(tableTitle="Tutorial random digraph")

Fig. 1.9 The valued relation table shown in a browser window

Positive arcs are shown in green and negative arcs in red. Indeterminate -zero-valued- links, like the reflexive diagonal ones or the link between node 6 and node 2, are shown in gray.

1.2.2.10. Complete, empty and indeterminate digraphs

Let us finally mention some special universal classes of digraphs that are readily available in the digraphs module, like the CompleteDigraph, the EmptyDigraph and the IndeterminateDigraph classes, which put all characteristic values respectively to the maximum, the minimum or the median indeterminate characteristic value.

1>>> from digraphs import CompleteDigraph,EmptyDigraph,IndeterminateDigraph

2>>> e = EmptyDigraph(order=5)

3>>> e.showRelationTable()

4 * ---- Relation Table -----

5 S | '1' '2' '3' '4' '5'

6 ---- -|-----------------------------------

7 '1' | -1.00 -1.00 -1.00 -1.00 -1.00

8 '2' | -1.00 -1.00 -1.00 -1.00 -1.00

9 '3' | -1.00 -1.00 -1.00 -1.00 -1.00

10 '4' | -1.00 -1.00 -1.00 -1.00 -1.00

11 '5' | -1.00 -1.00 -1.00 -1.00 -1.00

12 >>> e.showNeighborhoods()

13 Neighborhoods:

14 Gamma :

15 '1': in => set(), out => set()

16 '2': in => set(), out => set()

17 '5': in => set(), out => set()

18 '3': in => set(), out => set()

19 '4': in => set(), out => set()

20 Not Gamma :

21 '1': in => {'2', '4', '5', '3'}, out => {'2', '4', '5', '3'}

22 '2': in => {'1', '4', '5', '3'}, out => {'1', '4', '5', '3'}

23 '5': in => {'1', '2', '4', '3'}, out => {'1', '2', '4', '3'}

24 '3': in => {'1', '2', '4', '5'}, out => {'1', '2', '4', '5'}

25 '4': in => {'1', '2', '5', '3'}, out => {'1', '2', '5', '3'}

26>>> i = IndeterminateDigraph()

27 * ---- Relation Table -----

28 S | '1' '2' '3' '4' '5'

29 ------|------------------------------

30 '1' | 0.00 0.00 0.00 0.00 0.00

31 '2' | 0.00 0.00 0.00 0.00 0.00

32 '3' | 0.00 0.00 0.00 0.00 0.00

33 '4' | 0.00 0.00 0.00 0.00 0.00

34 '5' | 0.00 0.00 0.00 0.00 0.00

35>>> i.showNeighborhoods()

36 Neighborhoods:

37 Gamma :

38 '1': in => set(), out => set()

39 '2': in => set(), out => set()

40 '5': in => set(), out => set()

41 '3': in => set(), out => set()

42 '4': in => set(), out => set()

43 Not Gamma :

44 '1': in => set(), out => set()

45 '2': in => set(), out => set()

46 '5': in => set(), out => set()

47 '3': in => set(), out => set()

48 '4': in => set(), out => set()

Note

Mind the subtle difference between the neighborhoods of an empty and the neighborhoods of an indeterminate digraph instance. In the first kind, the neighborhoods are known to be completely empty (see Listing 1.10 Lines 20-25) whereas, in the latter, nothing is known about the actual neighborhoods of the nodes (see Listing 1.10 Lines 43-48). These two cases illustrate why in the case of bipolar-valued digraphs, we may need both a gamma and a notGamma attribute.

Back to Content Table

1.2.3. Working with the outrankingDigraphs module

“The rule for the combination of independent concurrent arguments takes a very simple form when expressed in terms of the intensity of belief … It is this: Take the sum of all the feelings of belief which would be produced separately by all the arguments pro, subtract from that the similar sum for arguments con, and the remainder is the feeling of belief which ought to have the whole. This is a proceeding which men often resort to, under the name of balancing reasons.”

—C.S. Peirce, The probability of induction (1878)

See also

The technical documentation of the outrankingDigraphs module.

1.2.3.1. Outranking digraph model

In this Digraph3 module, the BipolarOutrankingDigraph class from the outrankingDigraphs module provides our standard outranking digraph constructor. Such an instance represents a hybrid object of both, the PerformanceTableau type and the OutrankingDigraph type. A given object consists hence in:

an ordered dictionary of decision actions describing the potential decision actions or alternatives with ‘name’ and ‘comment’ attributes,

a possibly empty ordered dictionary of decision objectives with ‘name’ and ‘comment attributes, describing the multiple preference dimensions involved in the decision problem,

a dictionary of performance criteria describing preferentially independent and non-redundant decimal-valued functions used for measuring the performance of each potential decision action with respect to a decision objective,

a double dictionary evaluation gathering performance grades for each decision action or alternative on each criterion function.

the digraph valuationdomain, a dictionary with three entries: the minimum (-1.0, certainly outranked), the median (0.0, indeterminate) and the maximum characteristic value (+1.0, certainly outranking),

the outranking relation : a double dictionary defined on the Cartesian product of the set of decision alternatives capturing the credibility of the pairwise outranking situation computed on the basis of the performance differences observed between couples of decision alternatives on the given family of criteria functions.

Let us construct, for instance, a random bipolar-valued outranking digraph with seven decision actions denotes a1, a2, …, a7. We need therefore to first generate a corresponding random performance tableaux (see below).

1>>> from outrankingDigraphs import *

2>>> pt = RandomPerformanceTableau(numberOfActions=7,

3... seed=100)

4

5>>> pt

6*------- PerformanceTableau instance description ------*

7 Instance class : RandomPerformanceTableau

8 Seed : 100

9 Instance name : randomperftab

10 # Actions : 7

11 # Criteria : 7

12 NaN proportion (%) : 6.1

13>>> pt.showActions()

14 *----- show digraphs actions --------------*

15 key: a1

16 name: action #1

17 comment: RandomPerformanceTableau() generated.

18 key: a2

19 name: action #2

20 comment: RandomPerformanceTableau() generated.

21 ...

22 ...

23 key: a7

24 name: action #7

25 comment: RandomPerformanceTableau() generated.

In this example we consider furthermore a family of seven equisignificant cardinal criteria functions g1, g2, …, g7, measuring the performance of each alternative on a rational scale from 0.0 (worst) to 100.00 (best). In order to capture the grading procedure’s potential uncertainty and imprecision, each criterion function g1 to g7 admits three performance discrimination thresholds of 2.5, 5.0 and 80 pts for warranting respectively any indifference, preference or considerable performance difference situation.

1>>> pt.showCriteria()

2 *---- criteria -----*

3 g1 'RandomPerformanceTableau() instance'

4 Scale = [0.0, 100.0]

5 Weight = 1.0

6 Threshold ind : 2.50 + 0.00x ; percentile: 4.76

7 Threshold pref : 5.00 + 0.00x ; percentile: 9.52

8 Threshold veto : 80.00 + 0.00x ; percentile: 95.24

9 g2 'RandomPerformanceTableau() instance'

10 Scale = [0.0, 100.0]

11 Weight = 1.0

12 Threshold ind : 2.50 + 0.00x ; percentile: 6.67

13 Threshold pref : 5.00 + 0.00x ; percentile: 6.67

14 Threshold veto : 80.00 + 0.00x ; percentile: 100.00

15 ...

16 ...

17 g7 'RandomPerformanceTableau() instance'

18 Scale = [0.0, 100.0]

19 Weight = 1.0

20 Threshold ind : 2.50 + 0.00x ; percentile: 0.00

21 Threshold pref : 5.00 + 0.00x ; percentile: 4.76

22 Threshold veto : 80.00 + 0.00x ; percentile: 100.00

On criteria function g1 (see Lines 6-8 above) we observe, for instance, about 5% of indifference, about 90% of preference and about 5% of considerable performance difference situations. The individual performance evaluation of all decision alternative on each criterion are gathered in a performance tableau.

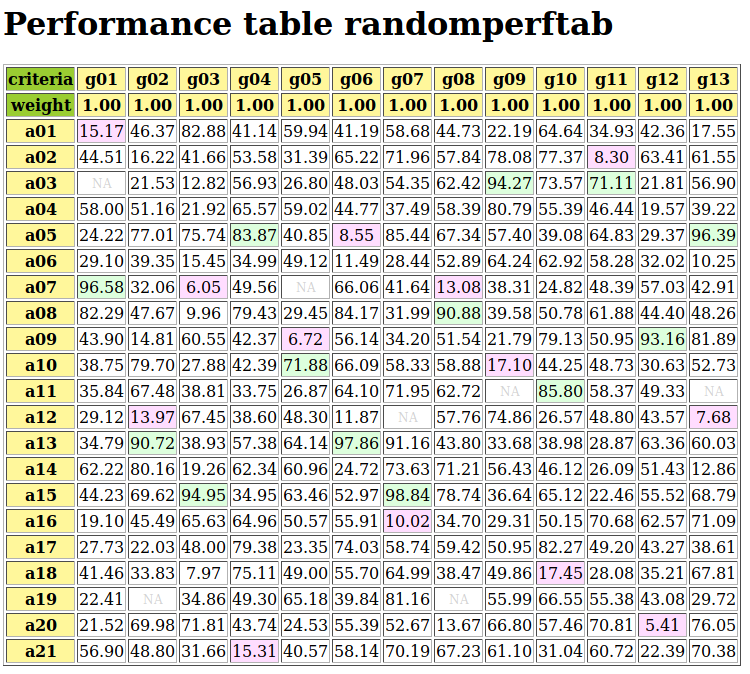

1>>> pt.showPerformanceTableau(Transposed=True,ndigits=1)

2 *---- performance tableau -----*

3 criteria | weights | 'a1' 'a2' 'a3' 'a4' 'a5' 'a6' 'a7'

4 ---------|----------|-----------------------------------------

5 'g1' | 1 | 15.2 44.5 57.9 58.0 24.2 29.1 96.6

6 'g2' | 1 | 82.3 43.9 NA 35.8 29.1 34.8 62.2

7 'g3' | 1 | 44.2 19.1 27.7 41.5 22.4 21.5 56.9

8 'g4' | 1 | 46.4 16.2 21.5 51.2 77.0 39.4 32.1

9 'g5' | 1 | 47.7 14.8 79.7 67.5 NA 90.7 80.2

10 'g6' | 1 | 69.6 45.5 22.0 33.8 31.8 NA 48.8

11 'g7' | 1 | 82.9 41.7 12.8 21.9 75.7 15.4 6.0

It is noteworthy to mention the three missing data (NA) cases: action a3 is missing, for instance, a grade on criterion g2 (see Line 6 above).

1.2.3.2. The bipolar-valued outranking digraph

Given the previous random performance tableau pt, the BipolarOutrankingDigraph constructor computes the corresponding bipolar-valued outranking digraph.

1>>> odg = BipolarOutrankingDigraph(pt)

2>>> odg

3 *------- Object instance description ------*

4 Instance class : BipolarOutrankingDigraph

5 Instance name : rel_randomperftab

6 # Actions : 7

7 # Criteria : 7

8 Size : 20

9 Determinateness (%) : 63.27

10 Valuation domain : [-1.00;1.00]

11 Attributes : [

12 'name', 'actions',

13 'criteria', 'evaluation', 'NA',

14 'valuationdomain', 'relation',

15 'order', 'gamma', 'notGamma', ...

16 ]

The resulting digraph contains 20 positive (valid) outranking realtions. And, the mean majority criteria significance support of all the pairwise outranking situations is 63.3% (see Listing 1.11 Lines 8-9). We may inspect the complete [-1.0,+1.0]-valued adjacency table as follows.

1>>> odg.showRelationTable()

2 * ---- Relation Table -----

3 r(x,y)| 'a1' 'a2' 'a3' 'a4' 'a5' 'a6' 'a7'

4 ------|-------------------------------------------------

5 'a1' | +1.00 +0.71 +0.29 +0.29 +0.29 +0.29 +0.00

6 'a2' | -0.71 +1.00 -0.29 -0.14 +0.14 +0.29 -0.57

7 'a3' | -0.29 +0.29 +1.00 -0.29 -0.14 +0.00 -0.29

8 'a4' | +0.00 +0.14 +0.57 +1.00 +0.29 +0.57 -0.43

9 'a5' | -0.29 +0.00 +0.14 +0.00 +1.00 +0.29 -0.29

10 'a6' | -0.29 +0.00 +0.14 -0.29 +0.14 +1.00 +0.00

11 'a7' | +0.00 +0.71 +0.57 +0.43 +0.29 +0.00 +1.00

12 Valuation domain: [-1.0; 1.0]

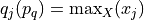

Considering the given performance tableau pt, the BipolarOutrankingDigraph class constructor computes the characteristic value  of a pairwise outranking relation “

of a pairwise outranking relation “ ” (see [BIS-2013], [ADT-L7]) in a default normalised valuation domain [-1.0,+1.0] with the median value 0.0 acting as indeterminate characteristic value. The semantics of

” (see [BIS-2013], [ADT-L7]) in a default normalised valuation domain [-1.0,+1.0] with the median value 0.0 acting as indeterminate characteristic value. The semantics of  are the following.

are the following.

When

, it is more True than False that x outranks y, i.e. alternative x is at least as well performing than alternative y on a weighted majority of criteria and there is no considerable negative performance difference observed in disfavour of x,

When

, it is more False than True that x outranks y, i.e. alternative x is not at least as well performing on a weighted majority of criteria than alternative y and there is no considerable positive performance difference observed in favour of x,

When

, it is indeterminate whether x outranks y or not.

1.2.3.3. Pairwise comparisons

From above given semantics, we may consider (see Line 5 above) that a1 outranks a2 ( ), but not a7 (

), but not a7 ( ). In order to comprehend the characteristic values shown in the relation table above, we may furthermore inspect the details of the pairwise multiple criteria comparison between alternatives a1 and a2.

). In order to comprehend the characteristic values shown in the relation table above, we may furthermore inspect the details of the pairwise multiple criteria comparison between alternatives a1 and a2.

1>>> odg.showPairwiseComparison('a1','a2')

2 *------------ pairwise comparison ----*

3 Comparing actions : (a1, a2)

4 crit. wght. g(x) g(y) diff | ind pref r()

5 ------------------------------- --------------------

6 g1 1.00 15.17 44.51 -29.34 | 2.50 5.00 -1.00

7 g2 1.00 82.29 43.90 +38.39 | 2.50 5.00 +1.00

8 g3 1.00 44.23 19.10 +25.13 | 2.50 5.00 +1.00

9 g4 1.00 46.37 16.22 +30.15 | 2.50 5.00 +1.00

10 g5 1.00 47.67 14.81 +32.86 | 2.50 5.00 +1.00

11 g6 1.00 69.62 45.49 +24.13 | 2.50 5.00 +1.00

12 g7 1.00 82.88 41.66 +41.22 | 2.50 5.00 +1.00

13 ----------------------------------------

14 Valuation in range: -7.00 to +7.00; r(x,y): +5/7 = +0.71

The outranking characteristic value  represents the majority margin resulting from the difference between the weights of the criteria in favor and the weights of the criteria in disfavor of the statement that alternative a1 is at least as well performing as alternative a2. No considerable performance difference being observed above, no veto or counter-veto situation is triggered in this pairwise comparison. Such a situation is, however, observed for instance when we pairwise compare the performances of alternatives a1 and a7.

represents the majority margin resulting from the difference between the weights of the criteria in favor and the weights of the criteria in disfavor of the statement that alternative a1 is at least as well performing as alternative a2. No considerable performance difference being observed above, no veto or counter-veto situation is triggered in this pairwise comparison. Such a situation is, however, observed for instance when we pairwise compare the performances of alternatives a1 and a7.

1>>> odg.showPairwiseComparison('a1','a7')

2 *------------ pairwise comparison ----*

3 Comparing actions : (a1, a7)

4 crit. wght. g(x) g(y) diff | ind pref r() | v veto

5 ------------------------------- ------------------ -----------

6 g1 1.00 15.17 96.58 -81.41 | 2.50 5.00 -1.00 | 80.00 -1.00

7 g2 1.00 82.29 62.22 +20.07 | 2.50 5.00 +1.00 |

8 g3 1.00 44.23 56.90 -12.67 | 2.50 5.00 -1.00 |

9 g4 1.00 46.37 32.06 +14.31 | 2.50 5.00 +1.00 |

10 g5 1.00 47.67 80.16 -32.49 | 2.50 5.00 -1.00 |

11 g6 1.00 69.62 48.80 +20.82 | 2.50 5.00 +1.00 |

12 g7 1.00 82.88 6.05 +76.83 | 2.50 5.00 +1.00 |

13 ----------------------------------------

14 Valuation in range: -7.00 to +7.00; r(x,y)= +1/7 => 0.0

This time, we observe a 57.1% majority of criteria significance [(1/7 + 1)/2 = 0.571] warranting an as well as performing situation. Yet, we also observe a considerable negative performance difference on criterion g1 (see first row in the relation table above). Both contradictory facts trigger eventually an indeterminate outranking situation [BIS-2013].

1.2.3.4. Recoding the digraph valuation

All outranking digraphs, being of root type Digraph, inherit the methods available under this latter class. The characteristic valuation domain of a digraph may, for instance, be recoded with the recodeValutaion() method below to the integer range [-7,+7], i.e. plus or minus the global significance of the family of criteria considered in this example instance.

1>>> odg.recodeValuation(-7,+7)

2>>> odg.valuationdomain['hasIntegerValuation'] = True

3>>> Digraph.showRelationTable(odg,ReflexiveTerms=False)

4 * ---- Relation Table -----

5 r(x,y) | 'a1' 'a2' 'a3' 'a4' 'a5' 'a6' 'a7'

6 ---------|------------------------------------------

7 'a1' | 0 5 2 2 2 2 0

8 'a2' | -5 0 -1 -1 1 2 -4

9 'a3' | -1 2 0 -1 -1 0 -1

10 'a4' | 0 1 4 0 2 4 -3

11 'a5' | -1 0 1 0 0 2 -1

12 'a6' | -1 0 1 -1 1 0 0

13 'a7' | 0 5 4 3 2 0 0

14 Valuation domain: [-7;+7]

Warning

Notice that the reflexive self comparison characteristic  is set above by default to the median indeterminate valuation value 0; the reflexive terms of binary relation being generally ignored in most of the Digraph3 resources.

is set above by default to the median indeterminate valuation value 0; the reflexive terms of binary relation being generally ignored in most of the Digraph3 resources.

1.2.3.5. The strict outranking digraph

From the theory (see [BIS-2013], [ADT-L7] ) we know that a bipolar-valued outranking digraph is weakly complete, i.e. if  then

then  . A bipolar-valued outranking relation verifies furthermore the coduality principle: the dual (strict negation - [14]) of the converse (inverse ~) of the outranking relation corresponds to its strict outranking part.

. A bipolar-valued outranking relation verifies furthermore the coduality principle: the dual (strict negation - [14]) of the converse (inverse ~) of the outranking relation corresponds to its strict outranking part.

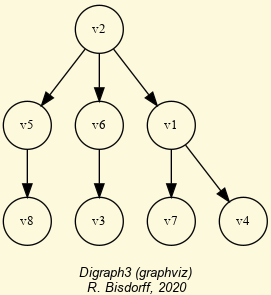

We may visualize the codual (strict) outranking digraph with a graphviz drawing [1].

1>>> cdodg = -(~odg)

2>>> cdodg.exportGraphViz('codualOdg')

3 *---- exporting a dot file for GraphViz tools ---------*

4 Exporting to codualOdg.dot

5 dot -Grankdir=BT -Tpng codualOdg.dot -o codualOdg.png

Fig. 1.10 Codual digraph

It becomes readily clear now from the picture above that both alternatives a1 and a7 are not outranked by any other alternatives. Hence, a1 and a7 appear as weak Condorcet winner and may be recommended as potential best decision actions in this illustrative preference modelling exercise.

Many more tools for exploiting bipolar-valued outranking digraphs are available in the Digraph3 resources (see the technical documentation of the outrankingDigraphs module and the perfTabs module).

In this tutorial we have constructed a random outranking digraph with the help of a random performance tableau instance. The next Digraph3 tutorial presents now different models of random performance tableaux illustrating various types of decision problems.

Back to Content Table

1.3. Evaluation and decision methods and tools

This is the methodological part of the tutorials.

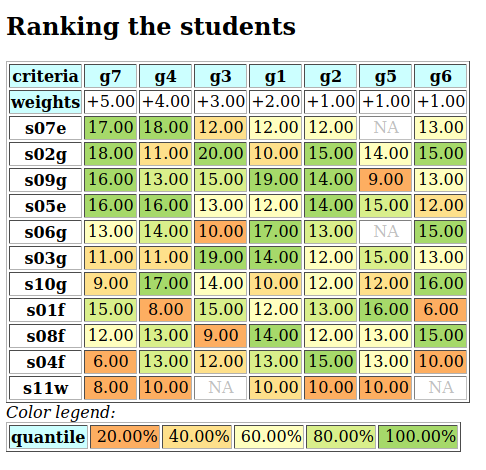

1.3.1. Computing a first choice recommendation

“The goal of our research was to design a resolution method … that is easy to put into practice, that requires as few and reliable hypotheses as possible, and that meets the needs [of the decision maker] … “

—B. Roy et al. (1966)

See also

Lecture 7 notes from the MICS Algorithmic Decision Theory course: [ADT-L7].

1.3.1.1. What site to choose ?

A SME, specialized in printing and copy services, has to move into new offices, and its CEO has gathered seven potential office sites (see Table 1.1).

ID |

Name |

Address |

Comment |

|---|---|---|---|

A |

Ave |

Avenue de la liberté |

High standing city center |

B |

Bon |

Bonnevoie |

Industrial environment |

C |

Ces |

Cessange |

Residential suburb location |

D |

Dom |

Dommeldange |

Industrial suburb environment |

E |

Bel |

Esch-Belval |

New and ambitious urbanization far from the city |

F |

Fen |

Fentange |

Out in the countryside |

G |

Gar |

Avenue de la Gare |

Main city shopping street |

Three decision objectives are guiding the CEO’s choice:

minimize the yearly costs induced by the moving,

maximize the future turnover of the SME,

maximize the new working conditions.

The decision consequences to take into account for evaluating the potential new office sites with respect to each of the three objectives are modelled by the following coherent family of criteria [26].

Objective |

ID |

Name |

Comment |

|---|---|---|---|

Yearly costs |

C |

Costs |

Annual rent, charges, and cleaning |

Future turnover |

St |

Standing |

Image and presentation |

Future turnover |

V |

Visibility |

Circulation of potential customers |

Future turnover |

Pr |

Proximity |

Distance from town center |

Working conditions |

W |

Space |

Working space |

Working conditions |

Cf |

Comfort |

Quality of office equipment |

Working conditions |

P |

Parking |

Available parking facilities |

The evaluation of the seven potential sites on each criterion are gathered in the following performance tableau.

Criterion |

weight |

A |

B |

C |

D |

E |

F |

G |

|---|---|---|---|---|---|---|---|---|

Costs |

45.0 |

35.0K€ |

17.8K€ |

6.7K€ |

14.1K€ |

34.8K€ |

18.6K€ |

12.0K€ |

Prox |

32.0 |

100 |

20 |

80 |

70 |

40 |

0 |

60 |

Visi |

26.0 |

60 |

80 |

70 |

50 |

60 |

0 |

100 |

Stan |

23.0 |

100 |

10 |

0 |

30 |

90 |

70 |

20 |

Wksp |

10.0 |

75 |

30 |

0 |

55 |

100 |

0 |

50 |

Wkcf |

6.0 |

0 |

100 |

10 |

30 |

60 |

80 |

50 |

Park |

3.0 |

90 |

30 |

100 |

90 |

70 |

0 |

80 |

Except the Costs criterion, all other criteria admit for grading a qualitative satisfaction scale from 0% (worst) to 100% (best). We may thus notice in Table 1.3 that site A is the most expensive, but also 100% satisfying the Proximity as well as the Standing criterion. Whereas the site C is the cheapest one; providing however no satisfaction at all on both the Standing and the Working Space criteria.

In Table 1.3 we may also see that the Costs criterion admits the highest significance (45.0), followed by the Future turnover criteria (32.0 + 26.0 + 23.0 = 81.0), The Working conditions criteria are the less significant (10.0 + 6.0, + 3.0 = 19.0). It follows that the CEO considers maximizing the future turnover the most important objective (81.0), followed by the minizing yearly Costs objective (45.0), and less important, the maximizing working conditions objective (19.0).

Concerning yearly costs, we suppose that the CEO is indifferent up to a performance difference of 1000€, and he actually prefers a site if there is at least a positive difference of 2500€. The grades observed on the six qualitative criteria (measured in percentages of satisfaction) are very subjective and rather imprecise. The CEO is hence indifferent up to a satisfaction difference of 10%, and he claims a significant preference when the satisfaction difference is at least of 20%. Furthermore, a satisfaction difference of 80% represents for him a considerably large performance difference, triggering a veto situation the case given (see [BIS-2013]).

In view of Table 1.3, what is now the office site we may recommend to the CEO as best choice ?

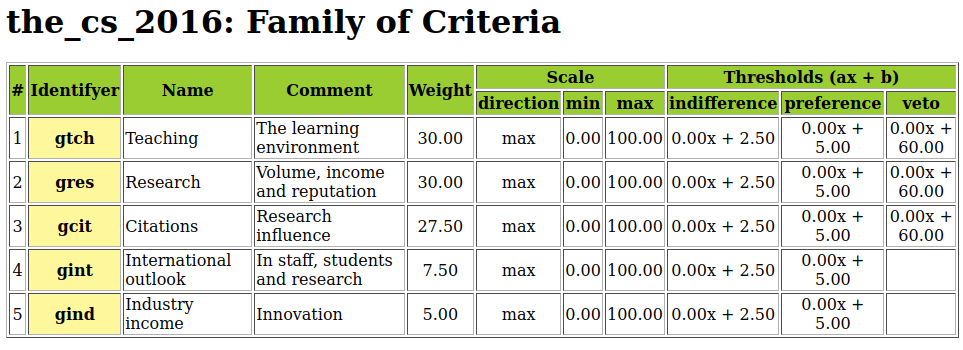

1.3.1.2. The performance tableau

A Python encoded performance tableau is available for downloading here officeChoice.py.

We may inspect the performance tableau data with the computing resources provided by the perfTabs module.

1>>> from perfTabs import PerformanceTableau

2>>> t = PerformanceTableau('officeChoice')

3>>> t

4 *------- PerformanceTableau instance description ------*

5 Instance class : PerformanceTableau

6 Instance name : officeChoice

7 # Actions : 7

8 # Objectives : 3

9 # Criteria : 7

10 NaN proportion (%) : 0.0

11 Attributes : ['name', 'actions', 'objectives',

12 'criteria', 'weightPreorder',

13 'NA', 'evaluation']

14>>> t.showPerformanceTableau()

15 *---- performance tableau -----*

16 Criteria | 'C' 'Cf' 'P' 'Pr' 'St' 'V' 'W'

17 Weights | 45.00 6.00 3.00 32.00 23.00 26.00 10.00

18 ---------|---------------------------------------------------------

19 'Ave' | -35000.00 0.00 90.00 100.00 100.00 60.00 75.00

20 'Bon' | -17800.00 100.00 30.00 20.00 10.00 80.00 30.00

21 'Ces' | -6700.00 10.00 100.00 80.00 0.00 70.00 0.00

22 'Dom' | -14100.00 30.00 90.00 70.00 30.00 50.00 55.00

23 'Bel' | -34800.00 60.00 70.00 40.00 90.00 60.00 100.00

24 'Fen' | -18600.00 80.00 0.00 0.00 70.00 0.00 0.00

25 'Gar' | -12000.00 50.00 80.00 60.00 20.00 100.00 50.00

We thus recover all the input data. To measure the actual preference discrimination we observe on each criterion, we may use the showCriteria() method.

1>>> t.showCriteria(IntegerWeights=True)

2 *---- criteria -----*

3 C 'Costs'

4 Scale = (Decimal('0.00'), Decimal('50000.00'))

5 Weight = 45

6 Threshold ind : 1000.00 + 0.00x ; percentile: 9.5

7 Threshold pref : 2500.00 + 0.00x ; percentile: 14.3

8 Cf 'Comfort'

9 Scale = (Decimal('0.00'), Decimal('100.00'))

10 Weight = 6

11 Threshold ind : 10.00 + 0.00x ; percentile: 9.5

12 Threshold pref : 20.00 + 0.00x ; percentile: 28.6

13 Threshold veto : 80.00 + 0.00x ; percentile: 90.5

14 ...

On the Costs criterion, 9.5% of the performance differences are considered insignificant and 14.3% below the preference discrimination threshold (lines 6-7). On the qualitative Comfort criterion, we observe again 9.5% of insignificant performance differences (line 11). Due to the imprecision in the subjective grading, we notice here 28.6% of performance differences below the preference discrimination threshold (Line 12). Furthermore, 100.0 - 90.5 = 9.5% of the performance differences are judged considerably large (Line 13); 80% and more of satisfaction differences triggering in fact a veto situation. Same information is available for all the other criteria.

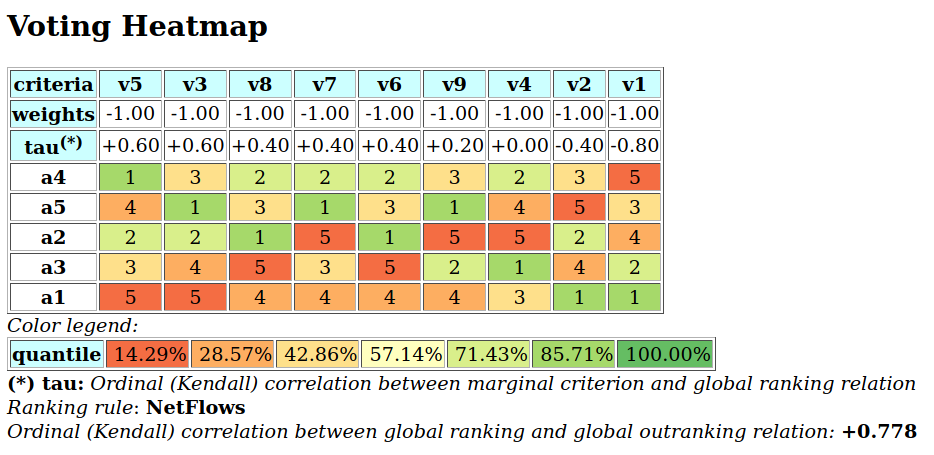

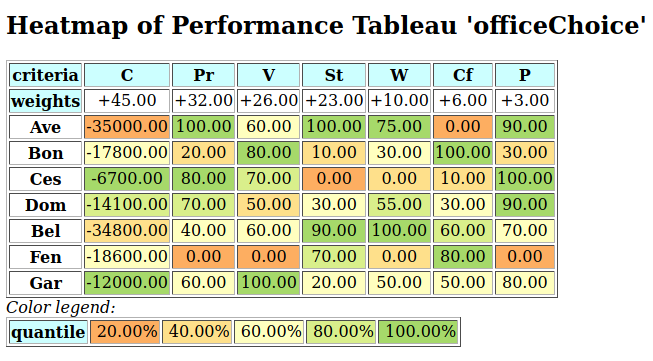

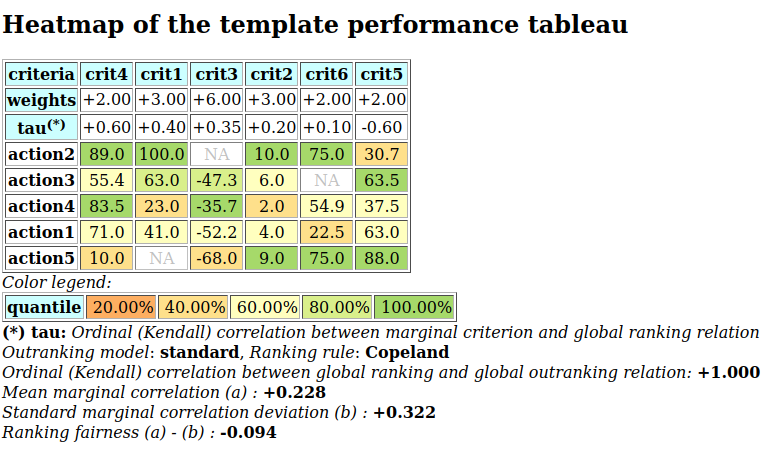

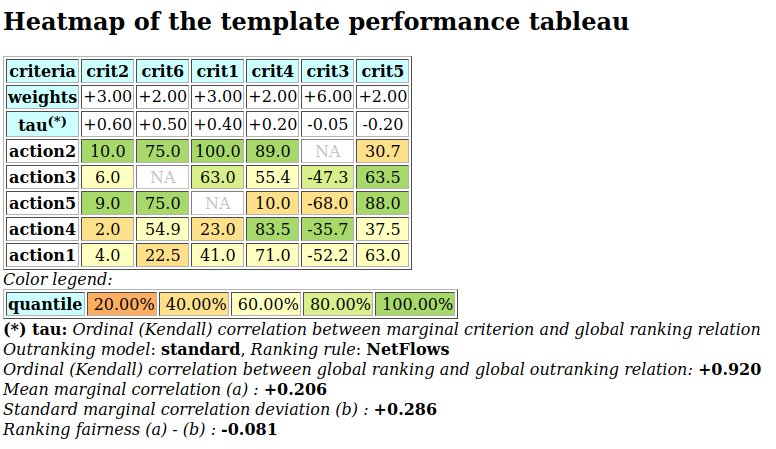

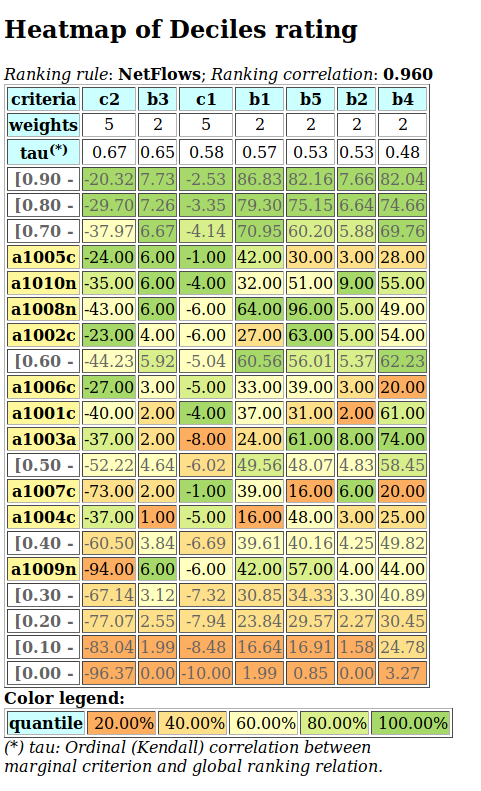

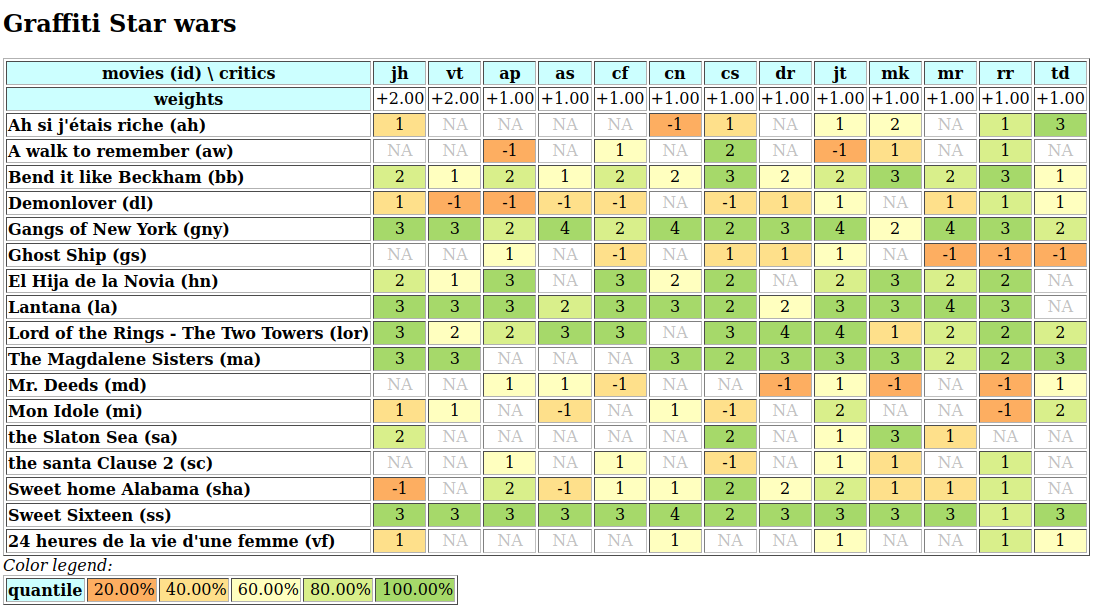

A colorful comparison of all the performances is shown on Fig. 1.11 by the heatmap statistics, illustrating the respective quantile class of each performance. As the set of potential alternatives is tiny, we choose here a classification into performance quintiles.

>>> t.showHTMLPerformanceHeatmap(colorLevels=5,

... rankingRule=None)

Fig. 1.11 Unranked heatmap of the office choice performance tableau

Site Ave shows extreme and contradictory performances: highest Costs and no Working Comfort on one hand, and total satisfaction with respect to Standing, Proximity and Parking facilities on the other hand. Similar, but opposite, situation is given for site Ces: unsatisfactory Working Space, no Standing and no Working Comfort on the one hand, and lowest Costs, best Proximity and Parking facilities on the other hand. Contrary to these contradictory alternatives, we observe two appealing compromise decision alternatives: sites Dom and Gar. Finally, site Fen is clearly the less satisfactory alternative of all.

1.3.1.3. The outranking digraph

To help now the CEO choosing the best site, we are going to compute pairwise outrankings (see [BIS-2013]) on the set of potential sites. For two sites x and y, the situation “x outranks y”, denoted (x S y), is given if there is:

a significant majority of criteria concordantly supporting that site x is at least as satisfactory as site y, and

no considerable counter-performance observed on any discordant criterion.

The credibility of each pairwise outranking situation (see [BIS-2013]), denoted r(x S y), is measured in a bipolar significance valuation [-1.00, 1.00], where positive terms r(x S y) > 0.0 indicate a validated, and negative terms r(x S y) < 0.0 indicate a non-validated outrankings; whereas the median value r(x S y) = 0.0 represents an indeterminate situation (see [BIS-2004a]).

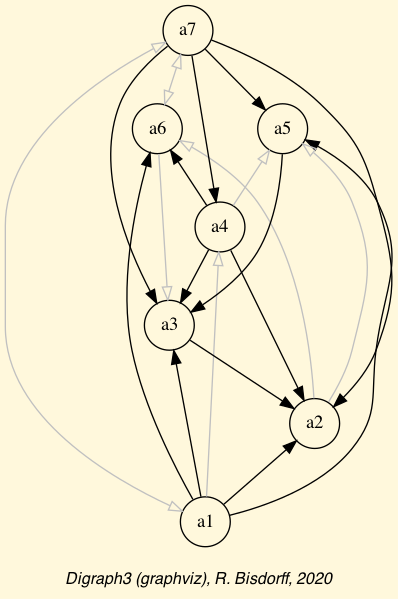

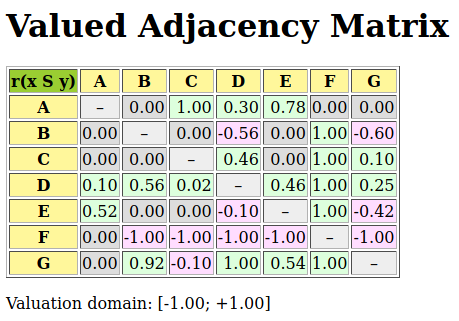

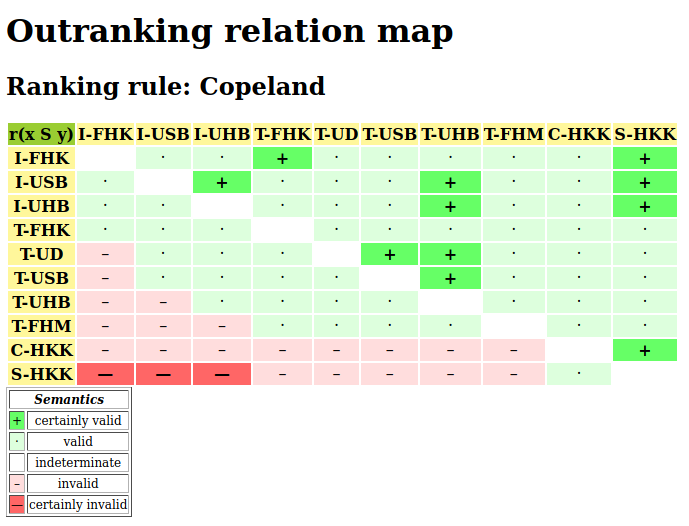

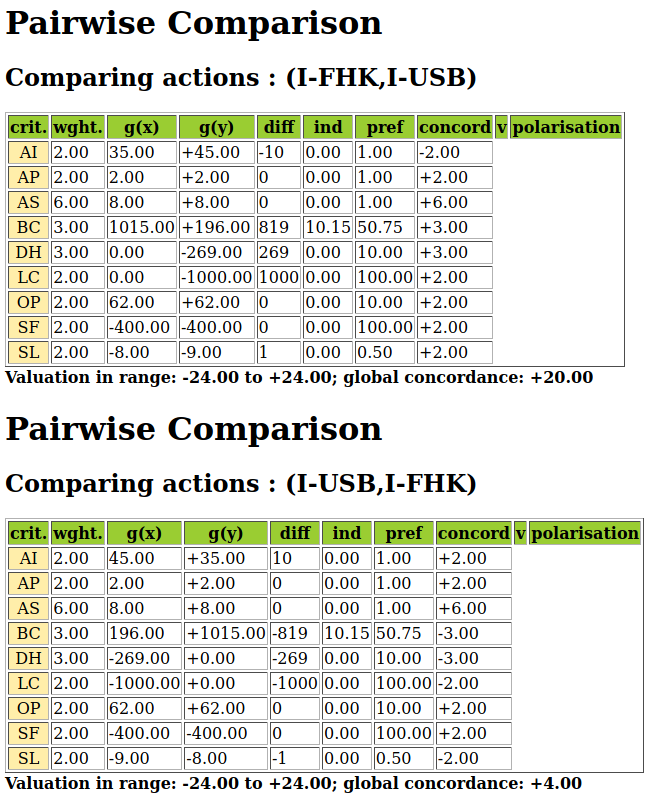

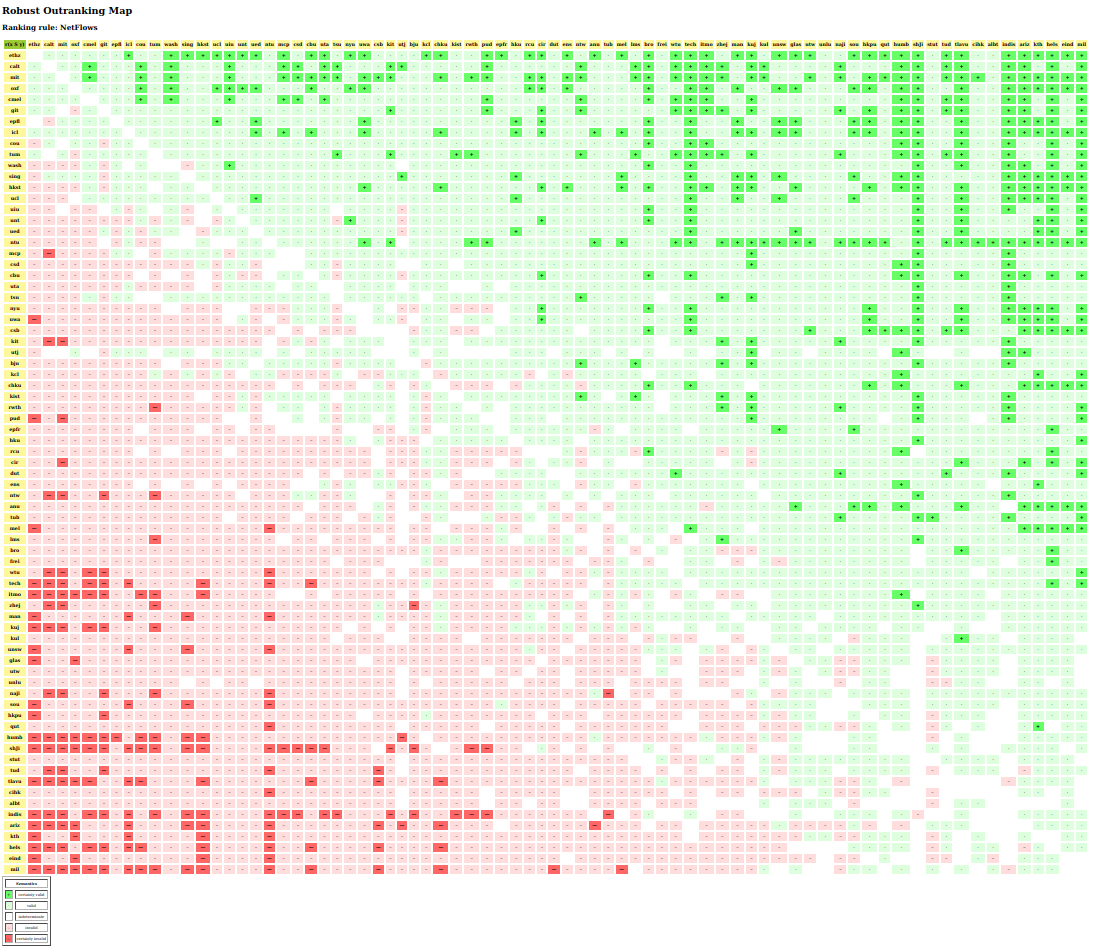

For computing such a bipolar-valued outranking digraph from the given performance tableau t, we use the BipolarOutrankingDigraph constructor from the outrankingDigraphs module. The showHTMLRelationTable method shows here the resulting bipolar-valued adjacency matrix in a system browser window (see Fig. 1.12).

1>>> from outrankingDigraphs import BipolarOutrankingDigraph

2>>> g = BipolarOutrankingDigraph(t)

3>>> g.showHTMLRelationTable()

Fig. 1.12 The office choice outranking digraph

In Fig. 1.12 we may notice that Alternative D is positively outranking all other potential office sites (a Condorcet winner). Yet, alternatives A (the most expensive) and C (the cheapest) are not outranked by any other site; they are in fact weak Condorcet winners.

1>>> g.computeCondorcetWinners()

2 ['D']

3>>> g.computeWeakCondorcetWinners()

4 ['A', 'C', 'D']

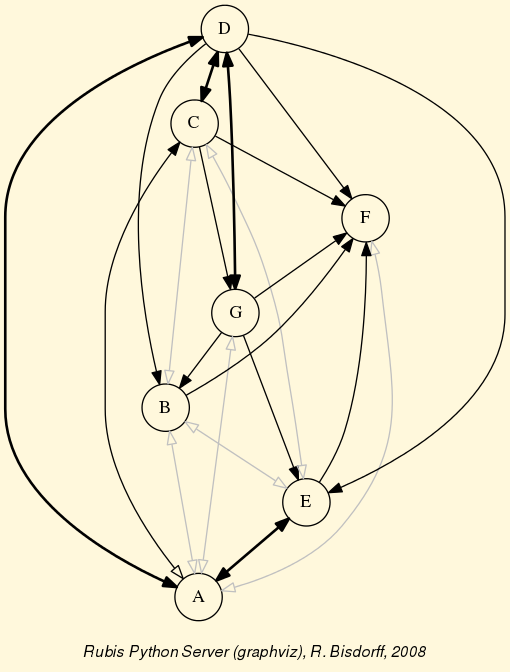

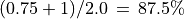

We may get even more insight in the apparent outranking situations when looking at the Condorcet digraph (see Fig. 1.13).

1>>> g.exportGraphViz('officeChoice')

2 *---- exporting a dot file for GraphViz tools ---------*

3 Exporting to officeChoice.dot

4 dot -Grankdir=BT -Tpng officeChoice.dot -o officeChoice.png

Fig. 1.13 The office choice outranking digraph

One may check that the outranking digraph g does not admit in fact any cyclic strict preference situation.

1>>> g.computeChordlessCircuits()

2 []

3>>> g.showChordlessCircuits()

4 No circuits observed in this digraph.

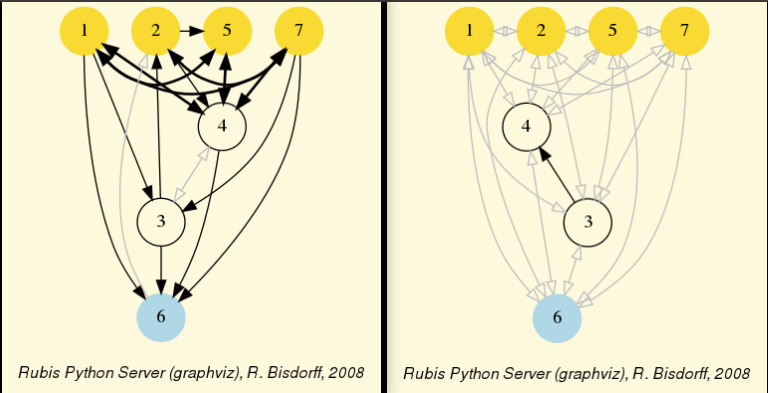

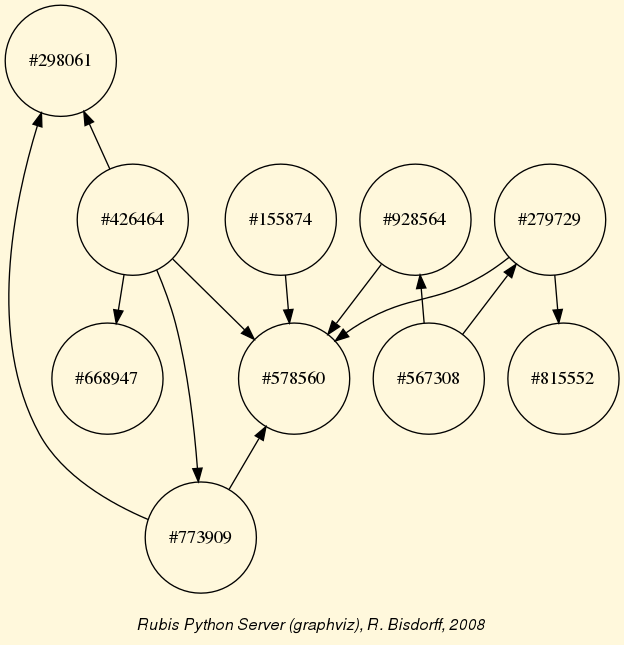

1.3.1.4. The Rubis best choice recommendation

Following the Rubis outranking method (see [BIS-2008]), potential first choice recommendations are determined by the outranking prekernels –weakly independent and strictly outranking choices– of the outranking digraph (see the tutorial on computing digraph kernels). The case given, we previously need to break open all chordless odd circuits at their weakest link.

1>>> from digraphs import BrokenCocsDigraph

2>>> bcg = BrokenCocsDigraph(g)

3>>> bcg.brokenLinks

4 set()

As we observe indeed no such chordless circuits here, we may directly compute the prekernels of the outranking digraph g.

1>>> g.showPreKernels()

2 *--- Computing preKernels ---*

3 Dominant preKernels :

4 ['D']

5 independence : 1.0

6 dominance : 0.02

7 absorbency : -1.0

8 covering : 1.000

9 ['B', 'E', 'C']

10 independence : 0.00

11 dominance : 0.10

12 absorbency : -1.0

13 covering : 0.500

14 ['A', 'G']

15 independence : 0.00

16 dominance : 0.78

17 absorbency : 0.00

18 covering : 0.700

19 Absorbent preKernels :

20 ['F', 'A']

21 independence : 0.00

22 dominance : 0.00

23 absorbency : 1.0

24 covering : 0.700

25 *----- statistics -----

26 graph name: rel_officeChoice.xml

27 number of solutions

28 dominant kernels : 3

29 absorbent kernels: 1

30 cardinality frequency distributions

31 cardinality : [0, 1, 2, 3, 4, 5, 6, 7]

32 dominant kernel : [0, 1, 1, 1, 0, 0, 0, 0]

33 absorbent kernel: [0, 0, 1, 0, 0, 0, 0, 0]

34 Execution time : 0.00018 sec.

35 Results in sets: dompreKernels and abspreKernels.

We notice in Listing 1.12 three potential first choice recommendations: the Condorcet winner D (Line 4), the triplet B, C and E (Line 9), and finally the pair A and G (Line 14). The best choice recommendation is now given by the most determined prekernel; the one supported by the most significant criteria coalition. This result is shown with the showBestChoiceRecommendation() method. Notice that this method actually works by default on the broken chords digraph bcg.

1>>> g.showBestChoiceRecommendation(CoDual=False)

2 *****************************************

3 Rubis best choice recommendation(s) (BCR)

4 (in decreasing order of determinateness)

5 Credibility domain: [-1.00,1.00]

6 === >> potential first choice(s)

7 * choice : ['D']

8 independence : 1.00

9 dominance : 0.02

10 absorbency : -1.00

11 covering (%) : 100.00

12 determinateness (%) : 51.03

13 - most credible action(s) = { 'D': 0.02, }

14 === >> potential first choice(s)

15 * choice : ['A', 'G']

16 independence : 0.00

17 dominance : 0.78

18 absorbency : 0.00

19 covering (%) : 70.00

20 determinateness (%) : 50.00

21 - most credible action(s) = { }

22 === >> potential first choice(s)

23 * choice : ['B', 'C', 'E']

24 independence : 0.00

25 dominance : 0.10

26 absorbency : -1.00

27 covering (%) : 50.00

28 determinateness (%) : 50.00

29 - most credible action(s) = { }

30 === >> potential last choice(s)

31 * choice : ['A', 'F']

32 independence : 0.00

33 dominance : 0.00

34 absorbency : 1.00

35 covered (%) : 70.00

36 determinateness (%) : 50.00

37 - most credible action(s) = { }

38 Execution time: 0.014 seconds

We notice in Listing 1.13 (Line 7) above that the most significantly supported best choice recommendation is indeed the Condorcet winner D supported by a majority of 51.03% of the criteria significance (see Line 12). Both other potential first choice recommendations, as well as the potential last choice recommendation, are not positively validated as best, resp. worst choices. They may or may not be considered so. Alternative A, with extreme contradictory performances, appears both, in a first and a last choice recommendation (see Lines 15 and 31) and seams hence not actually comparable to its competitors.

1.3.1.5. Computing strict best choice recommendations

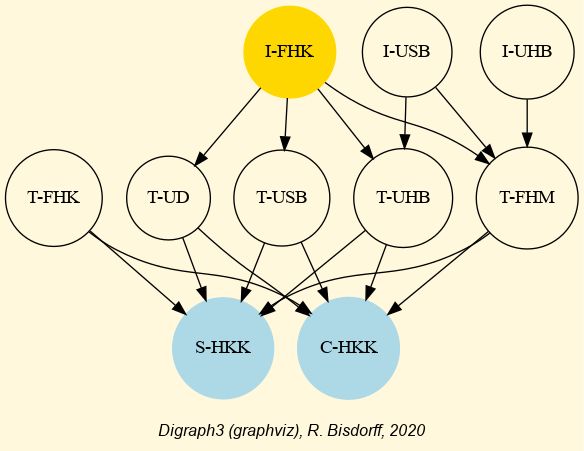

When comparing now the performances of alternatives D and G on a pairwise perspective (see below), we notice that, with the given preference discrimination thresholds, alternative G is actually certainly at least as good as alternative D: r(G outranks D) = +145/145 = +1.0.

1>>> g.showPairwiseComparison('G','D')

2 *------------ pairwise comparison ----*

3 Comparing actions : (G, D)

4 crit. wght. g(x) g(y) diff. | ind pref concord |

5 =========================================================================

6 C 45.00 -12000.00 -14100.00 +2100.00 | 1000.00 2500.00 +45.00 |

7 Cf 6.00 50.00 30.00 +20.00 | 10.00 20.00 +6.00 |

8 P 3.00 80.00 90.00 -10.00 | 10.00 20.00 +3.00 |

9 Pr 32.00 60.00 70.00 -10.00 | 10.00 20.00 +32.00 |

10 St 23.00 20.00 30.00 -10.00 | 10.00 20.00 +23.00 |

11 V 26.00 100.00 50.00 +50.00 | 10.00 20.00 +26.00 |

12 W 10.00 50.00 55.00 -5.00 | 10.00 20.00 +10.00 |

13 =========================================================================

14 Valuation in range: -145.00 to +145.00; global concordance: +145.00

However, we must as well notice that the cheapest alternative C is in fact strictly outranking alternative G: r(C outranks G) = +15/145 > 0.0, and r(G outranks C) = -15/145 < 0.0.

1>>> g.showPairwiseComparison('C','G')

2 *------------ pairwise comparison ----*

3 Comparing actions : (C, G)/(G, C)

4 crit. wght. g(x) g(y) diff. | ind. pref. (C,G)/(G,C) |

5 ==========================================================================

6 C 45.00 -6700.00 -12000.00 +5300.00 | 1000.00 2500.00 +45.00/-45.00 |

7 Cf 6.00 10.00 50.00 -40.00 | 10.00 20.00 -6.00/ +6.00 |

8 P 3.00 100.00 80.00 +20.00 | 10.00 20.00 +3.00/ -3.00 |

9 Pr 32.00 80.00 60.00 +20.00 | 10.00 20.00 +32.00/-32.00 |

10 St 23.00 0.00 20.00 -20.00 | 10.00 20.00 -23.00/+23.00 |

11 V 26.00 70.00 100.00 -30.00 | 10.00 20.00 -26.00/+26.00 |

12 W 10.00 0.00 50.00 -50.00 | 10.00 20.00 -10.00/+10.00 |

13 =========================================================================

14 Valuation in range: -145.00 to +145.00; global concordance: +15.00/-15.00

To model these strict outranking situations, we may recompute the best choice recommendation on the codual, the converse (~) of the dual (-) [14], of the outranking digraph instance g (see [BIS-2013]), as follows.

1>>> g.showBestChoiceRecommendation(

2... CoDual=True,

3... ChoiceVector=True)

4

5 * --- First and last choice recommendation(s) ---*

6 (in decreasing order of determinateness)

7 Credibility domain: [-1.00,1.00]

8 === >> potential first choice(s)

9 * choice : ['A', 'C', 'D']

10 independence : 0.00

11 dominance : 0.10

12 absorbency : 0.00

13 covering (%) : 41.67

14 determinateness (%) : 50.59

15 - characteristic vector = { 'D': 0.02, 'G': 0.00, 'C': 0.00,

16 'A': 0.00, 'F': -0.02, 'E': -0.02,

17 'B': -0.02, }

18 === >> potential last choice(s)

19 * choice : ['A', 'F']

20 independence : 0.00

21 dominance : -0.52

22 absorbency : 1.00

23 covered (%) : 50.00

24 determinateness (%) : 50.00

25 - characteristic vector = { 'G': 0.00, 'F': 0.00, 'E': 0.00,

26 'D': 0.00, 'C': 0.00, 'B': 0.00,

27 'A': 0.00, }

It is interesting to notice in Listing 1.14 (Line 9) that the strict best choice recommendation consists in the set of weak Condorcet winners: ‘A’, ‘C’ and ‘D’. In the corresponding characteristic vector (see Line 15-17), representing the bipolar credibility degree with which each alternative may indeed be considered a best choice (see [BIS-2006a], [BIS-2006b]), we find confirmed that alternative D is the only positively validated one, whereas both extreme alternatives - A (the most expensive) and C (the cheapest) - stay in an indeterminate situation. They may be potential first choice candidates besides D. Notice furthermore that compromise alternative G, while not actually included in an outranking prekernel, shows as well an indeterminate situation with respect to being or not being a potential first choice candidate.

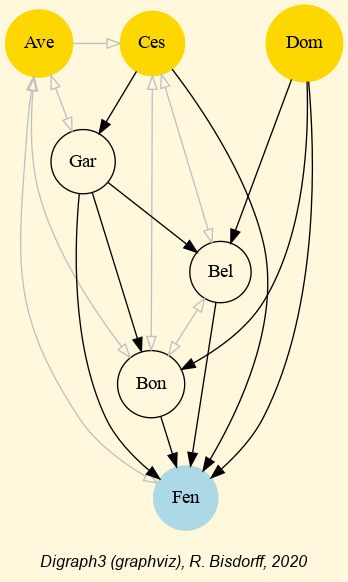

We may also notice (see Line 17 and Line 21) that both alternatives A and F are reported as certainly strict outranked choices, hence as potential last choice recommendation . This confirms again the global incomparability status of alternative A (see Fig. 1.14).

1>>> gcd = ~(-g) # codual of g

2>>> gcd.exportGraphViz(fileName='bestChoiceChoice',

3... fistChoice=['A','C','D'],

4... lastChoice=['F'])

5 *---- exporting a dot file for GraphViz tools ---------*

6 Exporting to bestOfficeChoice.dot

7 dot -Grankdir=BT -Tpng bestOfficeChoice.dot -o bestOfficeChoice.png

Fig. 1.14 Best office choice recommendation from strict outranking digraph

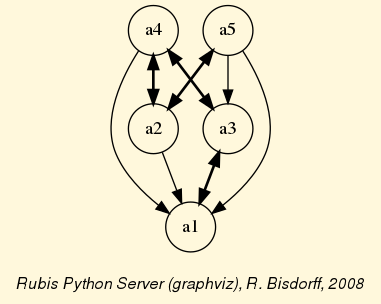

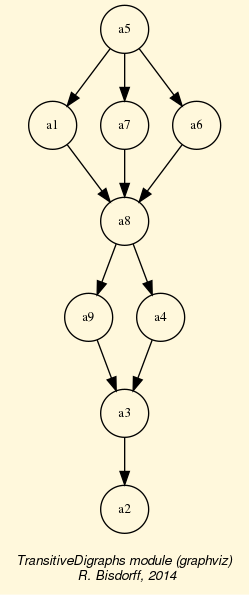

1.3.1.6. Weakly ordering the outranking digraph

To get a more complete insight in the overall strict outranking situations, we may use the RankingByChoosingDigraph constructor imported from the transitiveDigraphs module, for computing a ranking-by-choosing result from the codual, i.e. the strict outranking digraph instance gcd (see above).

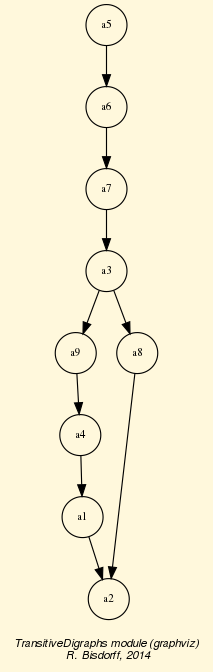

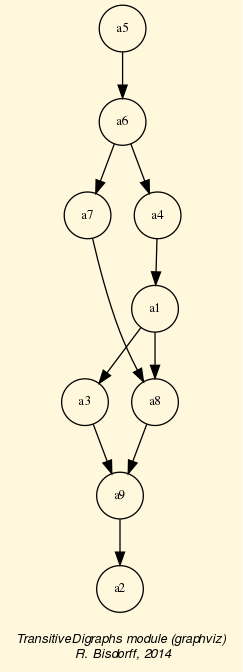

1>>> from transitiveDigraphs import RankingByChoosingDigraph

2>>> rbc = RankingByChoosingDigraph(gcd)

3 Threading ... ## multiprocessing if 2 cores are available

4 Exiting computing threads

5>>> rbc.showRankingByChoosing()

6 Ranking by Choosing and Rejecting

7 1st ranked ['D']

8 2nd ranked ['C', 'G']

9 2nd last ranked ['B', 'C', 'E']

10 1st last ranked ['A', 'F']

11>>> rbc.exportGraphViz('officeChoiceRanking')

12 *---- exporting a dot file for GraphViz tools ---------*

13 Exporting to officeChoiceRanking.dot

14 0 { rank = same; A; C; D; }

15 1 { rank = same; G; }

16 2 { rank = same; E; B; }

17 3 { rank = same; F; }

18 dot -Grankdir=TB -Tpng officeChoiceRanking.dot -o officeChoiceRanking.png

Fig. 1.15 Ranking-by-choosing from the office choice outranking digraph

In this ranking-by-choosing method, where we operate the epistemic fusion of iterated (strict) first and last choices, compromise alternative D is now ranked before compromise alternative G. If the computing node supports multiple processor cores, first and last choosing iterations are run in parallel. The overall partial ordering result shows again the important fact that the most expensive site A, and the cheapest site C, both appear incomparable with most of the other alternatives, as is apparent from the Hasse diagram of the ranking-by-choosing relation (see Fig. 1.15).

The best choice recommendation appears hence depending on the very importance the CEO is attaching to each of the three decision objectives he is considering. In the setting here, where he considers that maximizing the future turnover is the most important objective followed by minimizing the Costs and, less important, maximizing the working conditions, site D represents actually the best compromise. However, if Costs do not play much a role, it would be perhaps better to decide to move to the most advantageous site A; or if, on the contrary, Costs do matter a lot, moving to the cheapest alternative C could definitely represent a more convincing recommendation.

It might be worth, as an exercise, to modify these criteria significance weights in the ‘officeChoice.py’ data file in such a way that

all criteria under an objective appear equi-significant, and

all three decision objectives are considered equally important.

What will become the best choice recommendation under this working hypothesis?

See also

Lecture 7 notes from the MICS Algorithmic Decision Theory course: [ADT-L7].

Back to Content Table

1.3.2. How to create a new performance tableau instance

In this tutorial we illustrate a way of creating a new PerformanceTableau instance by editing a template with 5 decision alternatives, 3 decision objectives and 6 performance criteria.

1.3.2.1. Editing a template file

For this purpose we provide the following perfTab_Template.py file in the examples directory of the Digraph3 resources.

1#########################################################

2# Digraph3 documentation

3# Template for creating a new PerformanceTableau instance

4# (C) R. Bisdorff Mar 2021

5# Digraph3/examples/perfTab_Template.py

6##########################################################

7from decimal import Decimal

8from collections import OrderedDict

9#####

10# edit the decision actions

11# avoid special characters, like '_', '/' or ':',

12# in action identifiers and short names

13actions = OrderedDict([

14 ('a1', {

15 'shortName': 'action1',

16 'name': 'decision alternative a1',

17 'comment': 'some specific features of this alternative',

18 }),

19 ...

20 ...

21])

22#####

23# edit the decision objectives

24# adjust the list of performance criteria

25# and the total weight (sum of the criteria weights)

26# per objective

27objectives = OrderedDict([

28 ('obj1', {

29 'name': 'decision objective obj1',

30 'comment': "some specific features of this objective",

31 'criteria': ['g1', 'g2'],

32 'weight': Decimal('6'),

33 }),

34 ...

35 ...

36 ])

37#####

38# edit the performance criteria

39# adjust the objective reference

40# Left Decimal of a threshold = constant part and

41# right Decimal = proportional part of the threshold

42criteria = OrderedDict([

43 ('g1', {

44 'shortName': 'crit1',

45 'name': "performance criteria 1",

46 'objective': 'obj1',

47 'preferenceDirection': 'max',

48 'comment': 'measurement scale type and unit',

49 'scale': (Decimal('0.0'), Decimal('100.0'),

50 'thresholds': {'ind': (Decimal('2.50'), Decimal('0.0')),

51 'pref': (Decimal('5.00'), Decimal('0.0')),

52 'veto': (Decimal('60.00'), Decimal('0.0'))

53 },

54 'weight': Decimal('3'),

55 }),

56 ...

57 ...

58 ])

59#####

60# default missing data symbol = -999

61NA = Decimal('-999')

62#####

63# edit the performance evaluations

64# criteria to be minimized take negative grades

65evaluation = {

66 'g1': {

67 'a1':Decimal("41.0"),

68 'a2':Decimal("100.0"),

69 'a3':Decimal("63.0"),

70 'a4':Decimal('23.0'),

71 'a5': NA,

72 },

73 # g2 is of ordinal type and scale 0-10

74 'g2': {

75 'a1':Decimal("4"),

76 'a2':Decimal("10"),

77 'a3':Decimal("6"),

78 'a4':Decimal('2'),

79 'a5':Decimal('9'),

80 },

81 # g3 has preferenceDirection = 'min'

82 'g3': {

83 'a1':Decimal("-52.2"),

84 'a2':NA,

85 'a3':Decimal("-47.3"),

86 'a4':Decimal('-35.7'),

87 'a5':Decimal('-68.00'),

88 },

89 ...

90 ...

91 }

92####################

The template file, shown in Listing 1.15, contains first the instructions to import the required Decimal and OrderedDict classes (see Lines 7-8). Four main sections are following: the potential decision actions, the decision objectives, the performance criteria, and finally the performance evaluation.

1.3.2.2. Editing the decision alternatives

Decision alternatives are stored in attribute actions under the OrderedDict format (see the OrderedDict description in the Python documentation).

Required attributes of each decision alternative, besides the object identifier, are: shortName, name and comment (see Lines 15-17). The shortName attribute is essentially used when showing the performance tableau or the performance heatmap in a browser view.

Note

Mind that graphviz drawings require digraph actions’ (nodes) identifier strings without any special characters like _ or /.

Decision actions descriptions are stored in the order of which they appear in the stored instance file. The OrderedDict object keeps this given order when iterating over the decision alternatives.

The random performance tableau models presented in the previous tutorial use the actions attribute for storing special features of the decision alternatives. The Cost-Benefit model, for instance, uses a type attribute for distinguishing between advantageous, neutral and cheap alternatives. The 3-Objectives model keeps a detailed record of the performance profile per decision objective and the corresponding random generators per performance criteria (see Lines 7- below).

1>>> t = Random3ObjectivesPerformanceTableau()

2>>> t.actions

3 OrderedDict([

4 ('p01', {'shortName': 'p01',

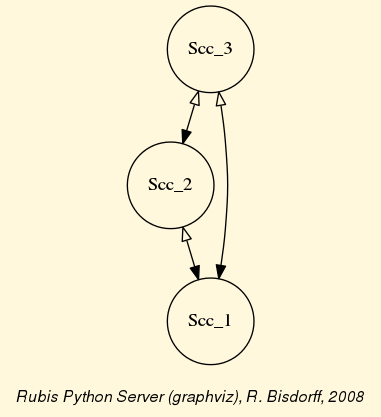

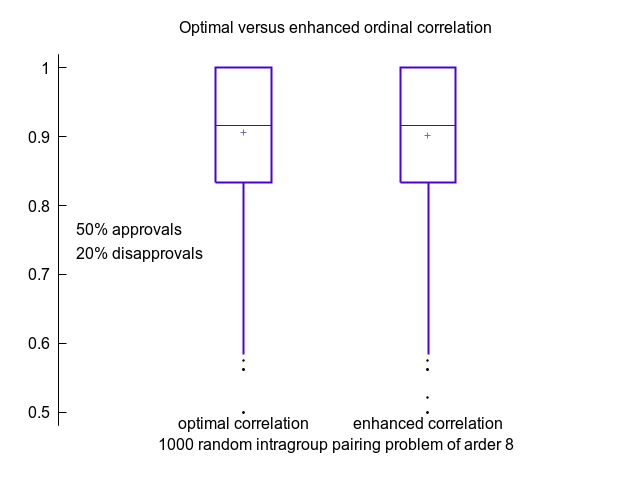

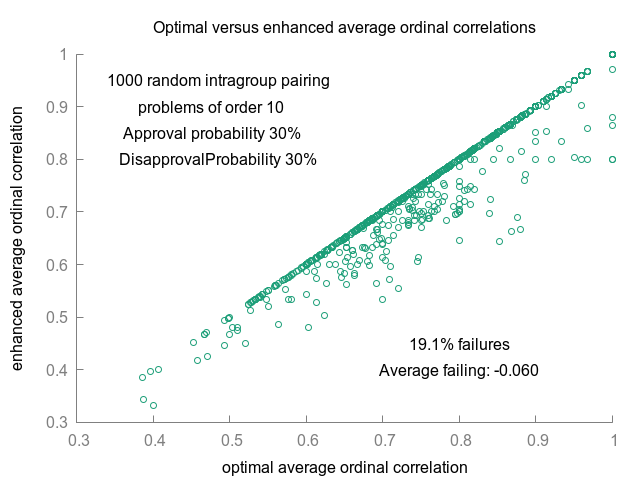

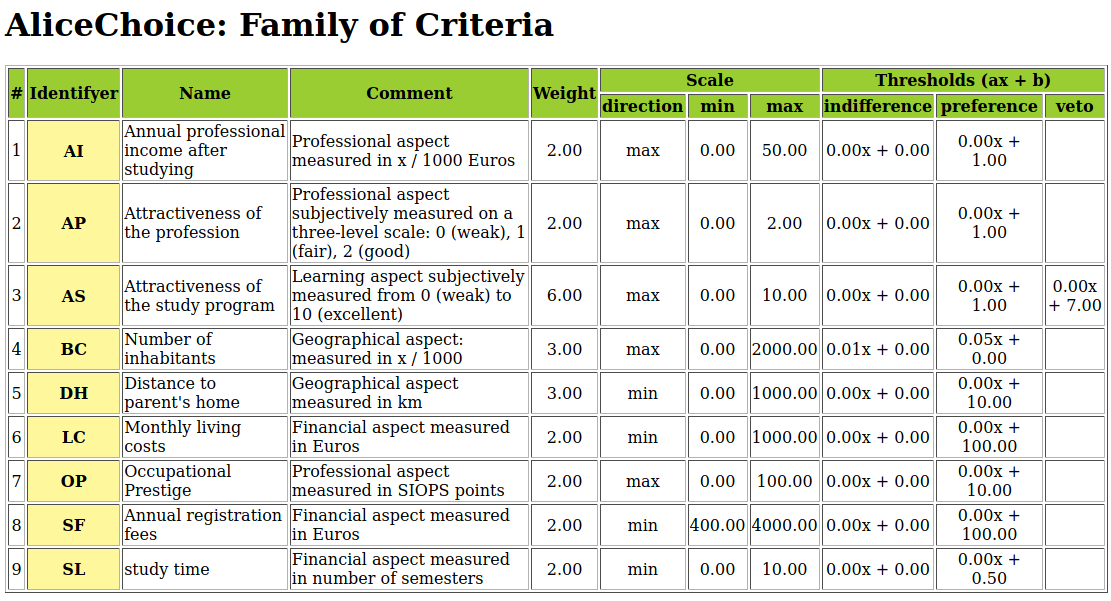

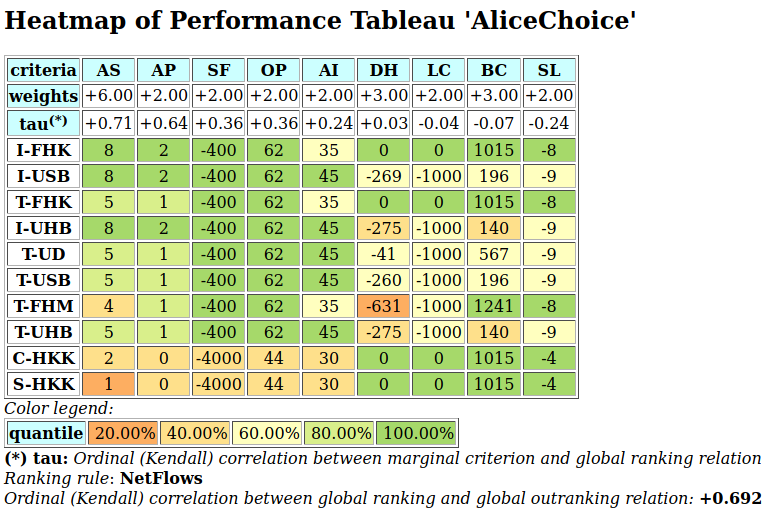

5 'name': 'action p01 Eco~ Soc- Env+',