2. Technical Reference of the Digraph3 modules

- Author:

Raymond Bisdorff, Emeritus Professor of Computer Science and Applied Mathematics, University of Luxembourg

- Url:

- Version:

Python 3.12 (release: 3.12.3)

- Copyright:

R. Bisdorff 2013-2024

- New:

Following a Python 3.12 recommendation, all multiprocessing resources have been refactored to use by default, instead of the traditional fork, the safer spawn threading start method. As a consequence, main program code of multiprocessing Digraph3 Python scripts must now start with a __name__==’__main__’ test in order to avoid its recursive execution in each started thread (see the

multiprocessingmodule).A

pairingsmodule for solving fair intergroup and intragroup pairing problemsA

dynamicProgrammingmodule for solving dynamic programming problemsThe

digraphsTools.computeSequenceAlignment()method implements the Needlemann % Wunsch dynamic programming algorithm for computing DNA sequence alignments

Preface

It is necessary to mention that the Digraph3 Python resources do not provide a professional Python software library. The collection of Python modules was not built following any professional software development methodology. The design of classes and methods was kept as simple and elementary as was opportune for the author. Sophisticated and cryptic overloading of classes, methods and variables is more or less avoided all over. A simple copy, paste and ad hoc customisation development strategy was generally preferred. As a consequence, the Digraph3 modules keep a large part of independence.

Furthermore, the development of the Digraph3 modules being spread over two decades, the programming style did evolve with growing experience and the changes and enhancement coming up with the ongoing new releases first, of the standard Python2, and later of the standard Python3 libraries. The backward compatibility requirements necessarily introduced so with time different notation and programming techniques.

Note

Two deviating features from the usually recommended Python source coding style must be mentioned. We do not use only lowercase function names and avoid all underscore separators because of Latex editing problems. Same rule is applied to variable names, except Boolean method parameters –like Debug or Comments– where we prefer using semiotic names starting with an uppercase letter.

2.1. Installation

Dowloading the Digraph3 resources

Three download options are given:

Recommended: With a browser access, download and extract the latest distribution zip archive either, from

By using a git client and cloning either, from github.com:

...$ git clone https://github.com/rbisdorff/Digraph3

Or, from sourceforge.net:

...$ git clone https://git.code.sf.net/p/digraph3/code Digraph3

On Linux or Mac OS, ..$ cd to the extracted <Digraph3> directory. From Python3.10.4 on, the distutils package and the direct usage of setup.py are deprecated. The instead recommended installation via the pip module is provided with:

../Digraph3$ make installPip

This make command launches in fact a ${PYTHON} -m pip -v install –upgrade –scr = . command that installs the Digraph3 modules in the running virtual environment (recommended option) or the user’s local site-packages directory. A system wide installation is possible with prefixing the make installPip commad with sudo. As of Python 3.11, it is necessary to previously install the wheel package ( …$ python3.11 -m pip install wheel).

For earlier Python3 version:

../Digraph3$ make installVenv

installs the Digraph3 modules in an activated virtual Python environment (the Python recommended option), or in the user’s local python3 site-packages.

Whereas:

../Digraph3$ make install

installs (with sudo ${PYTHON} setup.py) the Digraph3 modules system wide in the current running python environment. Python 3.8 (or later) environment is recommended (see the makefile for adapting to your PYTHON make constant).

If the cython (https://cython.org/) C-compiled modules for Big Data applications are required, it is necessary to previously install the cython package and, if not yet installed, the wheel package in the running Python environment:

...$ python3 -m pip install cython wheel

It is recommended to run a test suite:

.../Digraph3$ make tests

Test results are stored in the <Digraph3/test/results> directory. Notice that the python3 pytest package is therefore required:

...$ python3 -m pip install pytest pytest-xdist

A verbose (with stdout not captured) pytest suite may be run as follows:

.../Digraph3$ make verboseTests

Individual module pytest suites are also provided (see the makefile), like the one for the outrankingDigraphs module:

../Digraph3$ make outrankingDigraphsTests

When the GNU parallel shell tool is installed and multiple processor cores are detected, the tests may be executed in multiprocessing mode:

../Digraph3$ make pTests

If the pytest-xdist package is installed (see above), it is also possible to set as follws a number of pytests to be run in parallel (see the makefile):

../Digraph3$ make tests JOBS="-n 8"

The pytest module is by default ignoring Python run time warnings. It is possible to activate default warnings as follows (see the makefile):

../Digraph3$ make tests PYTHON="python3 -Wd"

Dependencies

To be fully functional, the Digraph3 resources mainly need the graphviz tools and the R statistics resources to be installed.

When exploring digraph isomorphisms, the nauty isomorphism testing program is required.

2.2. Organisation of the Digraph3 modules

The Digraph3 source code is split into several interdependent modules of which the digraphs.py module is the master module.

2.2.1. Basic modules

- digraphs module

Main part of the Digraph3 source code with the generic root Digraph class.

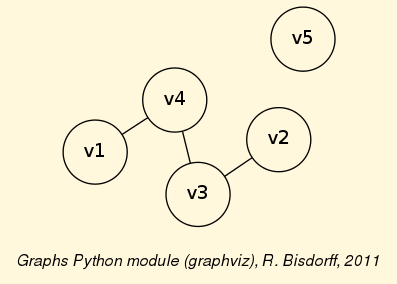

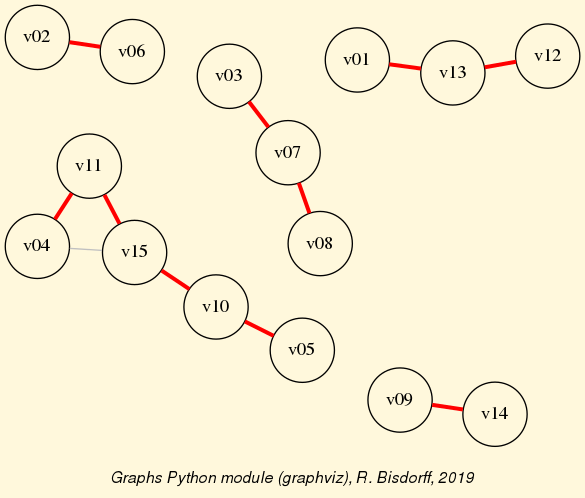

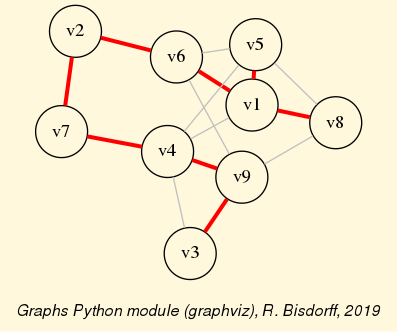

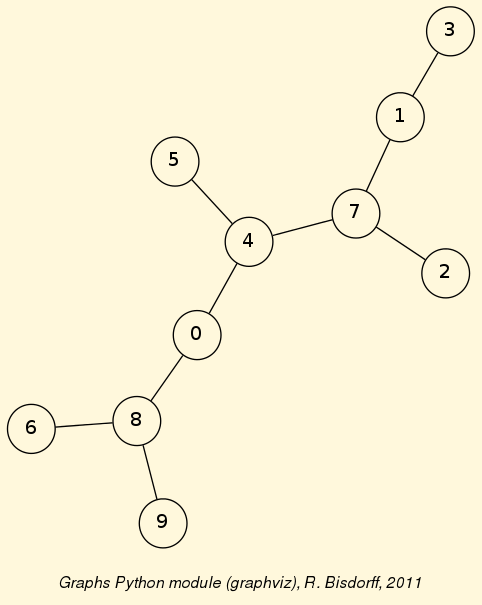

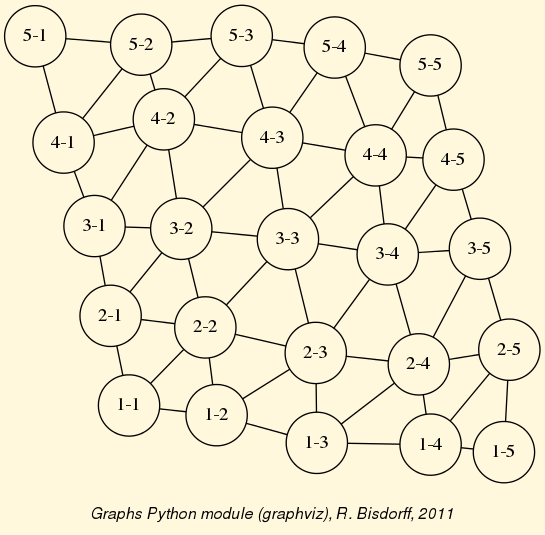

- graphs module

Resources for handling undirected graphs with the generic root Graph class and a brigde to the

digraphsmodule resources.

- perfTabs module

Tools for handling multiple criteria performance tableaux with the generic root PerformanceTableau class.

- outrankingDigraphs module

Root module for handling outranking digraphs with the abstract generic root

OutrankingDigraphclasss and the main BipolarOutrankingDigraph class. Notice that the outrankingDigraph class defines a hybrid object type, inheriting conjointly from theDigraphclass and thePerformanceTableauclass.

- votingProfiles module

Classes and methods for handling voting ballots and computing election results with main LinearVotingProfile class.

- pairings module

Classes and methods for computing fair pairings solutions with abstract generic root

Pairingclass.

- dynamicProgramming module

Classes and methods for solving dynamic programming problems with generic root

DynamicProgrammingDigraphclass.

2.2.2. Various Random generators

- randomDigraphs module

Various implemented random digraph models.

- randomPerfTabs module

Various implemented random performance tableau models.

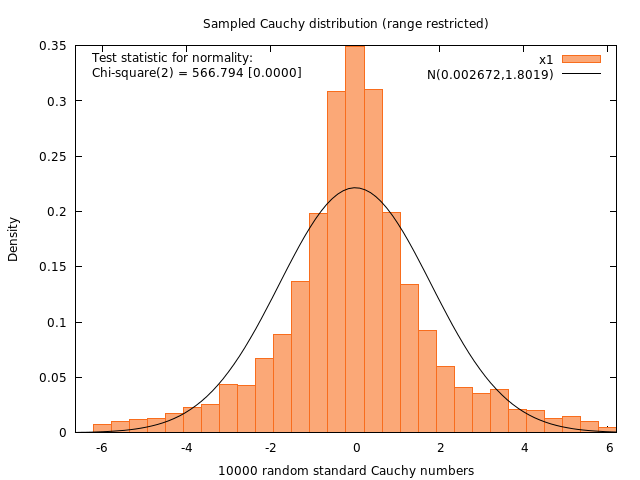

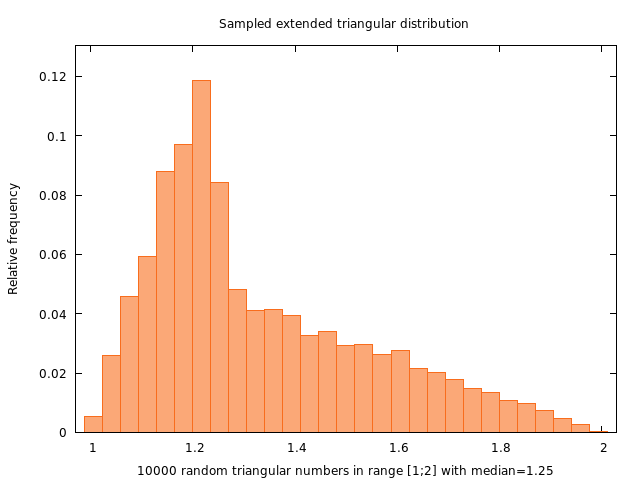

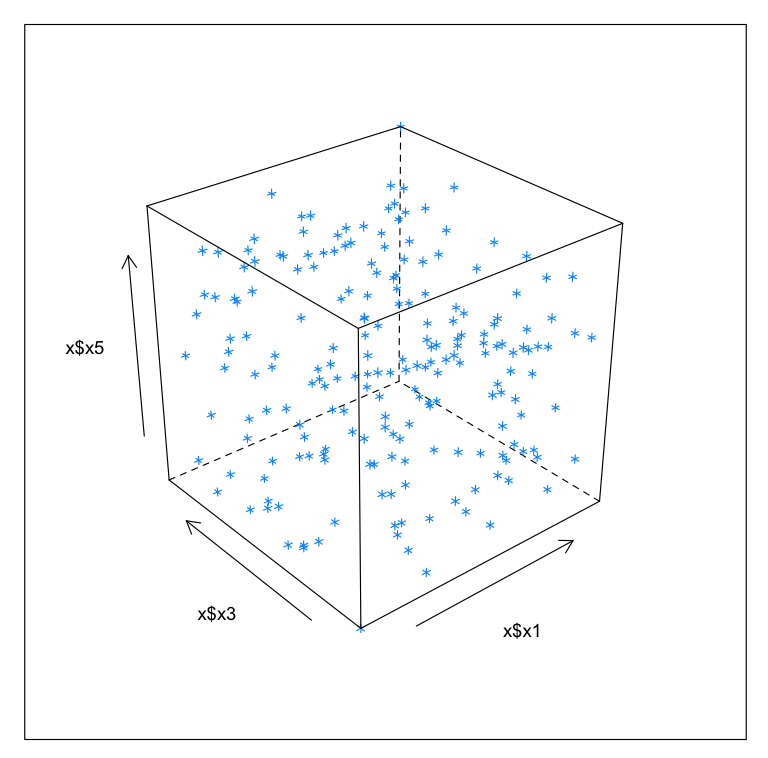

- randomNumbers module

Additional random number generators, not available in the standard python random.py library.

2.2.3. Handling big data

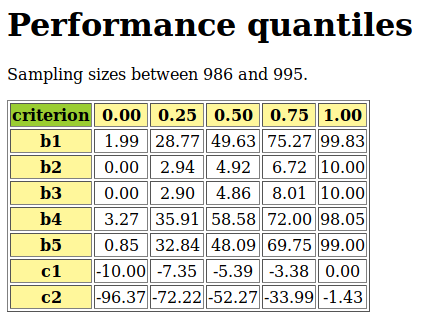

- performanceQuantiles module

Incremental representation of large performance tableaux via binned cumulated density functions per criteria. Depends on the

randomPerfTabsmodule.

- sparseOutrankingDigraphs module

Sparse implementation design for large bipolar outranking digraphs (order > 1000);

- mpOutrankingDigraphs module

New variable start-methods based multiprocessing construction of genuine bipolar-valued outranking digraphs.

- Cythonized modules for big digraphs

Cythonized C implementation for handling big performance tableaux and bipolar outranking digraphs (order > 1000).

- cRandPerfTabs module

Integer and float valued C version of the

randomPerfTabsmodule- cIntegerOutrankingDigraphs module

Integer and float valued C version of the

BipolarOutrankingDigraphclass- cIntegerSortingDigraphs module

Integer and float valued C version of the

QuantilesSortingDigraphclass- cSparseIntegerOutrankingDigraphs module

Integer and float valued C version of sparse outranking digraphs.

2.2.4. Sorting, rating and ranking tools

- ratingDigraphs module

Tools for solving relative and absolute rating problems with the abstract generic root

RatingDigraphclass;

- sortingDigraphs module

Additional tools for solving sorting problems with the generic root

SortingDigraphclass and the main QuantilesSortingDigraph class;

- linearOrders module

Additional tools for solving linearly ranking problems with the abstract generic root LinearOrder class;

- transitiveDigraphs module

Additional tools for solving pre-ranking problems with abstract generic root TransitiveDigraph class.

2.2.5. Miscellaneous tools

- digraphsTools module

Various generic methods and tools for handling digraphs.

- arithmetics module

Some common methods and tools for computing with integer numbers.

2.3. digraphs module

A tutorial with coding examples is available here: Working with the Digraph3 software resources

Inheritance Diagram

Python3+ implementation of the digraphs module, root module of the Digraph3 resources.

Copyright (C) 2006-2023 Raymond Bisdorff

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program; if not, write to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.

- class digraphs.AsymmetricPartialDigraph(digraph)[source]

Renders the asymmetric part of a Digraph instance.

Note

The non asymmetric and the reflexive links are all put to the median indeterminate characteristic value!

The constructor makes a deep copy of the given Digraph instance!

- class digraphs.BipartitePartialDigraph(digraph, partA, partB, Partial=True)[source]

Renders the bipartite part of a Digraph instance.

Note

partA and partB must be parts of the actions attribute of the given Digraph instance

The non-bipartite links are all put to the median indeterminate characteristic value

The constructor makes a deep copy of the given Digraph instance

- class digraphs.BreakAddCocsDigraph(digraph=None, Piping=False, Comments=False, Threading=False, nbrOfCPUs=1)[source]

Specialization of general Digraph class for instantiation of chordless odd circuits augmented digraphs.

Parameters:

digraph: Stored or memory resident digraph instance.

Piping: using OS pipes for data in- and output between Python and C++.

A chordless odd circuit is added if the cumulated credibility of the circuit supporting arcs is larger or equal to the cumulated credibility of the converse arcs. Otherwise, the circuit is broken at the weakest asymmetric link, i.e. a link (x, y) with minimal difference between r(x S y) - r(y S x).

- closureChordlessOddCircuits(Piping=False, Comments=True, Debug=False, Threading=False, nbrOfCPUs=1)[source]

Closure of chordless odd circuits extraction.

- class digraphs.BrokenChordlessCircuitsDigraph(digraph=None, Piping=False, Comments=False, Threading=False, nbrOfCPUs=1)[source]

Specialization of general Digraph class for instantiation of chordless circuits broken digraphs.

Parameters:

digraph: stored or memory resident digraph instance.

Piping: using OS pipes for data in- and output between Python and C++.

All chordless odd circuits are broken at the weakest asymmetric link, i.e. a link

with minimal difference between

with minimal difference between  and

and  .

.

- class digraphs.BrokenCocsDigraph(digraph=None, Piping=False, Comments=False, Threading=False, nbrOfCPUs=1)[source]

Specialization of general Digraph class for instantiation of chordless odd circuits broken digraphs.

Parameters:

digraph: stored or memory resident digraph instance.

Piping: using OS pipes for data in- and output between Python and C++.

All chordless odd circuits are broken at the weakest asymmetric link, i.e. a link

with minimal difference between

with minimal difference between  and

and  .

.

- class digraphs.CSVDigraph(fileName='temp', valuationMin=-1, valuationMax=1)[source]

Specialization of the general Digraph class for reading stored csv formatted digraphs. Using the inbuilt module csv.

- Param:

fileName (without the extension .csv).

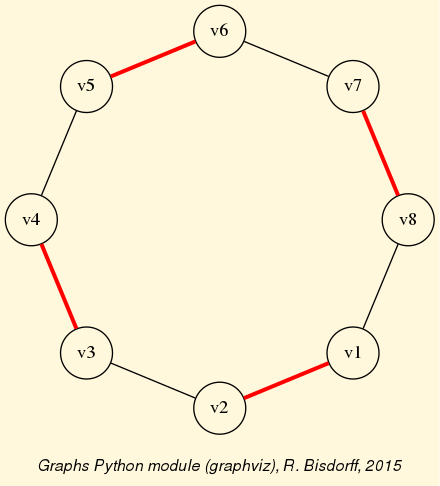

- class digraphs.CirculantDigraph(order=7, valuationdomain={'max': Decimal('1.0'), 'min': Decimal('-1.0')}, circulants=[-1, 1], IndeterminateInnerPart=False)[source]

Specialization of the general Digraph class for generating temporary circulant digraphs.

- Parameters:

- order > 0;valuationdomain ={‘min’:m, ‘max’:M};circulant connections = list of positive and/or negative circular shifts of value 1 to n.

- Default instantiation C_7:

- order = 7,valuationdomain = {‘min’:-1.0,’max’:1.0},circulants = [-1,1].

Example session:

>>> from digraphs import CirculantDigraph >>> c8 = CirculantDigraph(order=8,circulants=[1,3]) >>> c8.exportGraphViz('c8') *---- exporting a dot file for GraphViz tools ---------* Exporting to c8.dot circo -Tpng c8.dot -o c8.png # see below the graphviz drawing >>> c8.showChordlessCircuits() No circuits yet computed. Run computeChordlessCircuits()! >>> c8.computeChordlessCircuits() ... >>> c8.showChordlessCircuits() *---- Chordless circuits ----* ['1', '4', '7', '8'] , credibility : 1.0 ['1', '4', '5', '6'] , credibility : 1.0 ['1', '4', '5', '8'] , credibility : 1.0 ['1', '2', '3', '6'] , credibility : 1.0 ['1', '2', '5', '6'] , credibility : 1.0 ['1', '2', '5', '8'] , credibility : 1.0 ['2', '3', '6', '7'] , credibility : 1.0 ['2', '3', '4', '7'] , credibility : 1.0 ['2', '5', '6', '7'] , credibility : 1.0 ['3', '6', '7', '8'] , credibility : 1.0 ['3', '4', '7', '8'] , credibility : 1.0 ['3', '4', '5', '8'] , credibility : 1.0 12 circuits. >>>

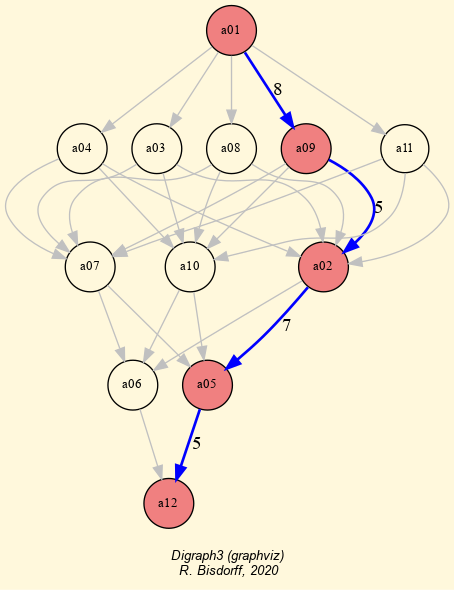

![circulant [1,3] digraph](_images/c8.png)

- class digraphs.CoDualDigraph(other, Debug=False)[source]

Instantiates the associated codual -converse of the negation- from a deep copy of a given Digraph instance called other.

Note

Instantiates self as other.__class__ ! And, deepcopies, the case given, the other.description, the other.criteria and the other.evaluation dictionaries into self.

- class digraphs.CocaDigraph(digraph=None, Piping=False, Comments=False)[source]

Old CocaDigraph class without circuit breakings; all circuits and circuits of circuits are added as hyper-nodes.

Warning

May sometimes give inconsistent results when an autranking digraph shows loads of chordless cuircuits. It is recommended in this case to use instead either the BrokenCocsDigraph class (preferred option) or the BreakAddCocsDigraph class.

Parameters:

digraph: Stored or memory resident digraph instance.

Piping: using OS pipes for data in- and output between Python and C++.

Specialization of general Digraph class for instantiation of chordless odd circuits augmented digraphs.

- class digraphs.CompleteDigraph(order=5, valuationdomain=(-1.0, 1.0))[source]

Specialization of the general Digraph class for generating temporary complete graphs of order 5 in {-1,0,1} by default.

- Parameters:

order > 0; valuationdomain=(Min,Max).

- class digraphs.ConverseDigraph(other)[source]

Instantiates the associated converse or reciprocal version from a deep copy of a given Digraph called other.

Instantiates as other.__class__ !

Deep copies, the case given, the description, the criteria and the evaluation dictionaries into self.

- class digraphs.CoverDigraph(other, Debug=False)[source]

Instantiates the associated cover relation -immediate neighbours- from a deep copy of a given Digraph called other. The Hasse diagram for instance is the cover relation of a transitive digraph.

Note

Instantiates as other.__class__ ! Copies the case given the other.description, the other.criteria and the other.evaluation dictionaries into self.

- class digraphs.CriteriaCorrelationDigraph(outrankingDigraph, ValuedCorrelation=True, WithMedian=False)[source]

Renders the ordinal criteria correlation digraph from the given outranking digraph.

If ValuedCorrelation==True, the correlation indexes represent the bipolar-valued p airwise relational equivalence between the marginal criteria outranking relation: that is tau * determination

Otherwise, the valuation represents the ordinal correlation index tau

If WithMedian==True, the correlation of the marginal criteria outranking with the global outranking relation, denoted ‘m’, is included.

- exportPrincipalImage(plotFileName=None, pictureFormat='pdf', bgcolor='cornsilk', fontcolor='red3', fontsize='0.85', tempDir='.', Comments=False)[source]

Export the principal projection of the absolute correlation distances using the three principal eigen vectors.

Implemeted picture formats are: ‘pdf’ (default), ‘png’, ‘jpeg’ and ‘xfig’.

The background, resp. font color is set by default to ‘cornsilk’, resp. ‘red3’.

_Colwise and _Reduced parameters are deprecated.

Warning

The method, writing and reading temporary files: tempCol.r and rotationCol.csv, resp. tempRow.r and rotationRow.csv, by default in the working directory (./), is hence not safe for multiprocessing programs, unless a temporary directory tempDir is provided.

- class digraphs.Digraph(file=None, order=7)[source]

Genuine root class of all Digraph3 modules. See Digraph3 tutorials.

All instances of the

digraphs.Digraphclass contain at least the following components:A collection of digraph nodes called actions (decision alternatives): a list, set or (ordered) dictionary of nodes with ‘name’ and ‘shortname’ attributes,

A logical characteristic valuationdomain, a dictionary with three decimal entries: the minimum (-1.0, means certainly false), the median (0.0, means missing information) and the maximum characteristic value (+1.0, means certainly true),

The digraph relation : a double dictionary indexed by an oriented pair of actions (nodes) and carrying a characteristic value in the range of the previous valuation domain,

Its associated gamma function : a dictionary containing the direct successors, respectively predecessors of each action, automatically added by the object constructor,

Its associated notGamma function : a dictionary containing the actions that are not direct successors respectively predecessors of each action, automatically added by the object constructor.

A previously stored

digraphs.Digraphinstance may be reloaded with the file argument:>>> from randomDigraphs import RandomValuationDigraph >>> dg = RandomValuationDigraph(order=3,Normalized=True,seed=1) >>> dg.save('testdigraph') Saving digraph in file: <testdigraph.py> >>> from digraphs import Digraph >>> dg = Digraph(file='testdigraph') # without the .py extenseion >>> dg.__dict__ {'name': 'testdigraph', 'actions': {'a1': {'name': 'random decision action', 'shortName': 'a1'}, 'a2': {'name': 'random decision action', 'shortName': 'a2'}, 'a3': {'name': 'random decision action', 'shortName': 'a3'}}, 'valuationdomain': {'min': Decimal('-1.0'), 'med': Decimal('0.0'), 'max': Decimal('1.0'), 'hasIntegerValuation': False,}, 'relation': {'a1': {'a1': Decimal('0.0'), 'a2': Decimal('-0.66'), 'a3': Decimal('0.44')}, 'a2': {'a1': Decimal('0.94'), 'a2': Decimal('0.0'), 'a3': Decimal('-0.84')}, 'a3': {'a1': Decimal('-0.36'), 'a2': Decimal('-0.70'), 'a3': Decimal('0.0')}}, 'order': 3, 'gamma': {'a1': ({'a3'}, {'a2'}), 'a2': ({'a1'}, set()), 'a3': (set(), {'a1'})}, 'notGamma': {'a1': ({'a2'}, {'a3'}), 'a2': ({'a3'}, {'a1', 'a3'}), 'a3': ({'a1', 'a2'}, {'a2'})}} >>>

- MISgen(S, I)[source]

- generator of maximal independent choices (voir Byskov 2004):

S ::= remaining nodes;

I ::= current independent choice

Note

Inititalize: self.MISgen(self.actions.copy(),set())

- abskernelrestrict(prekernel)[source]

Parameter: prekernel Renders absorbent prekernel restricted relation.

- agglomerationDistribution()[source]

Output: aggloCoeffDistribution, meanCoeff Renders the distribution of agglomeration coefficients.

- automorphismGenerators()[source]

Adds automorphism group generators to the digraph instance.

Note

Dependency: Uses the dreadnaut command from the nauty software package. See https://www3.cs.stonybrook.edu/~algorith/implement/nauty/implement.shtml

- On Ubuntu Linux:

…$ sudo apt-get install nauty

- averageCoveringIndex(choice, direction='out')[source]

Renders the average covering index of a given choice in a set of objects, ie the average number of choice members that cover each non selected object.

- bipolarKCorrelation(digraph, Debug=False)[source]

Renders the bipolar Kendall correlation between two bipolar valued digraphs computed from the average valuation of the XORDigraph(self,digraph) instance.

Warning

Obsolete! Is replaced by the self.computeBipolarCorrelation(other) Digraph method

- bipolarKDistance(digraph, Debug=False)[source]

Renders the bipolar crisp Kendall distance between two bipolar valued digraphs.

Warning

Obsolete! Is replaced by the self.computeBipolarCorrelation(other, MedianCut=True) Digraph method

- chordlessPaths(Pk, n2, Odd=False, Comments=False, Debug=False)[source]

New procedure from Agrum study April 2009 recursive chordless path extraction starting from path Pk = [n2, …., n1] and ending in node n2. Optimized with marking of visited chordless P1s.

- circuitAverageCredibility(circ)[source]

Renders the average linking credibility of a Chordless Circuit.

- circuitCredibilities(circuit, Debug=False)[source]

Renders the average linking credibilities and the minimal link of a Chordless Circuit.

- circuitMaxCredibility(circ)[source]

Renders the maximal linking credibility of a Chordless Circuit.

- circuitMinCredibility(circ)[source]

Renders the minimal linking credibility of a Chordless Circuit.

- closeTransitive(Reverse=False, InSite=True, Comments=False)[source]

Produces the transitive closure of self.relation.

Parameters:

If Reverse == True (False default) all transitive links are dropped, otherwise all transitive links are closed with min[r(x,y),r(y,z)];

If Insite == False (True by default) the methods return a modified copy of self.relation without altering the original self.relation, otherwise self.relation is modified.

- computeAllDensities(choice=None)[source]

parameter: choice in self renders six densitiy parameters: arc density, double arc density, single arc density, strict single arc density, absence arc density, strict absence arc densitiy.

- computeArrowRaynaudOrder()[source]

Renders a linear ordering from worst to best of the actions following Arrow&Raynaud’s rule.

- computeArrowRaynaudRanking()[source]

renders a linear ranking from best to worst of the actions following Arrow&Raynaud’s rule.

- computeAverageValuation()[source]

Computes the bipolar average correlation between self and the crisp complete digraph of same order of the irreflexive and determined arcs of the digraph

- computeBadChoices(Comments=False)[source]

Computes characteristic values for potentially bad choices.

Note

Returns a tuple with following content:

[(0)-determ,(1)degirred,(2)degi,(3)degd,(4)dega,(5)str(choice),(6)absvec]

- computeBadPirlotChoices(Comments=False)[source]

Characteristic values for potentially bad choices using the Pirlot’s fixpoint algorithm.

- computeBestChoiceRecommendation(Verbose=False, Comments=False, ChoiceVector=False, CoDual=True, Debug=False, _OldCoca=False, BrokenCocs=True)[source]

Sets self.bestChoice, self.bestChoiceData, self.worstChoice and self.worstChoiceData with the showBestChoiceRecommendation method.

First and last choices data is the following: [(0)-determ,(1)degirred,(2)degi,(3)degd,(4)dega,(5)str(choice),(6)domvec,(7)cover]

self.bestChoice = self.bestChoiceData[5] self.worstChoice = self.worstChoiceData[5]

- computeBipolarCorrelation(other, MedianCut=False, filterRelation=None, Debug=False)[source]

obsolete: dummy replacement for Digraph.computeOrdinalCorrelation method

- computeChordlessCircuits(Odd=False, Comments=False, Debug=False)[source]

Renders the set of all chordless circuits detected in a digraph. Result is stored in <self.circuitsList> holding a possibly empty list of tuples with at position 0 the list of adjacent actions of the circuit and at position 1 the set of actions in the stored circuit.

When Odd is True, only chordless circuits with an odd length are collected.

- computeChordlessCircuitsMP(Odd=False, Threading=False, nbrOfCPUs=None, startMethod=None, Comments=False, Debug=False)[source]

Multiprocessing version of computeChordlessCircuits().

Renders the set of all chordless odd circuits detected in a digraph. Result (possible empty list) stored in <self.circuitsList> holding a possibly empty list tuples with at position 0 the list of adjacent actions of the circuit and at position 1 the set of actions in the stored circuit. Inspired by Dias, Castonguay, Longo, Jradi, Algorithmica (2015).

Returns a possibly empty list of tuples (circuit,frozenset(circuit)).

If Odd == True, only circuits of odd length are retained in the result.

- computeConcentrationIndex(X, N)[source]

Renders the Gini concentration index of the X serie. N contains the partial frequencies. Based on the triangle summation formula.

- computeConcentrationIndexTrapez(X, N)[source]

Renders the Gini concentration index of the X serie. N contains the partial frequencies. Based on the triangles summation formula.

- computeCopelandOrder()[source]

renders a linear ordering from worst to best of the actions following Arrow&Raynaud’s rule.

- computeCopelandRanking()[source]

renders a linear ranking from best to worst of the actions following Arrow&Raynaud’s rule.

- computeCutLevelDensities(choice, level)[source]

parameter: choice in self, robustness level renders three robust densitiy parameters: robust double arc density, robust single arc density, robust absence arc densitiy.

- computeDensities(choice)[source]

parameter: choice in self renders the four densitiy parameters: arc density, double arc density, single arc density, absence arc density.

- computeDeterminateness(InPercents=False)[source]

Computes the Kendalll distance of self with the all median-valued indeterminate digraph of order n.

Return the average determination of the irreflexive part of the digraph.

determination = sum_(x,y) { abs[ r(xRy) - Med ] } / n(n-1)

If InPercents is True, returns the average determination in percentage of (Max - Med) difference.

>>> from outrankingDigraphs import BipolarOutrankingDigraph >>> from randomPerfTabs import Random3ObjectivesPerformanceTableau >>> t = Random3ObjectivesPerformanceTableau(numberOfActions=7,numberOfCriteria=7,seed=101) >>> g = BipolarOutrankingDigraph(t,Normalized=True) >>> g *------- Object instance description ------* Instance class : BipolarOutrankingDigraph Instance name : rel_random3ObjectivesPerfTab Actions : 7 Criteria : 7 Size : 27 Determinateness (%) : 65.67 Valuation domain : [-1.00;1.00] >>> print(g.computeDeterminateness()) 0.3134920634920634920634920638 >>> print(g.computeDeterminateness(InPercents=True)) 65.67460317460317460317460320 >>> g.recodeValuation(0,1) >>> g *------- Object instance description ------* Instance class : BipolarOutrankingDigraph Instance name : rel_random3ObjectivesPerfTab Actions : 7 Criteria : 7 Size : 27 Determinateness (%) : 65.67 Valuation domain : [0.00;1.00] >>> print(g.computeDeterminateness()) 0.1567460317460317460317460318 >>> print(g.computeDeterminateness(InPercents=True)) 65.67460317460317460317460320

- computeDiameter(Oriented=True)[source]

Renders the (by default oriented) diameter of the digraph instance

- computeDigraphCentres(WeakDistances=False, Comments=False)[source]

The centers of a digraph are the nodes with finite minimal shortes path lengths.

The maximal neighborhood distances are stored in self.maximalNeighborhoodDistances.

The corresponding digraph radius and diameter are stored respectively in self.radius and self.diameter.

With Comments = True, all these results are printed out.

Source: Claude Berge, The Theory of Graphs, Dover (2001) pp. 119, original in French Dunod (1958)

- computeDynamicProgrammingStages(source, sink, Debug=False)[source]

Renders the discrete stages of the optimal substructure for dynamic pogramming digrahs from a given source node to a given sink sink node.

Returns a list of list of action identifyers.

- computeGoodChoiceVector(ker, Comments=False)[source]

- Computing Characteristic values for dominant pre-kernelsusing the von Neumann dual fixoint equation

- computeGoodChoices(Comments=False)[source]

Computes characteristic values for potentially good choices.

..note:

Return a tuple with following content: [(0)-determ,(1)degirred,(2)degi,(3)degd,(4)dega,(5)str(choice),(6)domvec,(7)cover]

- computeGoodPirlotChoices(Comments=False)[source]

Characteristic values for potentially good choices using the Pirlot fixpoint algorithm.

- computeIncomparabilityDegree(InPercents=False, Comments=False)[source]

Renders the incomparability degree (Decimal), i.e. the relative number of symmetric indeterminate relations of the irreflexive part of a digraph.

- computeKemenyIndex(otherRelation)[source]

renders the Kemeny index of the self.relation compared with a given crisp valued relation of a compatible other digraph (same nodes or actions).

- computeKemenyOrder(orderLimit=7, Debug=False)[source]

Renders a ordering from worst to best of the actions with maximal Kemeny index. Return a tuple: kemenyOrder (from worst to best), kemenyIndex

- computeKemenyRanking(orderLimit=7, seed=None, sampleSize=1000, Debug=False)[source]

Renders a ranking from best to worst of the actions with maximal Kemeny index.

Note

Returns a tuple: kemenyRanking (from best to worst), kemenyIndex.

- computeKernelVector(kernel, Initial=True, Comments=False)[source]

- Computing Characteristic values for dominant pre-kernelsusing the von Neumann dual fixpoint equation

- computeKohlerOrder()[source]

Renders an ordering (worst to best) of the actions following Kohler’s rule.

- computeKohlerRanking()[source]

Renders a ranking (best to worst) of the actions following Kohler’s rule.

- computeMaxHoleSize(Comments=False)[source]

Renders the length of the largest chordless cycle in the corresponding disjunctive undirected graph.

- computeMeanInDegree()[source]

Renders the mean indegree of self. !!! self.size must be set previously !!!

- computeMeanOutDegree()[source]

Renders the mean degree of self. !!! self.size must be set previously !!!

- computeMeanSymDegree()[source]

Renders the mean degree of self. !!! self.size must be set previously !!!

- computeMedianOutDegree()[source]

Renders the median outdegree of self. !!! self.size must be set previously !!!

- computeMedianSymDegree()[source]

Renders the median symmetric degree of self. !!! self.size must be set previously !!!

Renders a list of more or less unrelated pairs.

- computeNetFlowsOrder()[source]

Renders an ordered list (from best to worst) of the actions following the net flows ranking rule.

- computeNetFlowsOrderDict()[source]

Renders an ordered list (from worst to best) of the actions following the net flows ranking rule.

- computeNetFlowsRanking()[source]

Renders an ordered list (from best to worst) of the actions following the net flows ranking rule.

- computeNetFlowsRankingDict()[source]

Renders an ordered list (from best to worst) of the actions following the net flows ranking rule.

- computeODistance(op2, comments=False)[source]

renders the squared normalized distance of two digraph valuations.

Note

op2 = digraphs of same order as self.

- computeOrbit(choice, withListing=False)[source]

renders the set of isomorph copies of a choice following the automorphism of the digraph self

- computeOrderCorrelation(order, Debug=False)[source]

Renders the ordinal correlation K of a digraph instance when compared with a given linear order (from worst to best) of its actions

K = sum_{x != y} [ min( max(-self.relation(x,y)),other.relation(x,y), max(self.relation(x,y),-other.relation(x,y)) ]

K /= sum_{x!=y} [ min(abs(self.relation(x,y),abs(other.relation(x,y)) ]

Note

Renders a dictionary with the key ‘correlation’ containing the actual bipolar correlation index and the key ‘determination’ containing the minimal determination level D of self and the other relation.

D = sum_{x != y} min(abs(self.relation(x,y)),abs(other.relation(x,y)) / n(n-1)

where n is the number of actions considered.

The correlation index with a completely indeterminate relation is by convention 0.0 at determination level 0.0 .

Warning

self must be a normalized outranking digraph instance !

- computeOrdinalCorrelation(other, MedianCut=False, filterRelation=None, Debug=False)[source]

Renders the bipolar correlation K of a self.relation when compared with a given compatible (same actions set)) digraph or a [-1,1] valued compatible relation (same actions set).

If MedianCut=True, the correlation is computed on the median polarized relations.

If filterRelation is not None, the correlation is computed on the partial domain corresponding to the determined part of the filter relation.

Warning

Notice that the ‘other’ relation and/or the ‘filterRelation’, the case given, must both be normalized, ie [-1,1]-valued !

K = sum_{x != y} [ min( max(-self.relation[x][y]),other.relation[x][y]), max(self.relation[x][y],-other.relation[x][y]) ]

K /= sum_{x!=y} [ min(abs(self.relation[x][y]),abs(other.relation[x][y])) ]

Note

Renders a tuple with at position 0 the actual bipolar correlation index and in position 1 the minimal determination level D of self and the other relation.

D = sum_{x != y} min(abs(self.relation[x][y]),abs(other.relation[x][y])) / n(n-1)

where n is the number of actions considered.

The correlation index with a completely indeterminate relation is by convention 0.0 at determination level 0.0 .

- computeOrdinalCorrelationMP(other, MedianCut=False, Threading=False, nbrOfCPUs=None, startMethod=None, Comments=False, Debug=False)[source]

Multi processing version of the digraphs.computeOrdinalCorrelation() method.

Note

The relation filtering and the MedinaCut option are not implemented in the MP version.

- computePairwiseClusterComparison(K1, K2, Debug=False)[source]

Computes the pairwise cluster comparison credibility vector from bipolar-valued digraph g. with K1 and K2 disjoint lists of action keys from g actions disctionary. Returns the dictionary {‘I’: Decimal(),’P+’:Decimal(),’P-‘:Decimal(),’R’ :Decimal()} where one and only one item is strictly positive.

- computePreKernels()[source]

- computing dominant and absorbent preKernels:

Result in self.dompreKernels and self.abspreKernels

- computePreRankingRelation(preRanking, Normalized=True, Debug=False)[source]

Renders the bipolar-valued relation obtained from a given preRanking in decreasing levels (list of lists) result.

- computePreorderRelation(preorder, Normalized=True, Debug=False)[source]

Renders the bipolar-valued relation obtained from a given preordering in increasing levels (list of lists) result.

- computePrincipalOrder(Colwise=False, Comments=False)[source]

Rendesr an ordering from wrost to best of the decision actions.

- computePrincipalRanking(Colwise=False, Comments=False)[source]

Rendesr a ranking from best to worst of the decision actions.

- computePrincipalScores(plotFileName=None, Colwise=False, imageType=None, tempDir=None, bgcolor='cornsilk', Comments=False, Debug=False)[source]

Renders a ordered list of the first principal eigenvector of the covariance of the valued outdegrees of self.

Note

The method, relying on writing and reading temporary files by default in a temporary directory is threading and multiprocessing safe ! (see Digraph.exportPrincipalImage method)

- computePrudentBetaLevel(Debug=False)[source]

computes alpha, ie the lowest valuation level, for which the bipolarly polarised digraph doesn’t contain a chordless circuit.

- computeRankingByBestChoosing(CoDual=False, Debug=False)[source]

Computes a weak preordering of the self.actions by recursive best choice elagations.

Stores in self.rankingByBestChoosing[‘result’] a list of (P+,bestChoice) tuples where P+ gives the best choice complement outranking average valuation via the computePairwiseClusterComparison method.

If self.rankingByBestChoosing[‘CoDual’] is True, the ranking-by-choosing was computed on the codual of self.

- computeRankingByBestChoosingRelation(rankingByBestChoosing=None, Debug=False)[source]

Renders the bipolar-valued relation obtained from the self.rankingByBestChoosing result.

- computeRankingByChoosing(actionsSubset=None, Debug=False, CoDual=False)[source]

Computes a weak preordring of the self.actions by iterating jointly first and last choice elagations.

Stores in self.rankingByChoosing[‘result’] a list of ((P+,bestChoice),(P-,worstChoice)) pairs where P+ (resp. P-) gives the best (resp. worst) choice complement outranking (resp. outranked) average valuation via the computePairwiseClusterComparison method.

If self.rankingByChoosing[‘CoDual’] is True, the ranking-by-choosing was computed on the codual of self.

- computeRankingByChoosingRelation(rankingByChoosing=None, actionsSubset=None, Debug=False)[source]

Renders the bipolar-valued relation obtained from the self.rankingByChoosing result.

- computeRankingByLastChoosing(CoDual=False, Debug=False)[source]

Computes a weak preordring of the self.actions by iterating worst choice elagations.

Stores in self.rankingByLastChoosing[‘result’] a list of (P-,worstChoice) pairs where P- gives the worst choice complement outranked average valuation via the computePairwiseClusterComparison method.

If self.rankingByChoosing[‘CoDual’] is True, the ranking-by-last-chossing was computed on the codual of self.

- computeRankingByLastChoosingRelation(rankingByLastChoosing=None, Debug=False)[source]

Renders the bipolar-valued relation obtained from the self.rankingByLastChoosing result.

- computeRankingCorrelation(ranking, Debug=False)[source]

Renders the ordinal correlation K of a digraph instance when compared with a given linear ranking of its actions

K = sum_{x != y} [ min( max(-self.relation(x,y)),other.relation(x,y), max(self.relation(x,y),-other.relation(x,y)) ]

K /= sum_{x!=y} [ min(abs(self.relation(x,y),abs(other.relation(x,y)) ]

Note

Renders a tuple with at position 0 the actual bipolar correlation index and in position 1 the minimal determination level D of self and the other relation.

D = sum_{x != y} min(abs(self.relation(x,y)),abs(other.relation(x,y)) / n(n-1)

where n is the number of actions considered.

The correlation index with a completely indeterminate relation is by convention 0.0 at determination level 0.0 .

- computeRelationalStructure(Debug=False)[source]

Renders the counted decomposition of the valued relations into the following type of links: gt ‘>’, eq ‘=’, lt ‘<’, incomp ‘<>’, leq ‘<=’, geq ‘>=’, indeterm ‘?’

- computeRubisChoice(Comments=False, _OldCoca=False, BrokenCocs=True, Threading=False, nbrOfCPUs=1)[source]

Renders self.strictGoodChoices, self.nullChoices self.strictBadChoices, self.nonRobustChoices.

Warning

Changes in site the outranking digraph by adding or braking chordless odd outranking circuits.

- computeRubyChoice(Comments=False, _OldCoca=False)[source]

dummy for computeRubisChoice() old versions compatibility.

- computeShortestPathLengths(WeakPaths=False, Comments=False, Debug=False)[source]

Renders a double dictionary with the directed distances, i.e. the shortest path lengths between all self.actions.

Equals None if there does not exist a directed path between two actions.

Source: Claude Berge, The Theory of Graphs, Dover (2001) pp. 119, original in French Dunod (1958)

- computeSingletonRanking(Comments=False, Debug=False)[source]

Renders the sorted bipolar net determinatation of outrankingness minus outrankedness credibilities of all singleton choices.

res = ((netdet,singleton,dom,absorb)+)

- computeSlaterOrder(isProbabilistic=False, seed=None, sampleSize=1000, Debug=False)[source]

Reversed return from computeSlaterRanking method.

- computeSlaterRanking(isProbabilistic=False, seed=None, sampleSize=1000, Debug=False)[source]

Renders a ranking of the actions with minimal Slater index. Return a tuple: slaterOrder, slaterIndex

- computeSymmetryDegree(InPercents=False, Comments=False)[source]

Renders the symmetry degree (Decimal) of the irreflexive part of a digraph.

Note

Empty and indeterminate digraphs are considered to be symmetric.

- computeTransitivityDegree(InPercents=False, Comments=False)[source]

Renders the transitivity degree (Decimal) of a digraph.

Note

An empty or indeterminate digraph is considered to be transitive.

Renders a list of more or less unrelated pairs.

- computeValuationLevels(choice=None, Debug=False)[source]

renders the symmetric closure of the apparent valuations levels of self in an increasingly ordered list. If parameter choice is given, the computation is limited to the actions of the choice.

- computeValuationPercentages(choice, percentiles, withValues=False)[source]

Parameters: choice and list of percentiles. renders a series of percentages of the characteristics valuation of the arcs in the digraph.

- computeValuationPercentiles(choice, percentages, withValues=False)[source]

Parameters: choice and list of percentages. renders a series of quantiles of the characteristics valuation of the arcs in the digraph.

- computeValuationStatistics(Sampling=False, Comments=False)[source]

Renders the mean and variance of the valuation of the non reflexive pairs.

- computeValuedRankingRelation(ranking)[source]

Renders the valued relation characteristics compatible with the given linar ranking. Discordant charcateristics are set to the indeterminate value.

- computeupdown1(s, S)[source]

Help method for show_MIS_HB2 method. fills self.newmisset, self.upmis, self.downmis.

- computeupdown2(s, S)[source]

Help method for show_MIS_HB1 method. Fills self.newmisset, self.upmis, self.downmis.

- computeupdown2irred(s, S)[source]

Help method for show_MIS_HB1 method. Fills self.newmisset, self.upmis, self.downmis.

- condorcetLosers()[source]

Renders the set of decision actions x such that self.relation[x][y] < self.valuationdomain[‘med’] for all y != x.

- condorcetWinners()[source]

Renders the set of decision actions x such that self.relation[x][y] > self.valuationdomain[‘med’] for all y != x.

- convertRelationToDecimal()[source]

Converts the float valued self.relation in a decimal valued one.

- convertValuation2Integer(InSite=True, Comments=False)[source]

Converts the self.relation valuation to integer values by converting the Decimals to Fractions and multiply by the least commun multiple of the fraction denominators.

Parameters:

If Insite == False (True by default) the method returns a modified copy of self.relation without altering the original self.relation, otherwise self.relation and self.valuationdomain is modified.

- coveringIndex(choice, direction='out')[source]

Renders the covering index of a given choice in a set of objects, ie the minimum number of choice members that cover each non selected object.

- crispKDistance(digraph, Debug=False)[source]

Renders the crisp Kendall distance between two bipolar valued digraphs.

Warning

Obsolete! Is replaced by the self.computeBipolarCorrelation(other, MedianCut=True) Digraph method

- detectChordlessCircuits(Comments=False, Debug=False)[source]

Detects a chordless circuit in a digraph. Returns a Boolean

- detectChordlessPath(Pk, n2, Comments=False, Debug=False)[source]

New procedure from Agrum study April 2009 recursive chordless path extraction starting from path Pk = [n2, …., n1] and ending in node n2. Optimized with marking of visited chordless P1s.

- determinateness(vec, inPercent=True)[source]

Renders the determinateness of a characteristic vector vec = [(r(x),x),(r(y),y), …] of length n in valuationdomain [Min,Med,Max]:

result = sum_x( abs(r(x)-Med) ) / ( n*(Max-Med) )

If inPercent, result shifted (+1) and reduced (/2) to [0,1] range.

- digraph2Graph(valuationDomain={'max': 1, 'med': 0, 'min': -1}, Debug=False, ConjunctiveConversion=True)[source]

Convert a Digraph instance to a Graph instance.

- dominantChoices(S)[source]

Generates all minimal dominant choices of a bipolar valued digraph.

Note

Initiate with S = self.actions.copy().

- domkernelrestrict(prekernel)[source]

Parameter: dominant prekernel Renders dominant prekernel restricted relation.

- exportGraphViz(fileName=None, actionsSubset=None, bestChoice={}, worstChoice={}, firstChoice={}, lastChoice={}, Comments=True, graphType='png', pictureFormat=None, graphSize='7,7', relation=None, bgcolor='cornsilk')[source]

export GraphViz dot file for graph drawing filtering.

- exportPrincipalImage(plotFileName=None, pictureFormat='pdf', bgcolor='cornsilk', fontcolor='red3', fontsize='0.75', Reduced=False, Colwise=False, tempDir='.', Comments=False)[source]

Export as PDF (default) the principal projection of the valued relation using the three principal eigen vectors.

Implemeted picture formats are: ‘pdf’ (default), ‘png’, ‘jpeg’ and ‘xfig’.

The background color is set by default to ‘cornsilk’.

Font size and color are set by default to ‘red3’, resp. ‘0.75’.

When Reduced==True, the valued relation characeteristics are centered and reduced.

When Colwise==True, the column vectors of the adjacency table are used for the principal projection, otherwise the rows (default) are used. Has no incidence when the Digraph instance self is symmetric.

Warning

The method, writing and reading temporary files: tempCol.r and rotationCol.csv, resp. tempRow.r and rotationRow.csv, by default in the working directory (./), is hence not safe for multiprocessing programs, unless a temporary directory tempDir is provided.

- gammaSets()[source]

Renders the dictionary of neighborhoods {node: (dx,ax)} with set dx gathering the dominated, and set ax gathering the absorbed neighborhood.

- generateAbsPreKernels()[source]

Generate all absorbent prekernels from independent choices generator.

- generateDomPreKernels()[source]

Generate all dominant prekernels from independent choices generator.

- htmlChoiceVector(ch, ChoiceVector=True, choiceType='good')[source]

Show procedure for annotated bipolar choices.

- independentChoices(U)[source]

Generator for all independent choices with neighborhoods of a bipolar valued digraph:

Note

Initiate with U = self.singletons().

Yields [(independent choice, domnb, absnb, indnb)].

- inner_prod(v1, v2)[source]

Parameters: two choice characteristic vectors Renders the inner product of two characteristic vetors.

- isAsymmetricIndeterminate(Debug=False)[source]

Checks the self.relation for assymmetric indeterminateness!!

Warning

The reflexive links are ignored !!

- isComplete(Debug=False)[source]

checks the completeness property of self.relation by checking for the absence of a link between two actions!!

Warning

The reflexive links are ignored !!

- isCyclic(Debug=False)[source]

checks the cyclicity of self.relation by checking for a reflexive loop in its transitive closure-

Warning

self.relation is supposed to be irreflexive !

- isIntegerValued(Debug=False)[source]

Checks whether the decimal valuation of self is integer-valued be using the as_integer_ratio() method of a Decimal giving a tuple (numerator,denominator). If denominator == 1, the number is an integer.

- isOutrankingDigraph(Comments=True, Debug=False)[source]

Checks the outranking digraph characteristic condition (3.3).

relation[x][y] + relation[y][x)[y] >= 0.0

Warning

The reflexive links are ignored and the valuation must be bipolar !!

- isStrictOutrankingDigraph(Comments=True, Debug=False)[source]

Checks the strict outranking digraph characteristic condition (3.1).

-(relation[x][y] + relation[y][x]) <= 0.0 , x != y

Warning

The reflexive links are ignored and the valuation must be bipolar !!

- isWeaklyComplete(Debug=False)[source]

checks the weakly completeness property of self.relation by checking for the absence of a link between two actions!!

Warning

The reflexive links are ignored !!

- iterateRankingByChoosing(Odd=False, CoDual=False, Comments=True, Debug=False, Limited=None)[source]

Renders a ranking by choosing result when progressively eliminating all chordless (odd only) circuits with rising valuation cut levels.

- Parameters

CoDual = False (default)/True Limited = proportion (in [0,1]) * (max - med) valuationdomain

- matmult2(m, v)[source]

Parameters: digraph relation and choice characteristic vector matrix multiply vector by inner production

- meanLength(Oriented=False)[source]

Renders the (by default non-oriented) mean neighbourhoor depth of self. !!! self.order must be set previously !!!

- minimalValuationLevelForCircuitsElimination(Odd=True, Debug=False, Comments=False)[source]

renders the minimal valuation level <lambda> that eliminates all self.circuitsList stored odd chordless circuits from self.

Warning

The <lambda> level polarised may still contain newly appearing chordless odd circuits !

- neighbourhoodDepthDistribution(Oriented=False)[source]

Renders the distribtion of neighbourhood depths.

- notGammaSets()[source]

Renders the dictionary of neighborhoods {node: (dx,ax)} with set dx gathering the not dominated, and set ax gathering the not absorbed neighborhood.

- readPerrinMisset(file='curd.dat')[source]

read method for 0-1-char-coded MISs by default from the perrinMIS.c curd.dat result file.

- recodeValuation(newMin=-1.0, newMax=1.0, ndigits=4, Debug=False)[source]

Recodes the characteristic valuation domain according to the parameters given.

ndigits indicates the number of decimal digits of the valuation.

- relationFct(x, y)[source]

wrapper for self.relation dictionary access to ensure interoperability with the sparse and big outranking digraph implementation model.

- save(fileName='tempdigraph', option=None, DecimalValuation=True, decDigits=2)[source]

Persistent storage of a Digraph class instance in the form of a python source code file

- saveCSV(fileName='tempdigraph', Normalized=False, Dual=False, Converse=False, Diagonal=False, Debug=False)[source]

Persistent storage of a Digraph class instance in the form of a csv file.

- saveXMCDA2(fileName='temp', fileExt='xmcda2', Comments=True, relationName='R', relationType='binary', category='random', subcategory='valued', author='digraphs Module (RB)', reference='saved from Python', valuationType='standard', digits=2, servingD3=False)[source]

save digraph in XMCDA 2.0 format. Deprecated now.

- sharp(x, y)[source]

Paramaters: choice characteristic values. Renders the sharpest of two characteristic values x and y.

- sharpvec(v, w)[source]

Paramaters: choice characteristic vectors. Renders the sharpest of two characteristic vectors v and w.

- showBestChoiceRecommendation(Verbose=False, Comments=True, ChoiceVector=False, CoDual=True, Debug=False, _OldCoca=False, BrokenCocs=True)[source]

Shows the RuBis best choice recommendation.

Note

Computes by default the Rubis best choice recommendation on the corresponding strict (codual) outranking digraph.

By default, with BrokenCocs=True, we brake all chordless circuits at their weakest determined ( abs(r(x>y)) + abs(r(y>x)) ) link.

When BrokenCocs=False we proceed like follows:

In case of chordless circuits, if supporting arcs are more credible than the reversed negating arcs, we collapse the circuits into hyper nodes. Inversely, if supporting arcs are not more credible than the reversed negating arcs, we brake the circuits on their weakest arc.

Usage example:

>>> from outrankingDigraphs import * >>> t = Random3ObjectivesPerformanceTableau(seed=5) >>> g = BipolarOutrankingDigraph(t) >>> g.showBestChoiceRecommendation() *********************** RuBis Best Choice Recommendation (BCR) (in decreasing order of determinateness) Credibility domain: [-100.0, 100.0] === >> potential first choices * choice : ['a04', 'a14', 'a19', 'a20'] independence : 1.19 dominance : 4.76 absorbency : -59.52 covering (%) : 75.00 determinateness (%) : 57.86 - most credible action(s) = { 'a14': 23.81, 'a19': 11.90, 'a04': 2.38, 'a20': 1.19, } === >> potential last choices * choice : ['a03', 'a12', 'a17'] independence : 4.76 dominance : -76.19 absorbency : 4.76 covering (%) : 0.00 determinateness (%) : 65.39 - most credible action(s) = { 'a03': 38.10, 'a12': 13.10, 'a17': 4.76, } Execution time: 0.024 seconds *****************************

- showChoiceVector(ch, choiceType='good', ChoiceVector=True)[source]

Show procedure for annotated bipolar choices.

- showChordlessCircuits(Recompute=False)[source]

Show method for chordless circuits observed in a Digraph instance.

If previous computation is required, stores the detected circuits in self.circuitsList attribute.

- showCorrelation(corr=None, ndigits=3)[source]

Renders the valued ordinal correlation index, the crisp Kendall tau index and their epistemic determination degree.

- showFirstChoiceRecommendation(Verbose=False, Comments=True, ChoiceVector=False, CoDual=True, Debug=False, _OldCoca=False, BrokenCocs=True)[source]

Shows the RuBis first choice recommendation.

Note

Computes by default the Rubis first choice recommendation on the corresponding strict (codual) outranking digraph.

By default, with BrokenCocs=True, we brake all chordless circuits at their weakest determined ( abs(r(x>y)) + abs(r(y>x)) ) link.

When BrokenCocs=False we proceed like follows:

In case of chordless circuits, if supporting arcs are more credible than the reversed negating arcs, we collapse the circuits into hyper nodes. Inversely, if supporting arcs are not more credible than the reversed negating arcs, we brake the circuits on their weakest arc.

Usage example:

>>> from outrankingDigraphs import * >>> t = Random3ObjectivesPerformanceTableau(seed=5) >>> g = BipolarOutrankingDigraph(t) >>> g.showFirstChoiceRecommendation() *********************** RuBis First Choice Recommendation (BCR) (in decreasing order of determinateness) Credibility domain: [-100.0, 100.0] === >> potential first choices * choice : ['a04', 'a14', 'a19', 'a20'] independence : 1.19 dominance : 4.76 absorbency : -59.52 covering (%) : 75.00 determinateness (%) : 57.86 - most credible action(s) = { 'a14': 23.81, 'a19': 11.90, 'a04': 2.38, 'a20': 1.19, } === >> potential last choices * choice : ['a03', 'a12', 'a17'] independence : 4.76 dominance : -76.19 absorbency : 4.76 covering (%) : 0.00 determinateness (%) : 65.39 - most credible action(s) = { 'a03': 38.10, 'a12': 13.10, 'a17': 4.76, } Execution time: 0.024 seconds *****************************

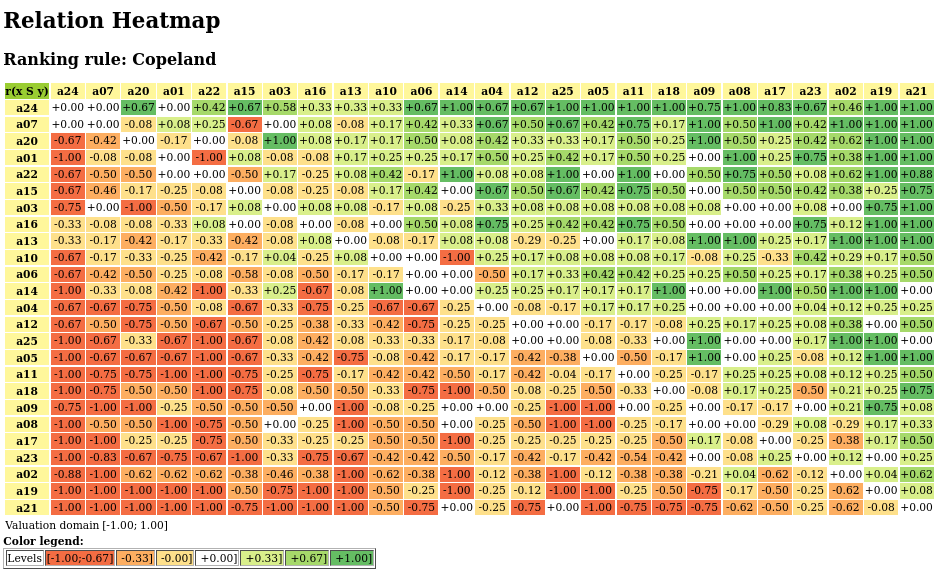

- showHTMLRelationHeatmap(actionsList=None, rankingRule='NetFlows', colorLevels=7, tableTitle='Relation Heatmap', relationName='r(x S y)', ndigits=2, fromIndex=None, toIndex=None, htmlFileName=None)[source]

Launches a browser window with the colored relation map of self.

See corresponding

showHTMLRelationMapmethod.The colorLevels parameter may be set to 3, 5, 7 (default) or 9.

When the actionsList parameter is None (default), the digraphs actions list may be ranked with the rankingRule parameter set to the ‘Copeland’ (default) or to the ‘Netlows’ ranking rule.

When the htmlFileName parameter is set to a string value ‘xxx’, a html file named ‘xxx.html’ will be generated in the current working directory. Otherwise, a temporary file named ‘tmp*.html’ will be generated there.

Example:

>>> from outrankingDigraphs import * >>> t = RandomCBPerformanceTableau(numberOfActions=25,seed=1) >>> g = BipolarOutrankingDigraph(t,ndigits=2) >>> gcd = ~(-g) # strict outranking relation >>> gcd.showHTMLRelationHeatmap(colorLevels=7,ndigits=2)

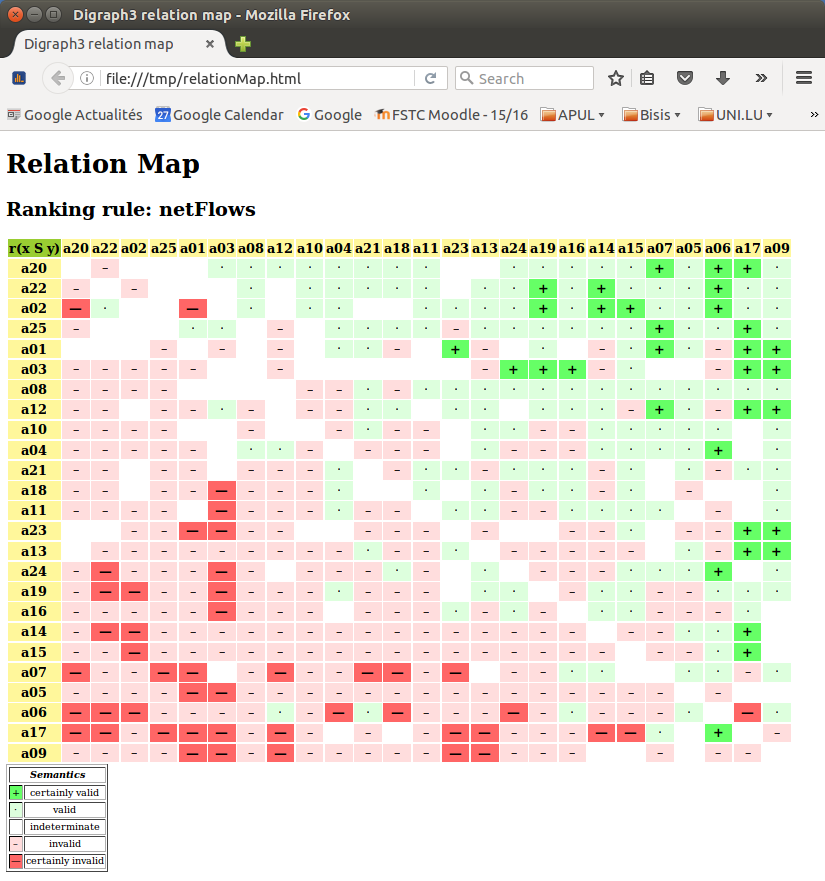

- showHTMLRelationMap(actionsList=None, rankingRule='Copeland', Colored=True, tableTitle='Relation Map', relationName='r(x S y)', symbols=['+', '·', ' ', '–', '—'], fromIndex=None, toIndex=None, htmlFileName=None)[source]

Launches a browser window with the colored relation map of self. See corresponding Digraph.showRelationMap() method.

When htmlFileName parameter is set to a string value, a html file with that name will be stored in the current working directory.

By default, a temporary file named: tmp*.html will be generated instead in the current working directory.

Example:

>>> from outrankingDigraphs import * >>> t = RandomCBPerformanceTableau(numberOfActions=25,seed=1) >>> g = BipolarOutrankingDigraph(t,Normalized=True) >>> gcd = ~(-g) # strict outranking relation >>> gcd.showHTMLRelationMap(rankingRule="NetFlows")

- showHTMLRelationTable(actionsList=None, relation=None, IntegerValues=False, ndigits=2, Colored=True, tableTitle='Valued Adjacency Matrix', relationName='r(x S y)', ReflexiveTerms=False, htmlFileName=None, fromIndex=None, toIndex=None)[source]

Launches a browser window with the colored relation table of self.

- showMIS_AH(withListing=True)[source]

Prints all MIS using the Hertz method.

Result saved in self.hertzmisset.

- showMIS_HB2(withListing=True)[source]

Prints all MIS using the Hertz-Bisdorff method.

Result saved in self.newmisset.

- showMIS_RB(withListing=True)[source]

Prints all MIS using the Bisdorff method.

Result saved in self.newmisset.

- showMIS_UD(withListing=True)[source]

Prints all MIS using the Hertz-Bisdorff method.

Result saved in self.newmisset.

- showMaxAbsIrred(withListing=True)[source]

- Computing maximal -irredundant choices:

Result in self.absirset.

- showMaxDomIrred(withListing=True)[source]

- Computing maximal +irredundant choices:

Result in self.domirset.

- showOrbits(InChoices, withListing=True)[source]

Prints the orbits of Choices along the automorphisms of the Digraph instance.

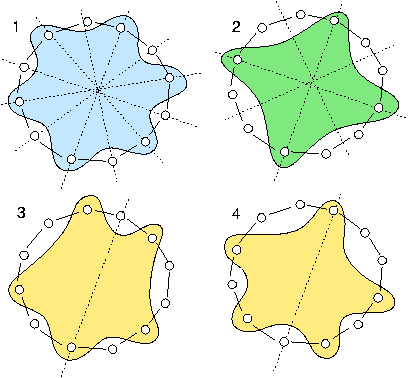

Example Python session for computing the non isomorphic MISs from the 12-cycle graph:

>>> from digraphs import * >>> c12 = CirculantDigraph(order=12,circulants=[1,-1]) >>> c12.automorphismGenerators() ... Permutations {'1': '1', '2': '12', '3': '11', '4': '10', '5': '9', '6': '8', '7': '7', '8': '6', '9': '5', '10': '4', '11': '3', '12': '2'} {'1': '2', '2': '1', '3': '12', '4': '11', '5': '10', '6': '9', '7': '8', '8': '7', '9': '6', '10': '5', '11': '4', '12': '3'} Reflections {} >>> print('grpsize = ', c12.automorphismGroupSize) grpsize = 24 >>> c12.showMIS(withListing=False) *--- Maximal independent choices ---* number of solutions: 29 cardinality distribution card.: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12] freq.: [0, 0, 0, 0, 3, 24, 2, 0, 0, 0, 0, 0, 0] Results in c12.misset >>> c12.showOrbits(c12.misset,withListing=False) ... *---- Global result ---- Number of MIS: 29 Number of orbits : 4 Labelled representatives: 1: ['2','4','6','8','10','12'] 2: ['2','5','8','11'] 3: ['2','4','6','9','11'] 4: ['1','4','7','9','11'] Symmetry vector stabilizer size: [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, ...] frequency : [0, 2, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, ...]

Figure: The symmetry axes of the non isomorphic MISs of the 12-cycle:

Reference: R. Bisdorff and J.L. Marichal (2008). Counting non-isomorphic maximal independent sets of the n-cycle graph. Journal of Integer Sequences, Vol. 11 Article 08.5.7 (openly accessible here)

- showOrbitsFromFile(InFile, withListing=True)[source]

Prints the orbits of Choices along the automorphisms of the digraph self by reading in the 0-1 misset file format. See the

digraphs.Digraph.readPerrinMisset()method.

- showPreKernels(withListing=True)[source]

- Printing dominant and absorbent preKernels:

Result in self.dompreKernels and self.abspreKernels

- showRankingByBestChoosing(rankingByBestChoosing=None)[source]

A show method for self.rankinByBestChoosing result.

Warning

The self.computeRankingByBestChoosing(CoDual=False/True) method instantiating the self.rankingByBestChoosing slot is pre-required !

- showRankingByChoosing(rankingByChoosing=None, WithCoverCredibility=False)[source]

A show method for self.rankinByChoosing result.

When parameter WithCoverCredibility is set to True, the credibility of outranking, respectively being outranked is indicated at each selection step.

Warning

The self.computeRankingByChoosing(CoDual=False/True) method instantiating the self.rankingByChoosing slot is pre-required !

- showRankingByLastChoosing(rankingByLastChoosing=None, Debug=None)[source]

A show method for self.rankinByChoosing result.

Warning

The self.computeRankingByLastChoosing(CoDual=False/True) method instantiating the self.rankingByChoosing slot is pre-required !

- showRelationMap(symbols=None, rankingRule='Copeland', fromIndex=None, toIndex=None, actionsList=None)[source]

Prints on the console, in text map format, the location of certainly validated and certainly invalidated outranking situations.

- By default, symbols = {‘max’:’┬’,’positive’: ‘+’, ‘median’: ‘ ‘,

‘negative’: ‘-’, ‘min’: ‘┴’}

The default ordering of the output is following the Copeland ranking rule from best to worst actions. Further available ranking rule is the ‘NetFlows’ net flows rule.

Example:

>>> from outrankingDigraphs import * >>> t = RandomCBPerformanceTableau(numberOfActions=25,seed=1) >>> g = BipolarOutrankingDigraph(t,Normalized=True) >>> gcd = ~(-g) # strict outranking relation >>> gcd.showRelationMap(rankingRule="NetFlows") - ++++++++ +++++┬+┬┬+ - - + +++++ ++┬+┬+++┬++ ┴+ ┴ + ++ ++++┬+┬┬++┬++ - ++ - ++++-++++++┬++┬+ - - - ++- ┬- + -+┬+-┬┬ ----- - -┬┬┬-+ -┬┬ ---- --+-+++++++++++++ -- --+- --++ ++ +++-┬+-┬┬ ---- - -+-- ++--+++++ + ----- ++- --- +---++++┬ + -- -- ---+ -++-+++-+ +-++ -- --┴---+ + +-++-+ - + ---- ┴---+-- ++--++++ - + --┴┴-- --- - --+ --┬┬ ---------+--+ ----- +-┬┬ -┴---┴- ---+- + ---+++┬ + -┴┴--┴---+--- ++ -++--+++ -----┴--- ---+-+- ++---+ -┴┴--------------- --++┬ --┴---------------- --+┬ ┴--┴┴ -┴--┴┴-┴ --++ ++-+ ----┴┴--------------- - ┴┴┴----+-┴+┴---┴-+---+ ┴+ ┴┴-┴┴┴-┴- - -┴┴---┴┴+ ┬ - ----┴┴-┴-----┴┴--- - -- Ranking rule: NetFlows >>>

- showRelationTable(Sorted=True, rankingRule=None, IntegerValues=False, actionsSubset=None, relation=None, ndigits=2, ReflexiveTerms=True, fromIndex=None, toIndex=None)[source]

Prints the relation valuation in actions X actions table format. Copeland and NetFlows rankings my be used.

- showRubisBestChoiceRecommendation(**kwargs)[source]

Dummy for backward portable showBestChoiceRecommendation().

- showRubyChoice(Verbose=False, Comments=True, _OldCoca=True)[source]

Dummy for showBestChoiceRecommendation() needed for older versions compatibility.

- showSingletonRanking(Comments=True, Debug=False)[source]

Calls self.computeSingletonRanking(comments=True,Debug = False). Renders and prints the sorted bipolar net determinatation of outrankingness minus outrankedness credibilities of all singleton choices.

res = ((netdet,sigleton,dom,absorb)+)

- singletons()[source]

list of singletons and neighborhoods [(singx1, +nx1, -nx1, not(+nx1 or -nx1)),…. ]

- sizeSubGraph(choice)[source]

Output: (size, undeterm,arcDensity). Renders the arc density of the induced subgraph.

- topologicalSort(Debug=False)[source]

If self is acyclic, adds topological sort number to each node of self and renders ordered list of nodes. Otherwise renders None. Source: M. Golumbic Algorithmic Graph heory and Perfect Graphs, Annals Of Discrete Mathematics 57 2nd Ed. , Elsevier 2004, Algorithm 2.4 p.44.

- weakCondorcetLosers()[source]

Renders the set of decision actions x such that self.relation[x][y] <= self.valuationdomain[‘med’] for all y != x.

- class digraphs.DualDigraph(other)[source]

Instantiates the dual ( = negated valuation) Digraph object from a deep copy of a given other Digraph instance.

The relation constructor returns the dual (negation) of self.relation with generic formula:

relationOut[a][b] = Max - self.relation[a][b] + Min, where Max (resp. Min) equals valuation maximum (resp. minimum).

Note

In a bipolar valuation, the dual operator corresponds to a simple changing of signs.

- class digraphs.EmptyDigraph(order=5, valuationdomain=(-1.0, 1.0))[source]

- Parameters:

order > 0 (default=5); valuationdomain =(Min,Max).

Specialization of the general Digraph class for generating temporary empty graphs of given order in {-1,0,1}.

- class digraphs.EquivalenceDigraph(d1, d2, Debug=False)[source]

Instantiates the logical equivalence digraph of two bipolar digraphs d1 and d2 of same order. Returns None if d1 and d2 are of different order

- computeCorrelation()[source]

Renders a dictionary with slots: ‘correlation’ (tau) and ‘determination’ (d), representing the ordinal correlation between the two digraphs d1 and d2 given as arguments to the EquivalenceDigraph constructor.

See the corresponding advanced topic in the Digraph3 documentation.

- class digraphs.FusionDigraph(dg1, dg2, operator='o-max', weights=None)[source]

Instantiates the epistemic fusion of two given Digraph instances called dg1 and dg2.

Parameter:

operator := “o-max (default)” | “o-min | o-average” : symmetrix disjunctive, resp. conjunctive, resp. avarage fusion operator.

weights := [a,b]: if None weights = [1,1]

- class digraphs.FusionLDigraph(L, operator='o-max', weights=None)[source]

Instantiates the epistemic fusion a list L of Digraph instances.

Parameter:

operator := “o-max” (default) | “o-min” | “o-average: epistemic disjunctive, conjunctive or symmetric average fusion.

weights := [a,b, …]: len(weights) matching len(L). If None, weights = [1 for i in range(len(L))].

- class digraphs.GraphBorder(other, Debug=False)[source]

Instantiates the partial digraph induced by its border, i.e. be the union of its initial and terminal kernels.

- class digraphs.GraphInner(other, Debug=False)[source]

Instantiates the partial digraph induced by the complement of its border, i.e. the nodes not included in the union of its initial and terminal kernels.

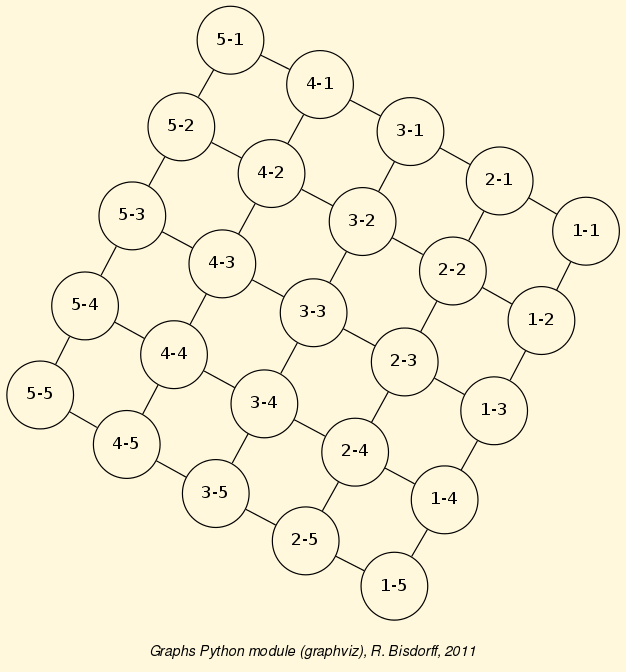

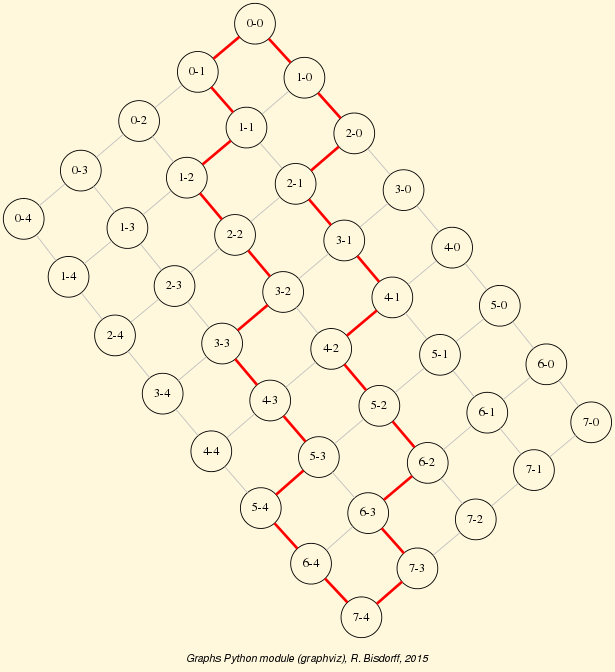

- class digraphs.GridDigraph(n=5, m=5, valuationdomain={'max': 1.0, 'min': -1.0}, hasRandomOrientation=False, hasMedianSplitOrientation=False)[source]

Specialization of the general Digraph class for generating temporary Grid digraphs of dimension n times m.

- Parameters:

n,m > 0; valuationdomain ={‘min’:m, ‘max’:M}.

- Default instantiation (5 times 5 Grid Digraph):

n = 5, m=5, valuationdomain = {‘min’:-1.0,’max’:1.0}.

Randomly orientable with hasRandomOrientation=True (default=False).

- class digraphs.IndeterminateDigraph(other=None, nodes=None, order=5, valuationdomain=(-1, 1))[source]

Parameters: order > 0; valuationdomain =(Min,Max). Specialization of the general Digraph class for generating temporary empty graphs of order 5 in {-1,0,1}.

- class digraphs.KneserDigraph(n=5, j=2, valuationdomain={'max': 1.0, 'min': -1.0})[source]

Specialization of the general Digraph class for generating temporary Kneser digraphs

- Parameters:

- n > 0; n > j > 0;valuationdomain ={‘min’:m, ‘max’:M}.

- Default instantiation as Petersen graph:

n = 5, j = 2, valuationdomain = {‘min’:-1.0,’max’:1.0}.

- class digraphs.PolarisedDigraph(digraph=None, level=None, KeepValues=True, AlphaCut=False, StrictCut=False)[source]

Renders the polarised valuation of a Digraph class instance:

- Parameters:

If level = None, a default strict 50% cut level (0 in a normalized [-1,+1] valuation domain) is used.

If KeepValues = False, the polarisation results in a crisp {-1,0,1}-valued result.

If AlphaCut = True a genuine one-sided True-oriented cut is operated.

If StrictCut = True, the cut level value is excluded resulting in an open polarised valuation domain. By default the polarised valuation domain is closed and the complementary indeterminate domain is open.

- class digraphs.RedhefferDigraph(order=5, valuationdomain=(-1.0, 1.0))[source]

Specialization of the general Digraph class for generating temporary Redheffer digraphs.

https://en.wikipedia.org/wiki/Redheffer_matrix

- Parameters:

order > 0; valuationdomain=(Min,Max).

- class digraphs.StrongComponentsCollapsedDigraph(digraph=None)[source]

Reduction of Digraph object to its strong components.

- class digraphs.SymmetricPartialDigraph(digraph)[source]

Renders the symmetric part of a Digraph instance.

Note

The not symmetric and the reflexive links are all put to the median indeterminate characteristics value!.

The constructor makes a deep copy of the given Digraph instance!

- class digraphs.XMCDA2Digraph(fileName='temp')[source]

Specialization of the general Digraph class for reading stored XMCDA-2.0 formatted digraphs. Using the inbuilt module xml.etree (for Python 2.5+).

- Param:

fileName (without the extension .xmcda).

- class digraphs.XORDigraph(d1, d2, Debug=False)[source]

Instantiates the XOR digraph of two bipolar digraphs d1 and d2 of same order.

- class digraphs.kChoicesDigraph(digraph=None, k=3)[source]

Specialization of general Digraph class for instantiation a digraph of all k-choices collapsed actions.

- Parameters:

- digraph := Stored or memory resident digraph instancek := cardinality of the choices

Back to the Table of Contents

2.4. randomDigraphs module

Inheritance Diagram

Python3+ implementation of some models of random digraphs Based on Digraphs3 ressources Copyright (C) 2015-2023 Raymond Bisdorff

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program; if not, write to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.

- class randomDigraphs.RandomDigraph(order=9, arcProbability=0.5, IntegerValuation=True, Bipolar=True, seed=None)[source]

Specialization of the general Digraph class for generating temporary crisp (irreflexive) random crisp digraphs.

- Parameters:

order (default = 10);

arcProbability (in [0.,1.], default=0.5)

IntegerValuation (default = True);

If Bipolar=True, valuation domain = {-1,1} otherwise = {0,1}

If seed is not None, the random generator is seeded

- class randomDigraphs.RandomFixedDegreeSequenceDigraph(order=7, degreeSequence=[3, 3, 2, 2, 1, 1, 0], seed=None)[source]

Specialization of the general Digraph class for generating temporary random crisp graphs (symmetric digraphs) with a fixed sequence of degrees.

- Parameters:

order=n and degreeSequence=[degree_1, … ,degree_n]>

Warning

The implementation is not guaranteeing a uniform choice among all potential valid graph instances.

- class randomDigraphs.RandomFixedSizeDigraph(order=7, size=14, seed=None)[source]

Generates a random crisp digraph with a fixed size, by instantiating a fixed numbers of arcs from random choices in the set of potential oriented pairs of nodes numbered from 1 to order.

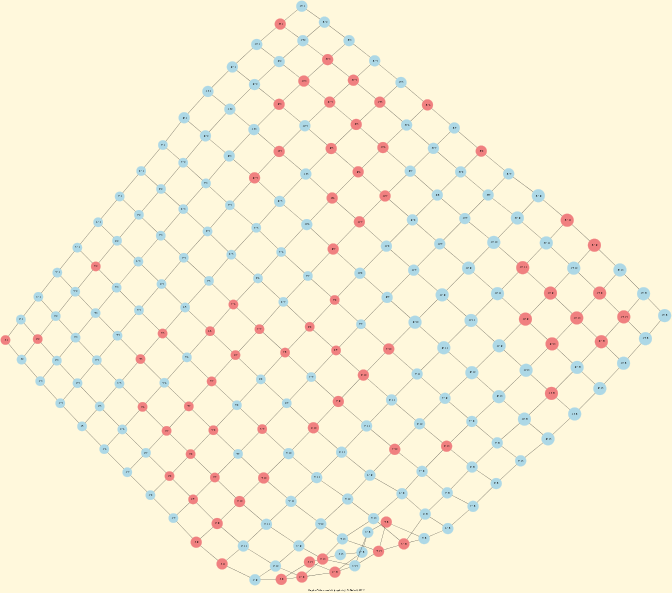

- class randomDigraphs.RandomGridDigraph(n=5, m=5, valuationdomain={'max': 1.0, 'min': -1.0}, seed=None, Debug=False)[source]

Specialization of the general Digraph class for generating temporary randomly oriented Grid digraphs of dimension n time m (default 5x5).

- Parameters:

n,m > 0;

valuationdomain = {‘min’:-1 (default),’max’: 1 (default)}.

- class randomDigraphs.RandomOutrankingValuationDigraph(order=5, weightsSum=10, distribution='uniform', incomparabilityProbability=0.1, polarizationProbability=0.05, ndigits=4, seed=None, Debug=False)[source]

Specialization of the general Digraph class for generating temporary uniform random outranking valuation digraphs.

Note

The valuation verifies the characteristic condition: r(x,y) >= -r(y,x) for x neq y

- Parameters:

weightsSum := integer (default = 10), supposed sum of criteria significance weights)

distribution := ‘uniform’ (default) | ‘triangular’

incomparabilityProbability := float (default = 0.05),

polarizationProbability := float (default = 0.05).

- class randomDigraphs.RandomPartialTournament(order=10, ndigits=2, Crisp=True, missingRelationProbability=0.3, seed=None, Debug=False)[source]

Specialization of the general Digraph class for generating temporary partial bipolar-valued tournaments.

- Parameter:

order := integer > 0 (default = 10)

ndigits := integer (default = 2

If Crisp = True, valuation domain is polarized to min (-1) and max (+1) values

missingRelationProbability := 0 < float < 1 (default 0.3)

- class randomDigraphs.RandomRegularDigraph(order=7, degree=2, seed=None)[source]

Specialization of Digraph class for random regular symmetric instances.

- Parameters:

order and degree.

- class randomDigraphs.RandomTournament(order=10, ndigits=2, Crisp=True, valuationDomain=[-1, 1], seed=None)[source]

Specialization of the general Digraph class for generating temporary complete tournaments.

- Parameter:

order := integer > 0

If valuationDomain = None, valuation is normalized (in [-1.0,1.0])

If is Crisp = True, valuation is polarized to min and max values

- class randomDigraphs.RandomValuationDigraph(order=9, ndigits=2, Normalized=True, IntegerValuation=False, seed=None)[source]

Specialization of the general Digraph class for generating temporary uniformly valuated random digraphs.

- Parameters:

order > 0, number of arcs;

ndigits > 0, number of digits if IntegerValuation = True, otherwise number of decimals;

Normalized = True (r in [-1,1] (default), r in [0,1] if False);

IntegerValuation = False (default)

If seed is not None, the random generator is seeded

- Example python3 session:

>>> from randomDigraphs import RandomValuationDigraph >>> dg = RandomValuationDigraph(order=5,Normalized=True) >>> dg.showAll() *----- show detail -------------* Digraph : randomValuationDigraph *---- Actions ----* ['1', '2', '3', '4', '5'] *---- Characteristic valuation domain ----* {'max': Decimal('1.0'), 'min': Decimal('-1.0'), 'med': Decimal('0.0'), 'hasIntegerValuation': False} * ---- Relation Table ----- S | '1' '2' '3' '4' '5' -----|----------------------------------- '1' | 0.00 0.28 0.46 -0.66 0.90 '2' | -0.08 0.00 -0.46 -0.42 0.52 '3' | 0.84 -0.10 0.00 -0.54 0.58 '4' | 0.90 0.88 0.90 0.00 -0.38 '5' | -0.50 0.64 0.42 -0.94 0.00 *--- Connected Components ---* 1: ['1', '2', '3', '4', '5'] Neighborhoods: Gamma : '4': in => set(), out => {'1', '2', '3'} '5': in => {'1', '2', '3'}, out => {'2', '3'} '1': in => {'4', '3'}, out => {'5', '2', '3'} '2': in => {'4', '5', '1'}, out => {'5'} '3': in => {'4', '5', '1'}, out => {'5', '1'} Not Gamma : '4': in => {'5', '1', '2', '3'}, out => {'5'} '5': in => {'4'}, out => {'4', '1'} '1': in => {'5', '2'}, out => {'4'} '2': in => {'3'}, out => {'4', '1', '3'} '3': in => {'2'}, out => {'4', '2'}

>>> dg.exportGraphViz()

- class randomDigraphs.RandomWeakTournament(order=10, ndigits=2, IntegerValuation=False, weaknessDegree=0.25, indeterminatenessProbability=0.0, seed=None, Comments=False)[source]

Specialization of the general Digraph class for generating temporary bipolar-valued weak, i.e. partially determinate, tournaments.

- Parameters:

order = n > 0

weaknessDegree in [0.0,1.0]: proportion of indeterminate links (default = 0.25)

If IntegerValuation = True, valuation domain = [-pow(10,ndigits); + pow(10,ndigits)] else valuation domain = [-1.0,1.0]

If seed is not None, the random number generator is seeded

Back to the Table of Contents